Thesis - Open Technologies for an Open World

Open Standards, Open Source, Open Mind


2. Open Infrastructure

"Technology is neither good nor bad, nor is it neutral" - Melvin Kranzberg

According to Patrick Gerland, "the three essentials of computer technology are: hardware - the physical machinery that stores and processes information -, software - the programs and procedures that orchestrate and control the operation of hardware - and people who know what the hardware and software can do and who accordingly design and implement applications that exert appropriate commands and controls over hardware and software to achieve desired results".

Despite the broad discussion about open source and its influence on the evolution of hardware and software, there is a general lack of knowledge about the different types of operating systems, their original and current targets, and their relationship with hardware platforms and architectures. For a better understanding of the topics that follow, let's start by discussing this infrastructure and analysing the status of the hardware and basic software. This analysis will also establish the definitions of architecture, standards and protocols used in the following chapters. We will see, for example, that new concepts such as Open Source and ASP (Application Service Provider) are largely inspired by their predecessors, Open Systems and Time Sharing. The hardware and software components are sometimes generically referred to as middleware.

2.1. Hardware

Hardware is a broad term. It covers all the equipment used to process information electronically, and it is traditionally divided into two main categories: computers and peripherals. In this section, we will concentrate our analysis on the evolution of computers, from proprietary machines into commodities; peripherals will be mentioned only in passing. We will consider the division of computers into servers and clients, discuss the traditional server families and the new generation of servers, and then briefly analyse the machines on the client side.

2.1.1. Traditional Server Families

In the beginning, they were simply called computers, and they were large-scale computers. In the mid-1980s, after the rise of the personal computer, we started to classify computers according to their capacity, their price, their "original" goal and their target users. This classification remained until the end of the 1990s, and the three families are briefly described below.

· Mainframes

Let's not talk about the big computers filling entire rooms, weighing tons and built with valves. That has not been what a mainframe is for a long time. Let's think instead about relatively small servers (often no larger than a fridge) with big processing capacity, enough to run online applications for thousands of users spread over different countries, and to run batch jobs that process millions of database updates every night. They have never been sexy (and this was not the goal: we are talking about a period between 1970 and 1985, when graphics, colours, sound and animation were not requirements for a professional computer application), and today it seems that their only way to survive is to mimic and co-operate with open systems environments.

Mainframe applications are usually referred to as "legacy" ("something received from the past"). One can question the use of this term, mainly because of the undeniable stability and performance of these machines, and considering that most of the core applications of big companies are still processed on mainframe computers, connected to computers from other platforms to benefit from a better user interface. Current mainframe brands are IBM zSeries, Hitachi M-Series, Fujitsu-Siemens PrimePower 2000 and BS2000, and Amdahl Millennium. The term mainframe is also being used by some vendors to market high-end servers, which are a direct evolution of the mid-range servers, with a processing capacity (and price) aimed at competing with the traditional mainframes.

· Mid-range servers

This category is difficult to define. The capacity of the machines in this category is increasing fast; they already claim to be high-end servers and are winning market share from the mainframes. Let's consider it a separate category for the moment. Once upon a time, there were the minicomputers, which filled the mid-range area between micros and mainframes. As of 2001, the term minicomputer is no longer used for mid-range computer systems, and most are now referred to simply as servers. Mid-range servers are machines able to perform complex processing, with distributed databases, multi-processing and multi-tasking, and to deliver service to the same number of users as a high-end server, but at a lower cost and often with a lower level of protection, stability and security. The main suppliers in this area are HP and Sun.

· Low-range servers

Initially, microcomputers were used as desktop, single-user machines. Then they started to take over functions from the minicomputers, such as multi-tasking and multi-processing. Nowadays they can provide services to several users, host web applications, and function as e-mail and database servers. Initially each server was used for a different function, and the servers were connected via the network; this is called a multi-tier server architecture. With the increase in processing speed, today each server may be used for several functions. They may also work as metaframes, providing service to users connected via client or network computers: all the applications run on the server side (which therefore needs a very good processing capacity), and only the screens are exchanged with the clients (which can be relatively slow). There are several suppliers in this area, such as Dell and Compaq.

Figure 6 - Traditional Server families

2.1.2. Servers - The new generation

The classification categories discussed above are becoming more difficult to define, and the dividing lines are blurring. The new generation of servers spans a wide range of prices and processing capacities, from entry-level models to powerful high-end servers that can be tightly connected into clusters. This approach is implemented, in different ways, by most hardware suppliers in the server market.

The differences between entry-level and high-end servers depend on the architecture and the supplier. They may involve the number of parallel processors, the type and capacity of each processor, the available memory and cache, and the connections with the peripherals. Clusters are normally used for applications demanding intensive calculations, and are composed of several servers connected by high-speed channels (when they are located in the same place) or high-speed network links (when the servers are geographically dispersed to provide high availability in case of natural disasters). There are many different ways to implement clusters so as to obtain a single point of control and maintenance, treating the cluster almost as a single server, without creating a single point of failure that could make the whole cluster unavailable when one of its elements has problems. Estimating the throughput of future applications is only possible with good capacity planning, prepared by experienced specialists. Since performance tests with the real workload are difficult to set up, there are several benchmarks to help choose a server, run by recognised independent companies, each of which gives a clear advantage to a different supplier. As cost is extremely important, and when the hardware supplier participates in the capacity planning, a Service Level Agreement (SLA) may guarantee that the installed capacity will satisfy the estimated demand. However, another differentiating factor, which is gaining importance nowadays, is the openness of the hardware and operating systems. This will be explored at length further in this document.

2.1.3. Autonomic grid on demand

The current challenge, in the quest for the holy server, is to build flexible, scalable and resilient infrastructures, able to respond to unexpected surges in traffic and use.
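To make the sizing question behind this challenge concrete (how many servers are needed to absorb a surge, and how much redundancy removes the single point of failure), here is a back-of-the-envelope sketch. The workload figures, the 70% target utilisation and the function names are hypothetical, and the availability formula assumes that nodes fail independently, which real capacity planning would not simply take for granted.

```python
import math


def servers_needed(peak_rps: float, server_rps: float, target_util: float = 0.7,
                   surge_factor: float = 1.0, spares: int = 1) -> int:
    """Servers required to carry a (possibly surging) peak load.

    Each server is loaded only up to `target_util` of its capacity, and
    `spares` extra nodes are added so that one failure does not take the
    service below the required capacity (no single point of failure).
    """
    usable_per_server = server_rps * target_util
    return math.ceil(peak_rps * surge_factor / usable_per_server) + spares


def cluster_availability(node_availability: float, nodes: int, required: int) -> float:
    """Probability that at least `required` of `nodes` independent nodes are up."""
    a = node_availability
    return sum(
        math.comb(nodes, k) * a**k * (1 - a) ** (nodes - k)
        for k in range(required, nodes + 1)
    )


if __name__ == "__main__":
    # Hypothetical workload: 12,000 requests/s at peak, 1,500 requests/s per server.
    print(servers_needed(12_000, 1_500))                    # 13
    print(servers_needed(12_000, 1_500, surge_factor=1.5))  # 19
    # Two 99%-available nodes, either of which can keep the service up.
    print(round(cluster_availability(0.99, nodes=2, required=1), 6))  # 0.9999
```

In this simplified model a single 99%-available server gives 99% availability, while a second node that can take over the whole load raises it to 99.99%; the surge case shows why headroom has to be planned, not discovered.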
The solution may be found in concepts like grid computing and autonomic computing, which are highly dependent on open standards and tightly related to open source. Grid computing is a new, service-oriented architecture that embraces heterogeneous systems and involves yoking together, via open standards, many cheap low-power computers dispersed geographically, to create a system with the high processing power typical of a large supercomputer, at a fraction of the price. The goal is to link computers and peripherals, mainly through the Internet, pooling their processing and storage capacities. All the connected systems may then profit from the set of assembled resources (such as processing capacity, memory, disk, tapes, software and data), which are global and virtual at the same time. The challenges are to connect different machines and standards, to develop software able to manage and distribute the resources over the network, to create a development platform enabling programs to take advantage of parallel and distributed tasks, to address security issues (authentication, authorisation and policies), and to develop reliable and fast networks. The basic principle is not new: HPC (high performance computing) technology is already used in academic and research settings, and peer-to-peer computing has already been used to create large networks of personal computers (e.g. SETI@home). The target now is to expand this technology to the business area via associations like the Global Grid Forum (assembling more than 200 universities, laboratories and private companies). Another initiative is the Globus project, formed by many American research institutes and responsible for the Globus Toolkit, which uses Open Source concepts for the basic software development; it has also created the Open Grid Services Architecture (OGSA), a set of open and published specifications and standards for grid computing (including SOAP, WSDL and XML).
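One of the challenges listed above, distributing work over heterogeneous machines, can be illustrated with a small sketch that splits a batch of independent work units across grid nodes in proportion to their capacity. The node names and capacity figures are hypothetical, and real grid middleware such as the Globus Toolkit also has to handle resource discovery, security and node failures, which this sketch ignores.

```python
from __future__ import annotations


def allocate(tasks: int, capacities: dict[str, float]) -> dict[str, int]:
    """Split `tasks` independent work units across nodes proportionally to capacity.

    Uses the largest-remainder method so that the shares always add up to `tasks`.
    """
    total = sum(capacities.values())
    exact = {node: tasks * cap / total for node, cap in capacities.items()}
    shares = {node: int(value) for node, value in exact.items()}  # round down first
    leftover = tasks - sum(shares.values())
    # Hand out the remaining units to the nodes with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda n: exact[n] - shares[n], reverse=True)
    for node in by_remainder[:leftover]:
        shares[node] += 1
    return shares


if __name__ == "__main__":
    # Hypothetical heterogeneous grid: relative processing power of each node.
    grid = {"lab-pc-01": 1.0, "lab-pc-02": 1.0, "dept-server": 4.0, "old-workstation": 0.5}
    print(allocate(1_000, grid))
```

A static proportional split is the simplest policy; a production scheduler would more likely hand out work dynamically, so that slow or failed nodes do not hold up the whole batch.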
OGSA version 1.0 was approved in December 2002. Other similar projects are Utility Computing (HP) and N1 (Sun).

With the costs of acquiring, deploying and maintaining an expanding number of servers weighing down potential productivity gains, the market is shifting toward various concepts of service-centric computing. These may hold deep potential not only to lower the capital and operational costs of a data centre, but also to give that infrastructure the increased availability and agility needed to respond to an ever-changing business environment. As IT systems become more complex and difficult to maintain, alternative technologies must manage and improve their own operation with minimal human intervention. Autonomic computing is focused on making software and hardware that are self-optimising, self-configuring, self-protecting and self-healing. It is similar to the grid concepts in embracing the development of intelligent, open systems that are capable of adapting to varying circumstances and preparing resources to efficiently handle the workloads placed upon them. Autonomic computers are not a separate category of products; rather, such capabilities are appearing in a growing number of systems. The objective is to help companies manage their grid computing systems more easily and cost-effectively. It is expected that when autonomic computing reaches its full potential, information systems will run themselves based on set business policies and objectives. The implementation of such a system starts with a trial phase, in which the system suggests actions and then waits for approval. After the rules have been fine-tuned, the system may run unattended. It is believed that only a holistic, standards-based approach can achieve the full benefits of autonomic computing. Several standards bodies, including the Internet Engineering Task Force, the Distributed Management Task Force and the Global Grid Forum, are working together with private companies to leverage existing standards and develop new standards where none exist. Existing and emerging standards relevant to autonomic computing include:

One example of current technologies using autonomic components is disk servers with predictive failure analysis and pre-emptive RAID reconstruction, which are designed to monitor system health and to detect potential problems or system errors before they harm the data. Performance optimisation is also obtained via intelligent cache management and I/O prioritisation.

2.1.4. Clients

· Desktops (and laptops)

In the early days of personal computers, hobby use was the main objective, and there were several completely incompatible platforms (Amiga, Commodore, TRS-80, Sinclair, Apple, among others). BASIC was the common language, although with different implementations. Then, in 1981, IBM created the PC, the first small computer able to run business applications, and in 1984 Apple released the Macintosh, the first popular graphical platform. The era of microcomputers had started. As the natural evolution of the microcomputers, today's desktops are able to run powerful stand-alone applications, such as word processing and multimedia production. They are normally based on Intel or Macintosh platforms and used by small businesses or home users. They are much more powerful when connected to one or more servers, via a network or the Internet: they become clients, and can be used to prepare and generate requests that are processed by the servers, and then receive, format and display the results. Most of the desktop market is dominated by computers derived from the IBM PC architecture, built by several suppliers (and even sold as individual parts) around Intel and AMD processors. Apple is also present, with a different architecture, traditionally in publishing and multimedia production.
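The client-server division of labour described above (the client prepares a request, the server processes it, and the client formats and displays the result) can be sketched with a connected socket pair standing in for the network. The comma-separated "protocol" and the function names are hypothetical, chosen only to keep the example self-contained.

```python
from __future__ import annotations

import socket
import threading


def recv_all(conn: socket.socket) -> bytes:
    """Read from the socket until the peer signals end-of-data."""
    chunks = []
    while chunk := conn.recv(4096):
        chunks.append(chunk)
    return b"".join(chunks)


def server(conn: socket.socket) -> None:
    """Server side: receive the request, do the processing, send back the result."""
    with conn:
        request = recv_all(conn).decode("utf-8")
        total = sum(int(x) for x in request.split(","))
        conn.sendall(str(total).encode("utf-8"))


def client(conn: socket.socket, values: list[int]) -> str:
    """Client side: prepare the request, send it, then format the reply for display."""
    with conn:
        conn.sendall(",".join(str(v) for v in values).encode("utf-8"))
        conn.shutdown(socket.SHUT_WR)  # tell the server the request is complete
        reply = recv_all(conn).decode("utf-8")
    return f"Total computed by server: {reply}"


def run_demo(values: list[int]) -> str:
    client_end, server_end = socket.socketpair()  # stands in for a network link
    worker = threading.Thread(target=server, args=(server_end,))
    worker.start()
    try:
        return client(client_end, values)
    finally:
        worker.join()


if __name__ == "__main__":
    print(run_demo([10, 20, 12]))  # Total computed by server: 42
```

Replacing `socketpair()` with a listening TCP socket on one machine and a connecting socket on another would turn the same two functions into a real networked client and server.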

· Network Computing

A recent evolution of the desktop, Network Computers (also called WebPC or NetPC) are also used as clients, with less power and more flexibility. Normally these computers have built-in software that can connect to the network and fetch the needed applications from the servers, or simply use the applications running on the servers themselves. The main objective is to allow shops and salespeople to work from anywhere in the world in the same way, with the same profile, even when the hardware interface changes. It also allows better control over personalisation, as all the information is always stored on the server, with the client working simply as the interface. The main suppliers are IBM and Compaq, with network computers working as PC clients, and Citrix, with a proprietary client able to connect only to Citrix servers and obtain a perfect image of PC client applications via the network. Similar technology can be found under the names "thin clients" and "smart display". To support the development of network computing, Sun developed a new language, Java, intended to guarantee independence from the hardware equipment or platform through the use of virtual machines.
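The idea behind this platform independence can be illustrated with a toy stack-based virtual machine: the same "bytecode" program runs unchanged on any host that has an implementation of the interpreter. The instruction set below is hypothetical and far simpler than real Java bytecode, although the Java Virtual Machine is likewise a stack machine.

```python
from __future__ import annotations


def run(program: list[tuple]) -> int:
    """Interpret a list of (opcode, *operands) tuples on an operand stack."""
    stack: list[int] = []
    for opcode, *operands in program:
        if opcode == "PUSH":
            stack.append(operands[0])
        elif opcode in ("ADD", "SUB", "MUL"):
            right, left = stack.pop(), stack.pop()  # operands are popped in reverse order
            if opcode == "ADD":
                stack.append(left + right)
            elif opcode == "SUB":
                stack.append(left - right)
            else:
                stack.append(left * right)
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return stack.pop()


# (2 + 3) * 4, written once as portable "bytecode"
PROGRAM = [("PUSH", 2), ("PUSH", 3), ("ADD",), ("PUSH", 4), ("MUL",)]

if __name__ == "__main__":
    print(run(PROGRAM))  # 20
```

Only the interpreter has to be rewritten for each new processor or operating system; programs distributed in the portable format are untouched, which is the property network computers rely on when they fetch applications from a server.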

The idea is to connect devices smaller than laptops to the network. PDAs are widely used today and are able to work in a network, even if communication costs discourage many users from using them to send e-mails and connect to the Internet. With the decreasing size of chips, and with the development of wireless protocols such as Bluetooth and WiFi, some technology "hype-makers" are trying to convince the general public that they need every single appliance connected to the network. Although there are some interesting applications, most of these devices are simply gadgets. With the current economic crisis, there is a low probability that such products will be commercially viable soon. Some companies are using these technologies in warehouse management systems, to reduce costs and improve stock control and product tracing. Linux is also currently used in set-top boxes that can select TV channels, record programs on hard disk for later viewing, and filter channels according to licence keys. See also chapter 2.3.4 (Trend: Open Spectrum).

2.2. Operating Systems

As already discussed, hardware is the equipment used to process information. An Operating System is the system software responsible for managing the hardware resources (memory, Input/Output operations, processors and peripherals) and for providing an interface between these resources and the operator or end-user. Originally, each hardware platform could run only its own operating system family. This is no longer true. Here we briefly analyse some examples of well-known operating systems and present some historical facts related to standards, architectures and open source. Later we discuss the initial dependence between the operating systems and the architecture, and how this has changed.
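As an example of how an operating system manages one of these resources, the processor, the sketch below simulates round-robin time sharing: each task runs for at most one time slice (quantum) and then goes to the back of the queue, so that several users appear to be served at once. Task names, burst lengths and the quantum are hypothetical, and real schedulers also deal with priorities, I/O waits and context-switch costs.

```python
from __future__ import annotations

from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Return the CPU timeline as (task, start, end) slices."""
    ready = deque(bursts.items())  # ready queue of (task, remaining time)
    clock = 0
    timeline = []
    while ready:
        task, remaining = ready.popleft()
        run_for = min(quantum, remaining)
        timeline.append((task, clock, clock + run_for))
        clock += run_for
        if remaining > run_for:
            ready.append((task, remaining - run_for))  # back of the queue
    return timeline


if __name__ == "__main__":
    for task, start, end in round_robin({"A": 5, "B": 2, "C": 3}, quantum=2):
        print(f"{start:2d}-{end:2d}: {task}")
```

A smaller quantum makes the system feel more responsive to each user at the price of more switching between tasks; choosing it is one of the classic trade-offs in time-sharing design.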

§ z/VM was originally built to ease the sharing of machine resources by creating simulated computers (virtual machines), each running its own operating system.

§ z/OS has always been the operating system used by large IBM systems, offering a high level of availability and manageability.

· Architecture

IBM has been the leader of the commercial mainframe market since the very beginning, with strong investments in R&D and marketing, and with a determination to use common programming languages (like COBOL) to win over programmers and customers. One key factor in IBM's success is standardization. In the 1950s, each computer system was uniquely designed to address specific applications and to fit within narrow price ranges. The lack of compatibility among these systems, and the consequent huge effort required to migrate from one computer to another, motivated IBM to define a new "architecture" as the common base for a whole family of computers. This was the System/360, announced in April 1964, which allowed customers to start with a low-cost model of the family and upgrade to larger systems as their needs grew. This scalable architecture completely reshaped the industry. It introduced a number of industry standards, such as 8-bit bytes and the EBCDIC character set, and later evolved into the S/370 (1970), 370-XA (1981), ESA/370 (1988), S/390 (1990) and z/Architecture (2000). All these architectures were backward compatible, allowing programs to run longer with little or no adaptation, while convincing customers to migrate to new machines and operating system versions to profit from new technologies. The standardization of the hardware platforms, through a common architecture, helped to unify the efforts of the different IBM departments and laboratories spread over the world.
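The practical effect of the character-set standard mentioned above is easy to see with Python's built-in `cp037` codec, one of the EBCDIC code pages (US/Canada). The sample strings are arbitrary; the sketch shows that the same text is encoded differently in ASCII and EBCDIC, and that the two orders sort letters and digits differently, something data migrations between mainframes and other platforms have to take into account.

```python
text = "HELLO 360"

ascii_bytes = text.encode("ascii")
ebcdic_bytes = text.encode("cp037")  # cp037: EBCDIC code page for US/Canada

print(ascii_bytes.hex(" "))   # 48 45 4c 4c 4f 20 33 36 30
print(ebcdic_bytes.hex(" "))  # c8 c5 d3 d3 d6 40 f3 f6 f0

# Letters come before digits in EBCDIC (0xC1.. vs 0xF0..), the opposite of ASCII.
keys = ["A1", "1A"]
print(sorted(keys, key=lambda s: s.encode("ascii")))  # ['1A', 'A1']
print(sorted(keys, key=lambda s: s.encode("cp037")))  # ['A1', '1A']

# Decoding with the right code page restores the original text.
assert ebcdic_bytes.decode("cp037") == text
```

Other countries use other EBCDIC code pages, so a real conversion has to know which one the mainframe data was written in.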
The use of a common set of basic rules was also fundamental for communication between the hardware and software departments, which allowed three different operating system families to run on the same hardware.

· Relative Openness

This architecture is proprietary (privately owned and controlled: control over its use, distribution or modification is retained by IBM), but its principles and rules are well defined and available to customers, suppliers and even competitors. This openness allowed its expansion through:

§ Hardware - Other companies (OEMs - Original Equipment Manufacturers) started to build hardware fully compatible with the IBM architecture (thus allowing its use together with IBM hardware and software), often with advantages in pricing, performance or additional functions. This gave a real boost to the IBM architecture, by giving customers a choice while reinforcing the use of its standards and helping IBM to sell even more software and hardware.

§ Software - Initially IBM was dedicated to building operating systems, compilers and basic tools. Many companies (ISVs - Independent Software Vendors) started to develop software to complement this basic package, allowing the construction of a complete environment. The end-user could then concentrate on developing its applications, while buying the operating system and the complementary tools from IBM and the ISVs.

§ Services - As the IBM architecture became the standard for large systems, consulting companies could specialize in this market segment, providing standard service offerings around the implementation of the hardware components, operating systems and software. With the recent increase in demand for this type of service, mainly due to the Y2K problem, the Euro implementation and the outsourcing hype, most of the infrastructure services in large environments have been performed by external consultants.
· The negative aspects

There is also a dark side: several times, IBM has been accused of changing the architecture without giving competitors enough time to adapt their hardware and software. Only when competition from other high-end server suppliers increased was IBM forced to review this policy, creating the concept of "ServerPac": the operating system started to be bundled with ISV software, giving the user a certain guarantee of compatibility. A second problem is IBM's complete ownership of the standards. Even if other companies found good ways of implementing new technologies, thus improving the quality of service or the performance, they always needed to adapt their findings to fit the IBM architecture. This limited the innovation coming from the OEM companies, as they could rarely consider improvements that would imply architectural changes. On the other hand, customers were never sure whether the changes imposed by IBM were really needed, or simply another way of forcing them to upgrade the operating system version or the hardware, with an obvious financial benefit for IBM. A third aspect is related to the availability of the source code of the operating systems and basic software. Originally, IBM supplied the software with most of the source code and good technical documentation about the software's internal structures. This gave customers a better knowledge of the architecture and the operating systems, allowing them to analyse problems independently, to drive their understanding beyond the documentation, and to write routines close to the operating systems. Later, IBM created the "OCO" (Object Code Only) concept, which persists today. The official reason behind this was the difficulty for IBM to analyse software problems, due to the increasing number of customers that started to modify IBM software to adapt it to local needs, or simply to correct bugs.
In fact, by hiding the source code, IBM also prevented customers from discovering flaws and developing interesting performance improvements. This also lowered the knowledge level of the technical staff, who today are largely limited to following IBM's instructions when installing and maintaining the software. The direct consequence was a lack of interest from real system programmers and students, who have been attracted to UNIX and Open Source environments. A positive consequence of the OCO policy is quicker migration from release to release: since the code itself is not modified, APIs (Application Programming Interfaces) are used instead to adapt the system to each customer's needs.

· Impacts

These factors were crucial in creating a phenomenon called downsizing: several customers, unhappy with the monopoly in large systems and with the high prices charged by IBM and followed by the OEM and ISV suppliers, started to migrate their centralized (also called enterprise) systems to distributed environments, using midrange platforms such as UNIX. In parallel (as will be discussed in chapter 2.2.2), there were many developments of applications and basic software on UNIX platforms, the most important around the Internet. To counterattack, IBM decided to enable UNIX applications to run on its mainframe platforms: MVS (the Open Edition environment, now called UNIX System Services) and VM (by running Linux as a guest operating system in virtual machines). IBM's major effort today is to create easy bridges between those application environments, and to convince customers to web-enable their old applications instead of rewriting them. It is important to note that the standardisation at the hardware level was not extended to the operating system level. As mentioned above, IBM always maintained three different operating systems, each one targeted at a different set of customers.
This was not always what IBM desired: with every new architecture level (e.g. XA, ESA), rumours circulated that customers would be forced to migrate from VM and VSE to MVS, which would become the only supported environment. These rumours still apply to VSE (note that no z/VSE has been announced yet), but z/VM became a strategic environment allowing IBM to implement Linux on all hardware platforms. Usually, mainframe implementations are highly standardized: as the professionals in this domain understood the need for standards, company policies are defined even before the systems are installed.

2.2.2. UNIX

As analysed by Giovinazzo, "while it may seem by today's standards that a universal operating system like UNIX was inevitable, this was not always the case. Back in that era there were a great deal of cynicism concerning the possibility of a single operating system that would be supported by all platforms. (…) Today, UNIX support is table stakes for any Independent Software Vendor (ISV) that wants to develop an enterprise class solution".

· Portability

The C language was created under UNIX and then, in a revolutionary exploit of recursion, the UNIX system itself was rewritten in C. This language was extremely efficient while relatively small, and it started to be ported to other platforms, allowing the same to happen with UNIX. Besides portability, another important characteristic of the UNIX system was its simplicity (the logical structure of C could be learnt quickly, and UNIX was structured as a flexible toolkit of simple programs). Teachers and students found in it a good way to study the very principles of operating systems, while learning UNIX in depth and quickly spreading its principles and advantages to the market. This helped UNIX to become the ARPANET (and later Internet) operating system par excellence. The universities started to migrate from proprietary systems to the new open environment, establishing a standard way to work and communicate.

· Distribution method

Probably one of the most important UNIX innovations was its original distribution method. AT&T could not market computer products, so it distributed UNIX in source code, to educational institutions, at no charge. Each site that obtained UNIX could modify it or add new functions, creating a personalized copy of the system. Sites quickly started to share these new functions, and the system became adaptable to a very wide range of computing tasks, including many completely unanticipated by its designers. In work initiated by Ken Thompson, students and professors at the University of California, Berkeley continued to enhance UNIX, creating the BSD (Berkeley Software Distribution) Version 4.2 and distributing it to many other universities. AT&T distributed its own version, and was the only one able to distribute commercial copies. In the early 1980s the microchip and the local-area network started to have an important impact on the evolution of UNIX.
Sun Microsystems used the Motorola 68000 chip to provide inexpensive hardware for UNIX. Berkeley UNIX included built-in support for the DARPA Internet protocols, which encouraged further growth of the Internet. "X Window" provided the standard for graphic workstations. By 1984 AT&T had started to commercialise UNIX. "What made UNIX popular for business applications was its timesharing, multitasking capability, permitting many people to use the mini- or mainframe; its portability across different vendor's machines; and its e-mail capability".
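The "flexible toolkit of simple programs" mentioned under Portability can be imitated with Python generators: each function does one small job, and they are chained like `grep ERROR app.log | sort | uniq -c` in a shell. The log lines and function names are hypothetical; in UNIX itself the filters are separate programs connected by pipes.

```python
from __future__ import annotations

from collections.abc import Iterable, Iterator


def grep(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Keep only the lines containing `pattern` (like grep with a fixed string)."""
    return (line for line in lines if pattern in line)


def sort(lines: Iterable[str]) -> Iterator[str]:
    """Sort lines lexicographically (like sort)."""
    return iter(sorted(lines))


def uniq_c(lines: Iterable[str]) -> Iterator[tuple[int, str]]:
    """Count runs of identical adjacent lines (like uniq -c on sorted input)."""
    previous, count = None, 0
    for line in lines:
        if line == previous:
            count += 1
        else:
            if previous is not None:
                yield count, previous
            previous, count = line, 1
    if previous is not None:
        yield count, previous


LOG = [
    "ERROR disk full",
    "INFO job started",
    "ERROR network down",
    "ERROR disk full",
]

if __name__ == "__main__":
    # Equivalent of: grep ERROR app.log | sort | uniq -c
    for count, line in uniq_c(sort(grep("ERROR", LOG))):
        print(f"{count:7d} {line}")
```

Because each filter only consumes and produces a stream of lines, new combinations can be assembled without changing any of the pieces, which is what made the toolkit adaptable to tasks its designers never anticipated.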

Several computer manufacturers, such as Sun, HP, DEC and Siemens, adapted the AT&T and BSD UNIX distributions to their own machines, trying to attract new users by developing new functions that exploited hardware differences. Of course, the more the UNIX distributions differed, the more difficult it became to migrate between platforms, and customers started to be locked in, as with all other computer families. As the versions of UNIX grew in number, the UNIX System Group (USG), which had been formed in the 1970s as a support organization for the internal Bell System use of UNIX, was reorganized as the UNIX Software Operation (USO) in 1989. The USO made several UNIX distributions of its own, to academia and to some commercial and government users, and was then merged with UNIX Systems Laboratories to become an AT&T subsidiary. AT&T entered into an alliance with Sun Microsystems to bring the best features from the many versions of UNIX into a single unified system. While many applauded this decision, one group of UNIX licensees feared that Sun would gain a commercial advantage over the rest of the licensees. The concerned group, led by Berkeley, formed a special interest group in 1988, the Open Software Foundation (OSF), to lobby for an "open" UNIX within the UNIX community. Soon several large companies also joined the OSF. In response, AT&T and a second group of licensees formed their own group, UNIX International. Several negotiations took place, and the commercial aspects seemed to be more important than the technical ones. The impact of losing the war was obvious: important adaptations would have to be made to the complementary routines already developed, some functions (together with some advantages) would have to be suppressed, and some innovative hardware techniques would have to be abandoned in the name of standardization. When efforts to bring the two groups together failed, each one brought out its own version of an "open" UNIX.
This dispute could be viewed in two ways: positively, since the number of UNIX versions was now reduced to two; or negatively, since there were now two more versions of UNIX to add to the existing ones. In the meantime, the X/Open Company, formed to define a comprehensive open systems environment, held the centre ground. X/Open chose the UNIX system as the basis for open systems and started the process of standardizing the APIs necessary for an open operating system specification. In addition, it looked at areas beyond the operating system level where a standard approach would add value for supplier and customer alike, developing or adopting specifications for languages, database connectivity, networking and connections with the mainframe platforms. The results of this work were published in successive X/Open Portability Guides (XPG).

· The Single UNIX Specification

In December 1993, one specification was delivered to X/Open for fast-track processing, and the Spec 1170 work was published as the industry-supported specification in October 1994. In 1995 X/Open introduced the UNIX 95 brand for computer systems guaranteed to meet the Single UNIX Specification. In 1998 the Open Group introduced the UNIX 98 family of brands, including Base, Workstation and Server, and the first UNIX 98 registered products were shipped by Sun, IBM and NCR. There is now a single, open, consensus specification, under the brand X/Open® UNIX. Both the specification and the trademark are now managed and held in trust for the industry by X/Open Company. There are many competing products, all implemented against the Single UNIX Specification, ensuring competition and vendor choice, and there are different technology suppliers from which vendors can license technology to build their own products, all of them implementing the Single UNIX Specification.
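What the Single UNIX Specification buys in practice is that code written against its system interfaces should run unchanged on any compliant system. As a minimal sketch, the function below uses `pipe()`, `fork()`, `read()`, `write()` and `waitpid()`, all defined by POSIX and exposed almost one-to-one by Python's `os` module; in C the same calls come from `<unistd.h>` and `<sys/wait.h>`. The function name is hypothetical, and the sketch assumes a POSIX system (`os.fork` is not available on Windows).

```python
import os


def pipe_round_trip(message: bytes) -> bytes:
    """Send `message` from a child process to its parent through a POSIX pipe."""
    read_fd, write_fd = os.pipe()           # pipe(2)
    pid = os.fork()                         # fork(2)
    if pid == 0:                            # child process: the writer
        status = 1
        try:
            os.close(read_fd)
            data = message
            while data:                     # write(2) may write only part of the data
                written = os.write(write_fd, data)
                data = data[written:]
            os.close(write_fd)
            status = 0
        finally:
            os._exit(status)                # never return into the parent's code path
    os.close(write_fd)                      # parent process: the reader
    chunks = []
    while chunk := os.read(read_fd, 4096):  # read(2) returns b"" at end-of-file
        chunks.append(chunk)
    os.close(read_fd)
    os.waitpid(pid, 0)                      # waitpid(2): reap the child
    return b"".join(chunks)


if __name__ == "__main__":
    print(pipe_round_trip(b"same code, any compliant UNIX"))
```

The caveat raised in the text applies here too: the sketch stays portable only because it avoids vendor-specific extensions; calls outside the specification would have to be reviewed in any migration.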
Version 3 of this specification results from the joint efforts of IEEE POSIX, The Open Group and the industry. Its main definitions include the base definitions (XBD), the commands and utilities (XCU), the system interfaces and headers (XSH) and the networking services. They are part of the X/Open CAE (Common Applications Environment) document set. In November 2002, the joint revision of POSIX® and the Single UNIX® Specification was approved as an International Standard.

· Today

The Single UNIX Specification brand program has now achieved critical mass: vendors whose products have met its demanding criteria now account for the majority of UNIX systems by value. UNIX-based systems are sold today by a number of companies. UNIX is a perfect example of a constructive way of thinking (and, above all, of acting!). It proves that academia and commercial companies can work together, using open standards regulated by independent organisations, to build an open platform and stimulate technological innovation. Companies using UNIX systems, software and hardware compliant with the X/Open specifications can be less dependent on their suppliers, since migration to another compliant product is always possible. One can argue, however, that where "non-compliant" extra features are used intensively, a migration can demand a huge effort.

2.2.3. Linux

This discussion is not completely separate from the previous one. Developed by Linus Torvalds, Linux is a product that mimics the form and function of a UNIX system, but is not derived from licensed source code. Rather, it was developed independently by a group of developers in an informal alliance on the net (peer to peer). A major benefit is that its source code is freely available under the GNU copyleft, enabling the technically astute to alter and amend the system. It also means that many utilities and specialist drivers are freely available on the net.

· Brief Hacker History

The hackers, not to be confused with the "crackers", appeared in the early sixties. They define themselves as people who "program enthusiastically" with "an ethical duty (…) to share their expertise by writing free software and facilitating access to information and computing resources wherever possible". The first software-sharing community, at the MIT Artificial Intelligence Lab, eventually collapsed. The hacker Richard Stallman then quit his job at MIT in 1984 and started working on the GNU system, intended to be a free operating system compatible with UNIX. "Even if GNU had no technical advantage over UNIX, it would have a social advantage, allowing users to cooperate, and an ethical advantage, respecting the user's freedom". He started by gathering pieces of free software and adapting them. He then developed the missing parts, like a compiler for the C language and a powerful editor (EMACS). The Free Software Foundation was created in 1985, and by 1990 the GNU system was almost complete; the only major missing component was the kernel. In 1991, Linus Torvalds developed a free UNIX-like kernel using the FSF toolkit. Around 1992, the combination of Linux and GNU resulted in a complete free operating system. By late 1993, GNU/Linux could compete on stability and reliability with many commercial UNIX versions, and hosted more software.
Linux was based on good design principles and a good development model. Typically, complex software was developed in a carefully coordinated way by a relatively small group of people. Linux, in contrast, was developed by a huge number of volunteers coordinating through the Internet. Portability was not the original goal. Linux was originally conceived to run on a personal computer (386 processor). Later, some people ported the kernel to the Motorola 68000 series, used in early personal computers, using an Amiga computer. However, the first serious porting effort targeted the DEC Alpha machine. The entire code was reworked in such a modular way that future ports became simpler, and they started to appear quickly. This modularity was essential for the new open-source development model, as it allowed several people to work in parallel without risk of interference. It also made it easier for a limited group of people, still coordinated by Torvalds, to receive all the modified modules and integrate them into a new kernel version.

· To be or not to BSD

Besides Linux, there are many freely available UNIX and UNIX-compatible implementations, such as OpenBSD, FreeBSD and NetBSD. According to Gartner, "Most BSD systems have liberal open source licenses, 10 years' more history than Linux, and great reliability and efficiency. But while Linux basks in the spotlight, BSD is invisible from corporate IT". BSDI is an independent company that markets products derived from the Berkeley Software Distribution (BSD), developed at the University of California at Berkeley from the late 1970s onwards. Its system is the operating system of choice for many Internet service providers. As with Linux, it is not a registered UNIX system, though in this case there is a common code heritage if one looks far enough back in history. The creators of FreeBSD started with the source code of Berkeley UNIX, and its kernel is directly descended from that source code.

Recursion or fate, the fact is that Linux history is following the same steps as UNIX. Linux was not developed from the source code of one of the UNIX distributions. As a result, it has several technical specifications that are not (and will probably never be) compliant with UNIX 98. Soon, Linux started to be distributed by several different companies worldwide, such as SuSE, Red Hat, MandrakeSoft, Caldera International/SCO and Conectiva. Each bundled it with a plethora of open source software, normally in two different packages: one aimed at home users and another at companies. In 2000, two standardization groups, LSB and LI18NUX, appeared and merged under the name Free Standards Group, "organized to accelerate the use and acceptance of open source technologies through the application, development and promotion of interoperability standards for open source development". Caldera, Mandrake, Red Hat and SuSE currently have LSB-certified versions. These standards are still at an early stage and are not enough to guarantee compatibility among the different distributions. In a commercial manoeuvre to reduce development costs, SCO, Conectiva, SuSE and Turbolinux formed a consortium called UnitedLinux. Its goal is a joint server operating system for enterprise deployment. Software and hardware vendors can then concentrate on one major business distribution instead of certifying for multiple distributions. This is expected to bring new technologies to Linux customers sooner and to reduce the time needed to develop drivers and interfaces. Consulting companies can also concentrate their efforts on providing more and new services. However, Red Hat, the dominant seller in the enterprise market segment, was not invited to join the UnitedLinux family before its announcement, and few expect it to join now. Also missing are MandrakeSoft and Sun Microsystems.
With help from important IT companies, the Free Standards Group is expected to play the same unifying role as The Open Group, which was essential for the common UNIX specification. This is very important to give Linux more credibility with companies willing to use it seriously in their production environments. As in the UNIX world, the Linux sellers could still keep their own sets of complementary products, which would be an important factor in stimulating competition. The distributions targeting home users can remain separate. They promote the diversity needed to stimulate creativity and let natural selection choose the best software for enterprise usage. Darwin would be happy. So would the users.

Linux has already proved to be a reliable, secure and efficient operating system, ranging from entry-level up to high-end servers. It is as difficult to master as other UNIX platforms. Its big advantage is that it is economically affordable: almost everybody can have it at home and learn it by practice. However, is it technically possible for everyone to install and use it? The main argument in favour of all open source software is that good support can be obtained from service providers. Help is also available from the open source community, through Internet tools like newsgroups and discussion lists. Still, our question may have two different answers: one for servers, another for clients. It is certainly possible for companies to replace existing operating systems with Linux. The effort needed relates more to converting the applications than to migrating the servers or the operating systems. Several companies have been created using Linux servers to reduce their initial costs, and they worked with specialized Linux people from the beginning. Most of the companies replacing other platforms with Linux already have a support team with a technological background, responsible for installing and maintaining the servers. Part of this team has already played with Linux at home and has learnt the basics. The other part is often willing to learn it, compare it with the other platforms, and discover what it has that is seducing the world. Desktop users face a completely different scenario. Linux is based on a real operating system, conceived to be installed and adapted by people with a good technical background who are willing to dedicate some time to this task. Most people installing Linux at home (except probably the hackers themselves) mainly want to learn it, play around with it and compare it with other systems.
Linux's main objective (to use the recursion so dear to hackers) appears to be Linux itself. In its most recent releases, the installation process is no longer as difficult as before. Even so, simple tasks such as adding new hardware, playing a DVD, writing a CD or installing another piece of software can quickly become nightmares. Linux cannot yet match Windows on plug-and-play digital media or on the more peripheral duties. One should remain optimistic and hope that the next versions will finally be simpler. The open source community is working on it, through the creation of the Desktop Linux Consortium.

· Ubiquity … and beyond!

Linux is considered the only operating system that will certainly run on architectures that have not yet been invented. Four major segments of Linux key marketplaces are:

§ Clusters - Linux performs very well when connected in clusters (due to its horizontal scalability), like the big supercomputing clusters in universities and research labs.

§ Appliances - Linux is found as an embedded operating system in all kinds of new applications, like major network servers, file and print servers, and a number of new kinds of information appliances. It is very cost-effective, reliable and fast. In addition, its open standards make these appliances easier to operate.

Microsoft's first product, in 1975, was BASIC, installed in microcomputers used by hobbyists. In 1980, IBM started to develop the personal computer (PC) and invited Microsoft to participate in the project by creating the operating system. Microsoft bought a system called QDOS from a small company called Seattle Computer Products. It used QDOS as the basis for MS-DOS, which became the operating system of choice, distributed by IBM as PC-DOS. In 1983, Microsoft announced its plan to bring graphical computing to the IBM PC with a product called Windows. As Bill Gates explains, "at that time two of the personal computers on the market had graphical capabilities: The XEROX Star and the Apple Lisa. Both were expensive, limited in capability, and built on proprietary hardware architectures. Other hardware companies couldn't license the operating systems to build compatible systems, and neither computer attracted many software companies to develop applications. Microsoft wanted to create an open standard and bring graphical capabilities to any computer that was running MS-DOS".

· First steps

Microsoft worked with Apple during the development of the Macintosh and created its first two graphical products for this platform: a word processor (Microsoft Word) and a spreadsheet (Microsoft Excel). Microsoft also cooperated with IBM on the development of OS/2, which was intended to become the graphical replacement for MS-DOS. Using this knowledge, and many ideas from XEROX research, Microsoft released the first version of Windows in November 1985. The impact of OS/2 was very limited, as it did not provide full compatibility with the old MS-DOS software. Windows, on the other hand, was a large success. The main reason was that it supported the existing character-oriented MS-DOS applications while also exploiting the potential of the new graphical software. Application support for Windows was initially sparse.
To encourage earlier application support, Microsoft licensed a free runtime version of Windows to developers, which let them make their programs available to end users. This runtime version allowed the application to be used, although it did not offer the benefits of the full Windows environment. With version 3.1, Windows reached maturity. The incredible number of bugs was reduced (mainly those related to the user interface and to the constant need to reboot the system), and graphical applications became widely available. Its popularity blossomed with the release of a completely new Windows software development kit (SDK). The SDK helped software developers focus more on writing applications and less on writing device drivers. Popularity also grew with its widespread acceptance among third-party hardware and software developers. The bugs have persisted, however, and today the security flaws they open are the main source of viruses.

· Different families with different editions

Microsoft decided to keep compatibility with MS-DOS applications to avoid losing customers. However, this also prevented the Windows desktop systems from becoming stable, and slowed the pace of innovation. Microsoft then started to develop a completely new desktop system from scratch, oriented to business customers who needed more stability. After 1993, two different families of desktop operating systems co-existed. The first was Windows 3.x (which later evolved into Windows 9x and Windows Millennium), descended from MS-DOS. The second was Windows NT (which later evolved into Windows 2000), built entirely around graphical interfaces and new hardware features. In 2001, Microsoft abandoned the MS-DOS-based family and used the NT code base to create Windows XP. It came in two different packages and prices: one "edition" aimed at home users, another for professional use.

In July 1993, Microsoft inaugurated its Windows server family with the release of Windows NT Advanced Server 3.1. It was designed to act as a dedicated server in a client/server environment, for Novell NetWare, Banyan VINES and Microsoft networks. Typical roles were application, mail, database and communications server. The next releases improved connectivity with UNIX environments (3.5), integrated a web server (4.0) and consolidated the web facilities with ASP (2000). Next to come is Windows .NET Server, which aims to better exploit XML Web services and the .NET framework. The different editions of the server family are Standard, Enterprise, Datacenter and Web.

· Easy-to-use, easy-to-break

The main advantage of the Windows families over Linux and UNIX is their easy installation and operation. As we saw, Microsoft copied the concepts of the Apple Macintosh and always based its Windows products on graphical interfaces. Intuitive icons open the doors to all tasks, from running applications to configuring the hardware and changing software options, colours and sounds. This seems to be a major benefit for end users. They do not need to learn operating system concepts to be able to write a letter, scan some photos, combine everything and print. The problems start to appear when something changes, such as the version of the operating system or a new hardware component. Even simply installing or removing an inoffensive application can cause them. These tasks often require the intervention of a specialist, or a complete reinstallation of the Windows system. This happens even with the recent XP releases, despite Microsoft publicity stating the opposite. In the end, the main technical advantages disappear. Only market dominance, document format compatibility and marketing strategies convince the user to keep Windows rather than install Linux or buy a Macintosh.
· Embrace and Extend

Microsoft's strategy has always been to identify good opportunities, react quickly and offer a solution (often without taking care of quality) to conquer the market. The solution is later improved to keep the customers. Again, as was the case for IBM with the mainframes, marketing strategies proved more important than technological features, paving the way for "de facto" standards. Windows is extremely important for Microsoft's marketing strategies, serving as a Trojan horse to introduce "free" Microsoft programs. This happened in 1995, when Internet Explorer gained an impressive market share in a short time. Again, instead of developing its own concept and product, Microsoft simply licensed Mosaic from the company Spyglass and quickly modified it into Internet Explorer. Then, by developing extensions to the standard HTML language, it almost eliminated Netscape from the market. This leads us to the conclusion that Microsoft's practices aim at a complete monopoly, through the complete elimination of the competition. The monopoly would cover not only operating systems but also applications, appliances, the Internet and content. This has already been examined by antitrust authorities in the United States and Europe.

· Hardware Independence

One of the main reasons behind Windows' success is its independence from the hardware supplier. The processor is usually built by Intel or AMD. The computers themselves, however, can be manufactured by any company following the PC architecture specifications, or even assembled at home from standard components available in any electronics shop. The Apple Macintosh and IBM PS/2 architectures, on the other hand, force the customer to buy hardware and software from the same supplier.
There is no compatibility with other vendors, so the cost remains higher than for PC/Windows platforms. These benefit from competition and a larger market share, which lower production costs. Yet Windows systems remain dependent on the PC architecture. This may be a serious disadvantage compared with the portability offered by Linux systems. This helps to explain the strategies adopted by Microsoft's competitors, to be studied in the next chapter.

One important difference between the proprietary MS-DOS and Windows families and the open systems is not technical, but social: there is no such thing as a Windows hacker. Windows environments were conceived to run alone, not in networks. The UNIX systems were built with strong networking facilities from their first versions. This allowed a large community to form that used, analysed and developed the systems and cooperated on their improvement. Moreover, the UNIX systems appeared in the academic world, which encouraged this community to be extremely creative and to look for technological innovations. This concept, also known as peer-to-peer (P2P), reached its climax with Linux, which was entirely constructed (and still evolves) through the Internet. As Eric Raymond puts it, "the MS-DOS world remained blissfully ignorant of all this. Though those early microcomputer enthusiasts quickly expanded to a population of DOS and Mac hackers orders of magnitude greater than that of the 'network nation' culture, they never became a self-aware culture themselves. The pace of change was so fast that fifty different technical cultures grew and died as rapidly as mayflies, never achieving quite the stability necessary to develop a common tradition of jargon, folklore and mythic history. (…) The fact that non-UNIX operating systems don't come bundled with development tools meant that very little source was passed over them. Thus, no tradition of collaborative hacking developed." In a similar vein, as Himanen explains, "Bill Gates (…) gained hacker respect by programming his first interpreter of the BASIC programming language (…) [but] in Microsoft's subsequent history, the profit motive has taken precedence over the passion".

2.2.5. Other Operating Systems

Released in 1984 and integrating hardware and software into the same platform, the Apple Macintosh was the first to have good graphical interfaces for all the basic functions. Apple refused (until 1995) to let anyone else make hardware that would run its operating system. This limited its success and prevented it from becoming the standard for graphical platforms. A technical comparison with the PC and Windows is not easy to make, and the eternal discussion is far from over. Apple addicts have always considered the Mac a more stable platform, with better results for multimedia applications. The counterargument is that modern Windows/Intel systems have improved their graphics and sound with the help of add-on cards, and can produce the same quality. The reality today is that Mac systems are more expensive, and are still preferred by designers and artists to produce graphics, music and images. The new version, Mac OS X, is based on OpenStep, the UNIX-based operating system from NeXT, and implements vector-based graphical interfaces.

· IBM OS/2

It is surprising that IBM still maintains versions of its OS/2 Warp operating system. In 1984, having realized the real market potential of personal computing, IBM decided to create a closed platform (PS/2). It had a stronger architecture than the IBM PC, designed with mainframe customers in mind. It came with a solid operating system (OS/2), able to run professional applications and compete with the DOS, Windows and Macintosh families. The lower prices of the PC clones prevented this from happening, since the clones already had a large installed base and were sold with DOS/Windows pre-installed. Contrary to IBM's hopes, price proved a more important factor than reliability, and the OS/2 platform was never widely used.

· IBM OS/400

OS/400 is the only operating system that can unequivocally be called a "minicomputer" operating system. It is the evolution of the IBM midrange System/36 and System/38 systems, implemented on PowerPC chips. There is no good technical reason to keep both the UNIX and OS/400 platforms, but the large installed base of these systems should guarantee their continuity.

2.2.6. Classification

Considering the two major criteria discussed in the previous paragraphs, scalability and openness, the operating systems can be classified using the following chart:

Scalability is the ability to increase system throughput by adding processors or new systems, with a low impact on the effort needed to manage the system (license costs and human resources). Linux outperforms the other systems for several reasons. It can run on virtually every hardware platform and machine size, its cost is reduced, its source code is open, and it uses open standards and protocols.

The number of installed mainframe and UNIX systems for critical systems in large companies, in addition to the decades of experience and fine tuning, help these systems to have more confidence from IT specialists. The reduced initial setup costs for windows and Linux platforms justify their choice when price is an important element. Goldman Sachs considers Linux as "Enterprise Class", and estimates that Linux will soon replace windows in medium-large servers . The cost analysis and comparisons will be detailed on chapter 6.4. 2.3. Communication The co-existence of different standards on the hardware and software domains may stimulate the competition, the innovation and the market. For the network infrastructures, the absence of widely accepted standard and protocols can simply avoid the communication to happen in a global way. One of the first problems identified when the Internet ideas started to flourish was "to understand, design, and implement the protocols and procedures within the operating systems of each connected computer, in order to allow the use of the new network by the computers in sharing resources." The

free exchange of information via the Internet, independently of the

hardware or operating system we may use, is possible due to the previous

definition of standards, which we may classify in three different

tiers: The communication via the network, the addressing of each node

and the content formatting and browsing. Standard bodies are responsible

for their definition, publication and conformity. Let us discuss the

communication and addressing tiers. The content will be discussed

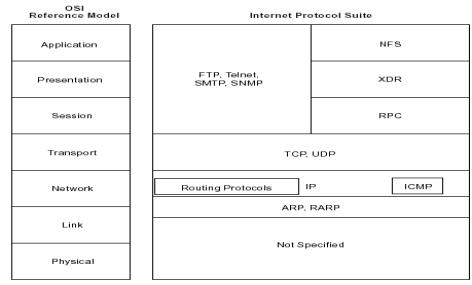

in details on the chapter 4. As we saw in chapter 2, the first mainframes and minicomputers performed very specific tasks, independent of any other computer, and the users and operators were connected locally, normally in the same building than the machines. When distant systems started to connect with each other, and to remote users, clear conventions needed to be established. · The OSI model The OSI reference model was developed by the International Organization for Standardization (ISO) in 1984, and is now considered the primary architectural model for inter-computer communications. OSI is a conceptual model composed of seven layers, each specifying particular network functions. It only provides a conceptual framework. Several communication protocols have been developed using it as a reference, allowing their interoperability. The seven layers of the OSI reference model can be divided into two categories: the upper layers (which deal with application issues and generally are implemented only in software) and the lower layers (which handle data transport issues). · The Internet Protocols In the mid-1970s, the Defense Advanced Research Projects Agency (DARPA) started to develop a packet-switched network that would facilitate communication between dissimilar computer systems at research institutions. The result of this development effort was the Internet protocol suite that contained several basic network protocols. Among others, Transmission Control Protocol (TCP) and the Internet Protocol (IP) are the two best known and commonly used together . One

of the main reasons of their quick adoption by the research institutions

(and later the whole market) was their openness. The Internet Protocol

suite is nonproprietary, born in an academic environment close to

the UNIX developments, and soon both work in synergy. TCP/IP was included

with Berkeley Software Distribution (BSD) UNIX to become the foundation

of the Internet . · SNA In 1974, IBM introduced a set of communications standards, called SNA , which was extremely convenient to the mainframes hierarchical topologies. Like other IBM definitions for hardware and operating systems architectures, it is a proprietary protocol, but clearly defined and open for the use of other manufacturers. This allowed IBM and many other companies to provide servers communication hardware and programming products, which could be connected by the usage of common communication definitions, hardware specifications and programming conventions. The administration of a SNA network is centralized, what improves its security while reducing its flexibility. IBM tried to evolve SNA to an open-standard known as High-Performance Routing (HPR), without success. SNA is still used in the core mainframe connections, although it is being replaced by TCP/IP in distant connections, due to pressures of intranets and the Internet, and for more flexibility. 2.3.2. Addressing A set of techniques is used to allow the identification of each single machine in the world, to allow the Internet packets to be distributed efficiently. Each computer with capability to be connected to the Internet receives from its manufacturer a single and unique address (called MAC address ). When a computer connects to an Internet or intranet server, it receives a network address . As the IP addresses are quite difficult to remember, a domain name is created for important servers (like web or FTP servers) . A hierarchy of systems have been created to locate a domain name. It is called DNS . The intelligence behind the DNS servers is provided by the open source program BIND, and soon the "Domain Wars" started to decide who would control the domains. The conflicts have been solved by a policy specifying the codification of roles in the operation of a domain name space: § Registrant - The entity that makes use of the domain name. 
§ Registrar - The agent that submits change requests to the registry on behalf of the registrant.

§ Registry - The organization that has edit control of the name space's database.

A non-profit organization, ICANN, was created to oversee the administration of Internet resources. These include addresses (by delegating blocks of addresses to the regional registries), protocol identifiers and parameters (by allocating port numbers, etc.) and names.

2.3.3. The Internet

· History

The Internet is the result of many technologies developing in parallel around the Advanced Research Projects Agency (ARPA, today called DARPA) of the U.S. Department of Defense. Motivated by the launch of the first Sputnik, ARPA started to design a communications system invulnerable to nuclear attack. This was the ARPANET project, based on packet-switching communication technology. "The system made the network independent of command and control centers, so that message units would find their own routes along the network, being reassembled in coherent meaning at any point in the network". It is important to notice that, from the beginning, the concept behind the network had stronger cultural and social than technical motivations: "The ARPA theme is that the promise offered by the computer as a communication medium between people, dwarfs into relative insignificance the historical beginnings of the computer as an arithmetic engine." J.C.R. Licklider perceived the spirit of community created among the users of the first time-sharing systems. He envisioned an "Intergalactic Network" formed by academic computer centers. His ideas took almost a decade to become reality. On October 29, 1969, a host-to-host connection was successfully established between the University of California, Los Angeles (UCLA) and the Stanford Research Institute (SRI), creating the network roots of the Internet. At the same time, as we have already discussed, UNIX was born and the Internet Protocols were defined. In 1973, 25 computers were connected using the ARPANET structure, which was opened to the research centers cooperating with the US Defense Department. Other networks started to appear, using the ARPANET as their backbone communication system. They aimed to connect scientists from all disciplines and military institutions.
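A toy model can illustrate the packet-switching idea quoted above, where message units find their own routes and are reassembled at the destination. The Python sketch below is a simplification under stated assumptions. It splits a message into numbered packets and shuffles them to simulate out-of-order arrival, as if each packet had travelled a different route. It then rebuilds the message from the sequence numbers. The names Packet, split_message and reassemble are hypothetical. Real protocols such as IP and TCP also deal with routing, loss and retransmission, none of which is modelled here.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """A toy packet: one fragment of a message plus what is needed to rebuild it."""
    seq: int      # position of this fragment within the original message
    total: int    # number of fragments the message was split into
    payload: str  # the fragment itself


def split_message(message: str, size: int) -> list[Packet]:
    """Cut a message into fixed-size, numbered packets."""
    if size <= 0:
        raise ValueError("packet size must be positive")
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq=n, total=len(chunks), payload=c) for n, c in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> str:
    """Rebuild the message, whatever order the packets arrived in."""
    if not packets:
        return ""
    total = packets[0].total
    by_seq = {p.seq: p.payload for p in packets}  # duplicate packets collapse onto one entry
    missing = [n for n in range(total) if n not in by_seq]
    if missing:
        raise ValueError(f"incomplete message, missing packets {missing}")
    return "".join(by_seq[n] for n in range(total))


if __name__ == "__main__":
    packets = split_message("Message units find their own routes", size=5)
    random.Random(1969).shuffle(packets)  # simulate packets arriving via different routes
    print(reassemble(packets))  # → Message units find their own routes
```

The sequence number carried by each packet is what lets the receiver restore the original order. Without it, packets arriving over different routes could not be put back together reliably.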
This network of networks, formed during the 1980s, was called ARPA-INTERNET, then simply INTERNET. It was still supported by the Defense Department and operated by the National Science Foundation. The Internet was privatized in 1995, under commercial pressure and with the growth of private corporate networks and of non-profit cooperative networks. About 100,000 computer networks were interconnected around the world in 1996, with roughly 10 million computer users. The Internet has posted the fastest rate of penetration of any communication medium in history. In the United States, radio took 30 years to reach 60 million people, and TV reached this level of diffusion in 15 years. The Internet did it in just three years after the development of the World Wide Web.

· The World Wide Web

In addition to being a means of communicating via e-mail, the Internet has become a means of propagating information via hypertext documents. Hypertext documents contain specific words, phrases or images that are linked to other documents. A reader of a hypertext document can access these related documents as desired, usually by pointing and clicking with the mouse or using the arrow keys on the keyboard. In this manner, the reader can explore related documents or follow a train of thought from document to document through an intertwined web of related information. When implemented on a network, the documents within such a web can reside on different machines, forming a network-wide web. Similarly, the web that has evolved on the Internet spans the entire globe and is known as the World Wide Web.

· Standard Bodies

A wide variety of organizations contribute to internetworking standards. They provide forums for discussion, turn informal discussion into formal specifications, and disseminate specifications after they are standardized.
Most standards organizations create formal standards through a defined process: organizing ideas, discussing the approach, developing draft standards, voting on all or certain aspects of the standards, and then formally releasing the completed standard to the public. They are normally independent and aim to stimulate healthy competition, but they are often influenced by companies and lobbies.

· Internet Standardization

Some of the organizations that contributed internetworking standards to the foundation of the Internet are listed in Appendix B. The heart of Internet standardization is the IETF, which coordinates development through working groups. According to Scott Bradner, co-director of the Transport Area in the IETF, "IETF working groups created the routing, management, and transport standards without which the Internet would not exist. They have defined the security standards that will help secure the Internet, the quality of service standards that will make the Internet a more predictable environment, and the standard for the next generation of the Internet protocol itself. (…) There is enough enthusiasm and expertise to make the working group a success". Apart from TCP/IP itself, all of the basic technology of the Internet was developed or refined in the IETF. The working groups produce the base documents of the standardization process, known as RFCs (Requests for Comments). The RFC series of documents on networking began in 1969 as part of the original ARPANET project. Initially, RFCs were submitted for comments (as the name indicates) but, since the Internet developed, they have generally gone through an extensive review process before publication. In the end, an RFC may become a Proposed Standard (which, following the standards track, may later become a Draft Standard and then an Internet Standard), a Best Current Practice, an informational document, etc.
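The standards track just described can be viewed as a small state machine. The Python sketch below follows the three maturity levels of RFC 2026 (later reduced to two by RFC 6410) and its minimum residence times: at least six months as a Proposed Standard and at least four months as a Draft Standard. It is a simplification under those assumptions: the `advance` helper is hypothetical, and real advancement also requires IESG approval and evidence such as independent, interoperable implementations, which are not modelled.

```python
from enum import Enum


class Maturity(Enum):
    PROPOSED = "Proposed Standard"
    DRAFT = "Draft Standard"
    INTERNET = "Internet Standard"


# Next level on the standards track and the minimum number of months a
# specification must stay at its current level before advancing (RFC 2026).
NEXT_LEVEL = {Maturity.PROPOSED: Maturity.DRAFT, Maturity.DRAFT: Maturity.INTERNET}
MIN_MONTHS = {Maturity.PROPOSED: 6, Maturity.DRAFT: 4}


def advance(level: Maturity, months_at_level: int) -> Maturity:
    """Return the next maturity level, enforcing only the minimum waiting time."""
    if level not in NEXT_LEVEL:
        raise ValueError(f"{level.value} is the last level of the standards track")
    if months_at_level < MIN_MONTHS[level]:
        raise ValueError(
            f"a {level.value} must remain at that level for at least "
            f"{MIN_MONTHS[level]} months"
        )
    return NEXT_LEVEL[level]


if __name__ == "__main__":
    level = advance(Maturity.PROPOSED, months_at_level=8)
    print(level.value)  # Draft Standard
```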
RFCs are freely available on the Internet and may be republished in their entirety by anyone. Interesting examples are RFC 2026, which recursively describes the Internet standards process, and RFC 3160, known as "The Tao of IETF". Proposed standards are reviewed and approved by the IESG (Internet Engineering Steering Group), while the IAB provides architectural oversight. Longer-term research is carried out by the IRTF. Also important are the IANA, responsible for names, domains and protocol codes, and the W3C, which coordinates work on the standards related to the Web, like the HTTP protocol and the HTML language. W3C standards go through three stages. The first is the draft, in which proposals are made public and are open to large-scale review and change. After a draft has been available for a certain time, it moves to the second stage, known as Proposed Recommendation. During a 6-week period, W3C members vote to decide whether to adopt the proposal as the final standard, a Recommendation (either as it stands or with minor changes). If the proposal is rejected, it may return to draft status for further modification and consultation.

2.3.4. Trend: Open Spectrum

Open spectrum is a radical idea that could transform the communications landscape as profoundly as the Internet did, by eliminating telephone, cable and Net access fees. The idea is that smart devices cooperating with one another function more effectively than huge proprietary communication networks, by treating the airwaves as a commons shared by all. In an open spectrum world, wireless transmitters would be as ubiquitous as microprocessors (in televisions, cars, public spaces, handheld devices), would tune themselves to free spectrum, and would self-assemble into networks. If the Internet revolution started with the ARPANET, the wireless paradigm is happening via Wi-Fi, a protocol that uses a narrow slice of spectrum that is already open.
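The notion of transmitters that "tune themselves to free spectrum" can be sketched as a simple listen-before-talk rule: measure the energy on each candidate channel and transmit on the quietest one that falls below an idle threshold. This Python sketch only illustrates the principle; it is not the algorithm of Wi-Fi or of any other standard, and the channel numbers, the `-85.0` dBm threshold and the scan values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ChannelReading:
    channel: int
    energy_dbm: float  # hypothetical measured signal energy on the channel


def pick_free_channel(readings: List[ChannelReading],
                      idle_threshold_dbm: float = -85.0) -> Optional[int]:
    """Listen before talk: choose the quietest channel below the idle threshold.

    Returns None when every channel is busy, i.e. the device should wait.
    """
    idle = [r for r in readings if r.energy_dbm < idle_threshold_dbm]
    if not idle:
        return None
    return min(idle, key=lambda r: r.energy_dbm).channel


if __name__ == "__main__":
    scan = [ChannelReading(1, -60.0), ChannelReading(6, -92.0), ChannelReading(11, -88.0)]
    print(pick_free_channel(scan))  # 6
```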
When spectrum licensing was established in the early 20th century, radios were primitive, as was the regulatory model used to govern them: broadcasters needed an exclusive slice of the spectrum. Today, digital technologies let many users occupy the same frequency at the same time. In 2004, half of the laptops used at work are expected to have wireless connections, and by 2006 Intel hopes to incorporate transmitters into all of its processor chips.

2.4. Open Trends

The current trend is to increase the level of hardware and software independence, transforming IT into a commodity.

· Applications dependent on the hardware

Initially, the customer was entirely tied to the hardware supplier. All the software was written in machine code (or in low-level languages like Assembler), which differed for each machine, even from the same supplier. This incompatibility caused several problems when these machines needed to be replaced or simply upgraded to a larger model:
§ Compatibility - All the code had to be reviewed, and sometimes completely rebuilt. Some of the code could be bought from the hardware supplier (mainly the basic software tools), but most of it was developed internally.
§ Migration tasks - The formats and protocols used to store the data also differed among hardware platforms. To transfer the data, conversion procedures had to be written.
§ Specialization - Staff had to be trained on the new platform. This also motivated some people to change jobs to keep their technological knowledge and specializations current.

The hardware suppliers started to create architectures (as in the case of the IBM S/360, analysed on page 21) to reduce their development tasks, cut maintenance costs, and simplify migration among different machines using the same architecture. Nevertheless, the customer was still tied to the hardware manufacturer. Changing the hardware platform also meant changing the operating system, which implied redesigning the application and the bridges between the system and the user interfaces. In most cases, the complete application is rebuilt to make better use of the facilities of the new operating system. This is what happens, for example, in downsizing processes, when companies migrate from mainframe applications to smaller platforms; such a migration may take more than a decade to complete.

The development of open systems platforms, like UNIX, freed the customer from the hardware supplier. Since the creation of the Single UNIX Specification (see page 27), application portability has been largely assured. However, the customer is still tied to the extensions to the base platform provided by the supplier. To change the hardware supplier or platform, any use of hardware extensions and vendor-specific software features must be checked and rebuilt. To avoid this, the use of these "extra features" should be kept to a minimum and well documented. Many manufacturers use the tie between their hardware and software to distinguish their systems, while other companies treat hardware and software as separate businesses.
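The advice to keep vendor "extra features" to a minimum and well documented can be illustrated with a small Python sketch: the platform-specific call is isolated in a single, documented function, detected at run time, and backed by a portable fallback, so moving to another platform does not require changes elsewhere in the application. `usable_cpu_count` is a hypothetical helper; `os.sched_getaffinity` (available only on some platforms, such as Linux) and `os.cpu_count` are standard-library functions.

```python
import os


def usable_cpu_count() -> int:
    """Return the number of CPUs the current process may use.

    Platform extension: os.sched_getaffinity() exists only on some systems
    (for example Linux) and honours CPU affinity restrictions.
    Portable fallback: os.cpu_count(), which may return None if unknown.
    """
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))  # 0 means "the calling process"
    return os.cpu_count() or 1


if __name__ == "__main__":
    print(f"usable CPUs: {usable_cpu_count()}")
```

The rest of the application calls only `usable_cpu_count()`, so the extension is used in exactly one place, which is easy to review when the platform changes.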

To reduce the effort of maintaining different hardware platforms while continuing to develop operating systems that satisfy different customers' requirements, some companies started to standardize their hardware platforms, keeping them compatible with different operating systems. This was the case with IBM, initially within its mainframe family, where one line of computers (zSeries) is compatible with three operating system families (z/OS, z/VM, z/VSE). More recently, with IBM concentrating its revenue on services rather than on software or machines, all lines of IBM computers (zSeries, xSeries, iSeries and pSeries) have become compatible with Linux. Customers can now even add Linux and open source applications to IBM UNIX machines (pSeries), co-existing with IBM's UNIX operating system (AIX 5L).

Originally, programming required the arduous task of expressing every algorithm in the machine's language (binary digits). The first step toward removing these complexities from the programming process was the creation of assembly languages (also called second-generation languages), which replaced numeric codes with mnemonics. The evolution continued with third-generation languages (e.g. COBOL, C), which used statements closer to human language, translated into machine language by compilers. With the development of third-generation languages, the goal of machine independence (discussed previously in this document) was largely achieved: since the statements did not refer to the attributes of any particular machine, they could be compiled as easily for one machine as for another. The evolution continued with software packages that allow users to customize computer software to their applications without needing expertise in hardware, operating systems, or programming languages. Some of these packages are so complex, however, that they require the participation of business specialists with advanced knowledge of the packages to implement business functions. In chapter 3, we will see how open platforms like CORBA and Java can be used to achieve maximum independence with third-generation languages, without the drawbacks of software packages. Still in its infancy is the use of declarative programming (sometimes referred to as fifth-generation languages or logic programming) for expert systems and artificial intelligence applications.

· The informational commodities: Hardware, Software, Applications

In the 1960s, computer resources were so expensive that they were shared among different companies, which bought slots of "CPU time". This concept, known as "Time Sharing", started the vision of hardware as a commodity.
The company does not need to buy a computer, install it, or have a special team to maintain it. Computer systems are simply used, and the fees are either fixed (based on the resources available) or based on actual usage of the machines. Today, this concept has evolved into the use of applications as commodities. Given the good performance and relatively low price of network communications, a system can run completely outside the company. Moreover, with the advanced level of hardware and system independence, the only compatibility to be considered is at the application level. This is the era of ISPs (Internet Service Providers), which take care of Internet access, e-mail and part of the network infrastructure, and of ASPs (Application Service Providers), which supply access to applications, freeing the company from most of the concerns related to information and communication technologies (ICT). Companies can run complete applications hosted by ASPs and available via the Web. The network infrastructure can be rented from a third party, which is also responsible for its maintenance. Network computers can be leased from a specialized company, which may offer technical support. In this scenario, computing power is a utility almost on the same level as electricity and water.
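The two fee models mentioned above, a fixed fee for reserved resources and a charge based on usage, can be compared with a few lines of arithmetic. In this Python sketch the `Tariff` class and its values are purely hypothetical; the point is the break-even volume (fixed fee divided by unit price) above which the flat fee becomes the cheaper option.

```python
from dataclasses import dataclass


@dataclass
class Tariff:
    fixed_monthly_fee: float   # flat fee for reserved resources (hypothetical value)
    price_per_cpu_hour: float  # usage-based price (hypothetical value)

    def usage_cost(self, cpu_hours: float) -> float:
        """Monthly cost if the customer pays only for what is used."""
        return self.price_per_cpu_hour * cpu_hours

    def break_even_hours(self) -> float:
        """Monthly usage at which both fee models cost the same."""
        return self.fixed_monthly_fee / self.price_per_cpu_hour

    def cheaper_model(self, cpu_hours: float) -> str:
        """Name the cheaper model for a given usage (a tie counts as 'fixed')."""
        return "usage-based" if self.usage_cost(cpu_hours) < self.fixed_monthly_fee else "fixed"


if __name__ == "__main__":
    tariff = Tariff(fixed_monthly_fee=1000.0, price_per_cpu_hour=2.5)
    print(tariff.break_even_hours())  # 400.0
    print(tariff.cheaper_model(300))  # usage-based
    print(tariff.cheaper_model(500))  # fixed
```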

The different hardware platforms will probably continue to broaden their initial scope, and may begin to merge. The dividing lines between them will eventually disappear, with each computer able to run several different operating systems in parallel. Open platforms are the key to making this happen, as proprietary hardware becomes increasingly compliant with them, assimilating open architecture definitions. The end of the Windows monopoly will mark the next decade. However, nobody needs another dominant operating system. The existing software platforms need to co-exist, to give companies and users a choice, with competition helping to increase quality. The choice must be based on real business and personal needs; marketing will continue to blur the technical advantages, and, to reduce this negative effect, information must be simplified and well targeted to the public.

Most Internet tools have been developed through the open-source process; Apache, the open source web server, still dominates the market. What are the advantages of open source technology over proprietary products for a worldwide network? One answer is communication. The Internet is based on open standards, which have always succeeded in connecting different hardware and software platforms. Communication was always possible and often perfect. Compatibility problems started to appear with proprietary extensions to the standards (like the HTML extensions for Netscape and Internet Explorer). Another answer is probably "too" clear: the openness of the source code. Early web sites, built with technologies like HTML and JavaScript, were easily copied, altered and republished in another part of the world. This made the creation of personal web sites easy and allowed quick "learning by example". The adoption of open technologies like CGI scripts and PHP, which "hide" the source from the page, has been helped by the hacker community, which created sites with many examples of source code that can be freely used without royalties. Online communities maintained by the companies behind proprietary solutions can help to reduce this gap.
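How server-side scripts "hide" their source can be shown with a minimal CGI-style sketch, written in Python rather than PHP. Following the CGI convention, the web server passes request data in environment variables such as `QUERY_STRING`, runs the script, and sends the browser only what the script prints; the `cgi_response` helper and its `name` parameter are hypothetical. For a hypothetical request carrying `name=Ada`, the browser receives only the finished HTML greeting, never the program that produced it.

```python
import html
import os
import sys
from urllib.parse import parse_qs


def cgi_response(environ: dict) -> str:
    """Return the text a CGI script writes to standard output.

    The web server runs this program and relays only its output, so a visitor
    viewing the page source sees the generated HTML, not this code.
    """
    query = parse_qs(environ.get("QUERY_STRING", ""))
    name = query.get("name", ["visitor"])[0]
    body = f"<html><body><p>Hello, {html.escape(name)}!</p></body></html>\n"
    # A CGI response is a header block, an empty line, then the document body.
    return "Content-Type: text/html\n\n" + body


if __name__ == "__main__":
    # The server passes request data to CGI scripts in environment variables.
    sys.stdout.write(cgi_response(dict(os.environ)))
```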