3. Open Internet Development

Most well-designed Internet applications are developed using object-oriented methodologies. These cope better with the modular structure of HTML and XML, and they make it easier to reuse programming modules or even share them with other companies. Applications written in object-oriented languages are often designed using a formal methodology and are closely tied to modelling languages.

In this chapter, we will analyse open methodologies for application design. These can be extremely helpful in ensuring the scalability, security and robust execution of Web applications under stressful conditions. We will then look at three different platforms used to develop Web applications and discuss the importance of open standards for building Web services.

We cannot finish without analysing the current hype around agile development and extreme programming. To conclude, we will review some elements for comparing open and proprietary platforms.

3.1. Design

Modelling is the design of software applications before coding. It is an essential part of large software projects and is helpful in medium and small ones. A model lets those responsible for a project's success confirm several things before implementation in code makes changes difficult and expensive:
- business functionality is complete and correct;
- end-user needs are met;
- the program design supports requirements for scalability, robustness, security, extensibility and other characteristics.

3.1.1. MDA

The Object Management Group (OMG) Model Driven Architecture (MDA) provides an open, vendor-neutral approach to the challenge of business and technology change. MDA is based firmly on other open standards and aims to separate business or application logic from the underlying platform technology. Platform-independent models allow intellectual property to move away from technology-specific code, which helps to foster application interoperability.

· Specification

In September 2001, OMG members completed the series of votes that established MDA as the base architecture for the organization's standards. Every MDA standard or application is based, normatively, on a Platform-Independent Model (PIM). The PIM represents the application's business functionality and behaviour very precisely but does not include technical aspects. Starting from the PIM, MDA-enabled development tools follow OMG-standardized mappings to produce one or more Platform-Specific Models (PSMs), one for each target platform that the developer chooses.

The PSM contains the same information as an implementation, but in the form of a UML model instead of running code. In the next step, the tool generates the running code from the PSM, along with other necessary files. After giving the developer an opportunity to hand-tune the generated code, the tool produces a deployable final application.
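As an illustration only, the PIM → PSM → code chain can be sketched in a few lines of Java. This is not an MDA tool and uses no OMG API. `PimClass`, `PlatformMapping`, `CorbaIdlMapping` and `JavaMapping` are hypothetical names, and the type tables are assumptions. A platform-independent description of a business entity is passed unchanged to two mappings, and each mapping emits an artifact for its own target platform.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class MdaDemo {
    public static void main(String[] args) {
        // The PIM: business facts only, no platform details.
        Map<String, String> attrs = new LinkedHashMap<>();
        attrs.put("name", "String");
        attrs.put("creditLimit", "Integer");
        PimClass customer = new PimClass("Customer", attrs);

        // One PIM, one generated artifact per chosen target platform.
        for (PlatformMapping m : List.of(new CorbaIdlMapping(), new JavaMapping())) {
            System.out.println(m.generate(customer));
        }
    }
}

// Hypothetical PIM element: an entity name plus attribute names and abstract types.
record PimClass(String name, Map<String, String> attributes) {}

// Hypothetical mapping from a PIM element to one platform-specific artifact.
interface PlatformMapping {
    String generate(PimClass pim);
}

// Illustrative mapping to OMG IDL text (the CORBA target); the type table is assumed.
class CorbaIdlMapping implements PlatformMapping {
    private static final Map<String, String> TYPES =
        Map.of("String", "string", "Integer", "long", "Boolean", "boolean");

    @Override
    public String generate(PimClass pim) {
        StringBuilder idl = new StringBuilder("interface " + pim.name() + " {\n");
        for (Map.Entry<String, String> a : pim.attributes().entrySet()) {
            idl.append("  attribute ").append(TYPES.getOrDefault(a.getValue(), "any"))
               .append(' ').append(a.getKey()).append(";\n");
        }
        return idl.append("};\n").toString();
    }
}

// Illustrative mapping to a Java interface with getter methods (the Java target).
class JavaMapping implements PlatformMapping {
    private static final Map<String, String> TYPES =
        Map.of("String", "String", "Integer", "int", "Boolean", "boolean");

    @Override
    public String generate(PimClass pim) {
        StringBuilder src = new StringBuilder("public interface " + pim.name() + " {\n");
        for (Map.Entry<String, String> a : pim.attributes().entrySet()) {
            // Capitalize the attribute name to form a getter, e.g. creditLimit -> getCreditLimit.
            String prop = Character.toUpperCase(a.getKey().charAt(0)) + a.getKey().substring(1);
            src.append("  ").append(TYPES.getOrDefault(a.getValue(), "Object"))
               .append(" get").append(prop).append("();\n");
        }
        return src.append("}\n").toString();
    }
}
```

Supporting a new platform only means writing one more `PlatformMapping`. The PIM itself is untouched, which is the separation MDA aims for.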

MDA applications are composable. If the PIMs of modules, services or other MDA applications are imported into the development tool, the tool can generate calls using whatever interfaces and protocols are required, even if these run cross-platform.

MDA applications are also "future-proof". When new infrastructure technologies come on the market, OMG members will develop and standardize a mapping to them, and vendors will upgrade their MDA-enabled tools to include it. With these mappings, tools can generate cross-platform invocations to the new platform. They can even port existing MDA applications to it automatically, using the existing PIMs.

Although MDA can target every platform and will map to all those with significant market buy-in, CORBA plays a key role as a target platform because it is independent of programming language, operating system and vendor. The mapping from a PIM to CORBA has already been adopted as an OMG standard.

· The core

Figure 16 - Model Driven Architecture

§ The Unified Modeling Language (UML)

As discussed, each MDA specification will have, as its normative base, two levels of models: a Platform-Independent Model (PIM) and one or more Platform-Specific Models (PSMs). These are defined in UML, which makes OMG's standard modelling language the foundation of MDA. UML is discussed separately in section 3.1.3.

§ The Meta-Object Facility (MOF)

By defining a common meta-model for all of OMG's modelling specifications, the MOF allows derived specifications to work together in a natural way. The MOF also defines a standard repository for meta-models and, therefore, for models (since a meta-model is just a special case of a model).
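The idea that a meta-model is just a special case of a model can be sketched in Java with a single element type used at both levels. This is not the MOF API: `Element`, `Repository` and the conformance rule are hypothetical simplifications. The sketch works as follows:
- a meta-class declares which features its instances may fill;
- a model element conforms if it uses only those features;
- one repository stores meta-model and model elements alike.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

class MofDemo {
    public static void main(String[] args) {
        Repository repo = new Repository();
        // Meta-model level: a meta-class "Class" declaring the features its instances may have.
        Element umlClass = repo.store(
            new Element("Class", null, Set.of("name", "isAbstract"), Map.of()));
        // Model level: "Customer" is an instance of the meta-class "Class".
        Element customer = repo.store(
            new Element("Customer", umlClass, Set.of(), Map.of("name", "Customer", "isAbstract", "false")));
        System.out.println(customer.conforms()); // true
        System.out.println(repo.size());         // 2: meta-model and model in one repository
    }
}

// Hypothetical uniform element: the same structure holds meta-classes and model elements.
record Element(String id, Element type, Set<String> features, Map<String, String> slots) {
    // An element conforms if every slot it fills is a feature declared by its type.
    boolean conforms() {
        return type == null || type.features().containsAll(slots.keySet());
    }
}

// Hypothetical repository that accepts only conforming elements, whatever their level.
class Repository {
    private final List<Element> elements = new ArrayList<>();

    Element store(Element e) {
        if (!e.conforms()) {
            throw new IllegalArgumentException("Non-conforming element: " + e.id());
        }
        elements.add(e);
        return e;
    }

    int size() { return elements.size(); }
}
```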

§ Common Warehouse Metamodel (CWM)

The CWM standardizes a complete, comprehensive metamodel that enables data mining across database boundaries within an enterprise, and goes well beyond that. It is like a UML profile, but in data space instead of application space, and it forms the MDA mapping to database schemas. The CWM is the product of a cooperative effort between OMG and the Meta-Data Coalition (MDC), and it does for data modelling what UML does for application modelling.

3.1.2. CORBA

CORBA (Common Object Request Broker Architecture) is OMG's open, vendor-independent architecture and infrastructure that computer applications use to work together over networks. It relies on the standard IIOP (Internet Inter-ORB Protocol). Through IIOP, a CORBA-based program from any vendor can interoperate with a CORBA-based program from the same or another vendor. Each side may run on almost any computer, operating system, programming language and network. CORBA is useful in many situations because it integrates desktops and servers from so many vendors so easily.

· Technical overview

In CORBA, client and object may be written in different programming languages. CORBA applications are composed of objects. For each object type, an interface is defined in OMG IDL (Interface Definition Language). The interface is the syntax part of the contract that the server object offers to the clients that invoke it. Any client that wants to invoke an operation on the object must use this IDL interface to specify the operation it wants to perform and to marshal the arguments that it sends. When the invocation reaches the target object, the same interface definition is used there to unmarshal the arguments and perform the requested operation.

This separation of interface from implementation is the essence of CORBA. The interface to each object is defined very strictly. In contrast, the implementation of an object (its running code and its data) is hidden from the rest of the system, that is, encapsulated. Clients access objects only through their advertised interface. They can invoke only the operations that the object exposes through its IDL interface, and only with the parameters (input and output) that are included in the invocation.

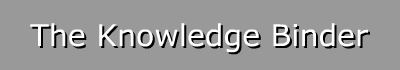

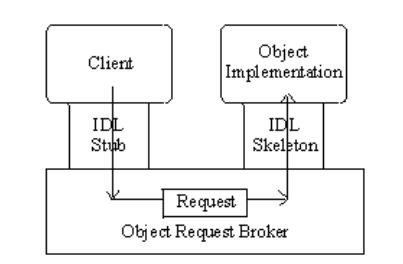

Figure 17 - CORBA request from client to object

The figure above shows how everything fits together, at least within a single process. The IDL is compiled into client stubs and object skeletons. An invocation passes through the stub on the client side, continues through the ORB (Object Request Broker) and the skeleton on the implementation side, and reaches the object, where it is executed. Because IDL defines interfaces so strictly, the stub on the client side matches the skeleton on the server side perfectly. This holds even if the two are compiled into different programming languages, or even run on different ORBs from different vendors.
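The following Java sketch imitates the roles in the figure without a real ORB. The `org.omg.CORBA` packages were removed from the JDK in Java 11. All class names here are hypothetical. In real CORBA, an IDL compiler (for example the JDK's former `idlj`) would generate the stub and skeleton from the IDL. Here the Java interface `Calculator` plays the part of the IDL contract, and arguments are "marshalled" as strings purely for illustration.

```java
import java.util.HashMap;
import java.util.Map;

class CorbaSketch {
    public static void main(String[] args) {
        Orb orb = new Orb();
        // Server side: the implementation is registered behind its skeleton.
        orb.register("calc-1", new CalculatorSkeleton(new CalculatorImpl()));
        // Client side: code is written only against the interface, via the stub.
        Calculator calc = new CalculatorStub(orb, "calc-1");
        System.out.println(calc.add(2, 3)); // 5
    }
}

// Stands in for the IDL-defined interface (the contract).
interface Calculator {
    int add(int a, int b);
}

// The implementation, hidden from clients (encapsulated).
class CalculatorImpl implements Calculator {
    public int add(int a, int b) { return a + b; }
}

// A request as the ORB sees it: target key, operation name, marshalled arguments.
record Request(String objectKey, String operation, String[] args) {}

// Client stub: turns a typed call into a generic request.
class CalculatorStub implements Calculator {
    private final Orb orb;
    private final String objectKey;

    CalculatorStub(Orb orb, String objectKey) {
        this.orb = orb;
        this.objectKey = objectKey;
    }

    public int add(int a, int b) {
        // Marshal the arguments in the order defined by the interface.
        String reply = orb.invoke(new Request(objectKey, "add",
            new String[] { Integer.toString(a), Integer.toString(b) }));
        return Integer.parseInt(reply);
    }
}

// Skeleton: unmarshals the request and calls the implementation.
class CalculatorSkeleton {
    private final Calculator target;

    CalculatorSkeleton(Calculator target) { this.target = target; }

    String dispatch(Request r) {
        if (r.operation().equals("add")) {
            int a = Integer.parseInt(r.args()[0]);
            int b = Integer.parseInt(r.args()[1]);
            return Integer.toString(target.add(a, b));
        }
        throw new UnsupportedOperationException(r.operation());
    }
}

// Minimal in-process "ORB": routes each request to the registered skeleton.
class Orb {
    private final Map<String, CalculatorSkeleton> objects = new HashMap<>();

    void register(String key, CalculatorSkeleton skeleton) { objects.put(key, skeleton); }

    String invoke(Request r) {
        CalculatorSkeleton s = objects.get(r.objectKey());
        if (s == null) throw new IllegalStateException("No object " + r.objectKey());
        return s.dispatch(r);
    }
}
```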

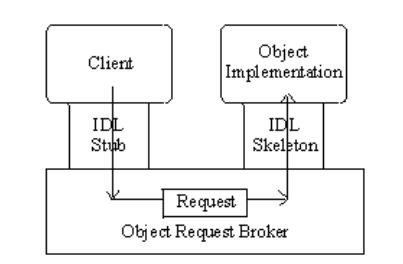

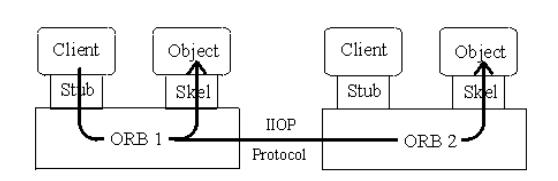

Figure 18 - CORBA remote invocation flow using ORB-to-ORB communication

The figure above diagrams a remote invocation. To invoke the remote object instance, the client first obtains its object reference. It then uses the same code as in the local invocation just described, substituting the object reference of the remote instance. When the ORB examines the object reference and discovers that the target object is remote, it routes the invocation over the network to the remote object's ORB. The ORB can tell from the object reference that the target object is remote, but the client cannot. This ensures location transparency, the CORBA principle that simplifies the design of distributed object computing applications.
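Location transparency can be illustrated with two ORBs in a hypothetical Java sketch.
- An `ObjectRef` names a host and an object key, and only `RoutingOrb` looks inside it.
- The ORB dispatches the call locally or forwards it to the other ORB through an in-memory `Network`. This `Network` is an assumption standing in for IIOP.
- The client helper `call` is identical for local and remote objects.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntUnaryOperator;

class LocationTransparencyDemo {
    public static void main(String[] args) {
        Network net = new Network();
        RoutingOrb orbA = new RoutingOrb("hostA", net);
        RoutingOrb orbB = new RoutingOrb("hostB", net);
        orbA.register("doubler", x -> x * 2);
        orbB.register("squarer", x -> x * x);

        // Same client code for both references; only the ORB decides where the object lives.
        System.out.println(call(orbA, new ObjectRef("hostA", "doubler"), 7)); // 14 (local)
        System.out.println(call(orbA, new ObjectRef("hostB", "squarer"), 7)); // 49 (remote)
    }

    // Client-side helper: knows nothing about object location.
    static int call(RoutingOrb orb, ObjectRef ref, int arg) {
        return orb.invoke(ref, arg);
    }
}

// Hypothetical object reference; its contents are opaque to the client.
record ObjectRef(String host, String key) {}

// In-memory stand-in for the network between ORBs.
class Network {
    private final Map<String, RoutingOrb> orbs = new HashMap<>();
    void attach(RoutingOrb orb) { orbs.put(orb.host(), orb); }
    RoutingOrb lookup(String host) { return orbs.get(host); }
}

class RoutingOrb {
    private final String host;
    private final Network net;
    private final Map<String, IntUnaryOperator> servants = new HashMap<>();

    RoutingOrb(String host, Network net) {
        this.host = host;
        this.net = net;
        net.attach(this);
    }

    String host() { return host; }

    void register(String key, IntUnaryOperator servant) { servants.put(key, servant); }

    int invoke(ObjectRef ref, int arg) {
        if (ref.host().equals(host)) {
            return servants.get(ref.key()).applyAsInt(arg); // local dispatch
        }
        // Remote target: a real ORB would marshal the request and send it over IIOP;
        // here it is handed directly to the other ORB object.
        return net.lookup(ref.host()).invoke(ref, arg);
    }
}
```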

3.1.3. UML

The Unified Modelling Language (UML) is an open language for specifying, visualizing, constructing and documenting the artefacts of software systems. It is also used for business modelling and for other non-software systems. It represents a collection of the best engineering practices that have proven successful in the modelling of large and complex systems, and it addresses the needs of user and scientific communities.

· Medieval ages

Identifiable object-oriented modelling languages began to appear between the mid-1970s and the late 1980s, as various methodologists experimented with different approaches to object-oriented analysis and design. Several other techniques influenced these languages, including Entity-Relationship modelling and the Specification and Description Language. These early methods could not satisfy most design requirements, so they started to incorporate each other's techniques. A few clearly prominent methods emerged, including OOSE, OMT-2 and Booch'93. Each was a complete method and was recognized as having certain strengths:
- OOSE was a use-case-oriented approach that provided excellent support for business engineering and requirements analysis.
- OMT-2 was especially expressive for analysis and data-intensive information systems.
- Booch'93 was particularly expressive during the design and construction phases of projects and was popular for engineering-intensive applications.

· All for one

UML started out as a collaboration among three outstanding methodologists: Grady Booch (Booch'93), Ivar Jacobson (OOSE) and James Rumbaugh (OMT-2). At first, Booch and Rumbaugh sought to unify their methods with the Unified Method v0.8 in 1995. A year later, Jacobson joined them to collaborate on the slightly less ambitious task of unifying their modelling languages, which produced UML 0.9.

The user community quickly recognized the advantages of a common modelling language that could be used to visualize, specify, construct and document the artefacts of a software system. Users enthusiastically applied early drafts of the language to diverse domains, ranging from finance and health to telecommunications and aerospace. Driven by strong user demand, modelling tool vendors soon included UML support in their products.

· One for all

While UML was becoming a de facto industry standard, an international team of modelling experts took on the responsibility of making it a formal standard as well. The "UML Partners" represented a diverse mix of vendors and system integrators. In 1996 they began working with the three methodologists to propose UML as the standard modelling language for the OMG. The Partners organized themselves into a software development team that followed a disciplined process. Because the process was based on an iterative and incremental development life cycle, the team produced frequent "builds" and draft releases of the specification.

The Partners tendered their initial UML proposal (UML 1.0) to the OMG in January 1997. After nine months of intensive improvements to the specification, they submitted their final proposal (UML 1.1) in September 1997. The OMG officially adopted it as its object-modelling standard in November 1997. UML 1.5 is the current specification adopted by the OMG, and in mid-2001 OMG members started work on a major upgrade to UML 2.0. Four separate RFPs (Requests for Proposals) keep the effort organized: UML Infrastructure, UML Superstructure, Object Constraint Language and UML Diagram Interchange.

The OMG defines object management as software development that models the real world through the representation of "objects". These objects encapsulate the attributes, relationships and methods of identifiable software program components. A key benefit of an object-oriented system is that its functionality can grow by extending existing components and adding new objects to the system. Object management results in faster application development, easier maintenance, enormous scalability and reusable software.

OMG members are preparing to standardize a Human-Usable Textual Notation (HUTN) for UML models, or at least for those that fit into the Enterprise Distributed Object Computing (EDOC) UML Profile.

· (Almost) Ten Years Later

UML defines twelve types of diagrams, divided into three categories:

§ Structural Diagrams represent static application structure and include the Class Diagram, Object Diagram, Component Diagram and Deployment Diagram.

§ Behavior Diagrams represent different aspects of the application's dynamic behaviour. They include the Use Case Diagram (used by some methodologies during requirements gathering), the Sequence Diagram, Activity Diagram, Collaboration Diagram and Statechart Diagram.

§ Model Management Diagrams represent ways in which the application's modules can be organized and managed, and include Packages, Subsystems and Models.

The Advanced UML Features add to the expressiveness of UML:

§ Object Constraint Language (OCL) has been part of UML since the beginning. It expresses conditions on an invocation in a formally defined way: invariants, preconditions, postconditions, whether an object reference is allowed to be null, and some other restrictions. MDA relies on OCL to add a necessary level of detail to PIMs and PSMs.

§ Action Semantics UML Extensions are a recent addition and express actions as UML objects. An Action object may transform a set of inputs into a set of outputs, may change the state of the system, or may do both. Actions may be chained, with one Action's outputs becoming another Action's inputs. Actions are assumed to occur independently, so there is infinite concurrency in the system unless you chain them or specify ordering in another way. This concurrency model is a natural fit for the distributed execution environment of Internet applications.
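The sketch below shows what an OCL constraint adds. It states an invariant, a precondition and a postcondition in OCL (in the comments) for a hypothetical `Account` class, then enforces them by hand in Java. The class and the constraints are invented for the example. Some tools can generate such checks from OCL, but the Java here is written manually.

```java
// OCL constraints for a hypothetical Account class:
//   context Account inv: balance >= 0
//   context Account::withdraw(amount : Integer)
//     pre:  amount > 0 and amount <= balance
//     post: balance = balance@pre - amount
class OclDemo {
    public static void main(String[] args) {
        Account acc = new Account(100);
        acc.withdraw(30);
        System.out.println(acc.balance()); // 70
        try {
            acc.withdraw(500); // violates the precondition
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}

class Account {
    private int balance;

    Account(int initial) {
        balance = initial;
        checkInvariant();
    }

    int balance() { return balance; }

    void withdraw(int amount) {
        // pre: amount > 0 and amount <= balance
        if (amount <= 0 || amount > balance) {
            throw new IllegalArgumentException("precondition failed for amount " + amount);
        }
        int balanceAtPre = balance; // plays the role of balance@pre
        balance -= amount;
        // post: balance = balance@pre - amount
        if (balance != balanceAtPre - amount) {
            throw new IllegalStateException("postcondition failed");
        }
        checkInvariant();
    }

    // inv: balance >= 0
    private void checkInvariant() {
        if (balance < 0) {
            throw new IllegalStateException("invariant failed: balance = " + balance);
        }
    }
}
```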
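Action Semantics is a modelling notation rather than a programming API. Still, two of its ideas can be approximated in Java: one action's outputs feeding another's inputs, and unordered actions being free to run concurrently. `Action` and `then` are hypothetical names. `CompletableFuture` is standard Java and is used here only to model the absence of an ordering constraint.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;

class ActionDemo {
    public static void main(String[] args) {
        // Hypothetical actions: each transforms an input into an output.
        Action<List<Integer>, Integer> sum = in -> in.stream().mapToInt(Integer::intValue).sum();
        Action<Integer, String> format = total -> "total=" + total;

        // Chained: the output of 'sum' is the input of 'format', so they run in order.
        Action<List<Integer>, String> report = sum.then(format);

        // Unchained invocations have no ordering constraint and may run concurrently.
        CompletableFuture<String> r1 = CompletableFuture.supplyAsync(() -> report.run(List.of(1, 2, 3)));
        CompletableFuture<String> r2 = CompletableFuture.supplyAsync(() -> report.run(List.of(10, 20)));
        System.out.println(r1.join() + " " + r2.join()); // total=6 total=30
    }
}

// An Action as an object: takes an input and produces an output.
@FunctionalInterface
interface Action<I, O> {
    O run(I input);

    // Chaining: feed this action's output into the next action's input.
    default <R> Action<I, R> then(Action<O, R> next) {
        return input -> next.run(run(input));
    }
}
```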

UML Profiles tailor the language to particular areas of computing or to particular platforms. In MDA, both PIMs and PSMs will be defined using UML profiles, and OMG will eventually define a suite of profiles spanning the entire scope of MDA. Examples of three supporting UML Profiles and one specialized profile are:

§ The UML Profile for CORBA defines the mapping from a PIM to a CORBA-specific PSM.

§ The UML Profile for EDOC is used to build PIMs of enterprise applications. It defines representations for entities, events, processes, relationships, patterns and an Enterprise Collaboration Architecture.

§ The UML Profile for EAI defines a profile for loosely coupled systems, that is, systems that communicate using asynchronous or messaging-based methods. These modes are typically used in Enterprise Application Integration, but are used elsewhere as well.

§ The UML Profile for Schedulability, Performance and Time supports the modelling of predictable systems precisely enough to enable quantitative analysis.

· Opening the iron mask

UML is clearly an open methodology, with three main characteristics:
- independence from the technical infrastructure;
- independence from the development methodology;
- an open definition, managed by a recognised and independent organisation (OMG).

First, UML can be used to model any type of application, running on different combinations of hardware and software platforms, programming languages and network protocols. UML is built upon the MOF metamodel, which defines class and operation as fundamental concepts. This makes it a natural fit for object-oriented languages and environments such as C++, C#, Java and Python. Some UML tools analyse existing source code and reverse-engineer it into a series of UML diagrams. Other tools execute UML models interpretively to validate the design, and some can even generate programming-language code from UML.

Gathering and analysing an application's requirements, and incorporating them into a program design, is a complex process. The industry currently supports many methodologies that define formal procedures for it. The second characteristic of UML is that it is methodology-independent: whatever methodology is used to perform the analysis and design, UML can express the results. Using XMI (XML Metadata Interchange, another OMG standard), a UML model can be transferred from one tool into a repository. It can also be passed to another tool for refinement or for the next step in the chosen development process.

Finally, the UML definitions are openly discussed by an independent organisation (OMG) and freely published on the Web. Any software vendor can implement them, and several open source projects already do.

The OMG is structured into three major bodies: the Platform Technology Committee (PTC), the Domain Technology Committee (DTC) and the Architecture Board. The Architecture Board oversees the consistency and technical integrity of the work produced in the PTC and DTC. The Technology Committees and the Architecture Board contain all the Task Forces, Special Interest Groups (SIGs) and Working Groups that drive the OMG's technology adoption process.

Besides general review, commentary and open discussion, there are three major ways of influencing the OMG process:
- voting on work items or adoptions in the Task Forces, whose results are ultimately reviewed and voted on at the Technology Committee level;
- voting on work items or adoptions at one or both Technology Committee levels;
- submitting technology for adoption at one or both Technology Committee levels.

Membership fees are based on these levels of influence.

3.2. Web Platforms

3.2.1. Java

The Java platform is based on the power of networks. Since its initial commercial release in 1995, Java technology has grown in popularity and usage because of its true portability. Java is a general-purpose, concurrent, object-oriented programming language. Its syntax is similar to that of C and C++, but it omits many of the features that make C and C++ complex, confusing and unsafe. Java was initially developed to address the problems of building software for networked consumer devices. It was designed to support multiple host architectures and to allow the secure delivery of software components. To meet these requirements, compiled Java code had to survive transport across networks, operate on any client and assure the client that it was safe to run.

· Java platform

A platform is the hardware or software environment in which a program runs. We have already mentioned some of the most popular platforms in chapter 2. Most platforms can be described as a combination of operating system and hardware. Java, however, is a software-only platform that runs on top of other, hardware-based platforms.

The Java platform was designed to run programs securely on networks, and it allows the same Java application to run on many different kinds of computers. Some examples:
- PersonalJava applications power home appliances;
- Java Card applications run on smart cards, smart rings and other devices with limited memory;
- Java TV applications run in television set-top boxes.

This interoperability is guaranteed by a component of the platform called the Java virtual machine (JVM). The JVM is a kind of interpreter that turns general Java platform instructions into tailored commands that make the devices do their work.

Figure 19 - Java platform components

The other component, the Java API, is a large collection of ready-made software components that provide many useful capabilities, such as graphical user interface (GUI) widgets. The Java API is grouped into libraries of related classes and interfaces, known as packages.

The strength of compiled languages is execution speed. The strength of interpreted languages is the flexibility to run the same program on different platforms. The Java programming language is unusual in that a program is both compiled and interpreted:
- The compiler first translates a program into an intermediate language called Java bytecode. Bytecode is platform-independent code that the interpreter on the Java platform executes.
- The interpreter parses and runs each Java bytecode instruction on the computer.

Compilation happens just once, while interpretation occurs each time the program is executed.

Figure 20 - Java compiler and interpreter

· Java editions

Three

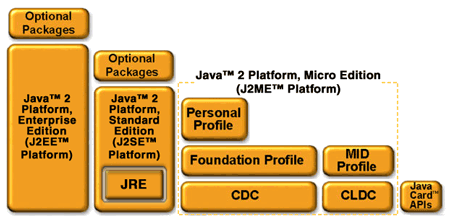

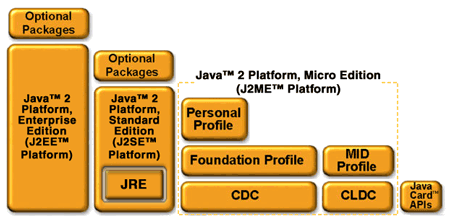

editions group the different technologies according to the hardware

platform: J2ME (tiny commodities, like smartcards), J2SE (development

of applets and applications) and J2EE (enterprise server-side applications).

Figure 21 - The Java platform and editions

Java is owned by Sun Microsystems, which has appropriate mechanisms in place to meet the evolving needs of the industry and of Internet application developers.

· Java buzzwords

Within the scope of this document, let us analyse the two main Java characteristics in detail and briefly describe the others:

§ Architecture neutral

Architecture independence is fundamental if the same program is to run virtually anywhere without special adaptation. For Java, it is guaranteed by the Java platform components described above. Companies such as Microsoft have seen this as a threat. Microsoft tried to block the compatibility of the JVM with recent Windows versions, as a strategy to force Windows customers onto the Microsoft platform, .NET. Fortunately, the courts prevented this.

§ Portable

Portability is closely related to architecture independence. It concerns the characteristics of the language that must remain unchanged whatever the platform, such as data types (e.g. character, byte, integer). This may seem simple at first sight, but it is not true of most languages. In those languages, porting a program from one platform to another includes adapting its data types. Java did not make this mistake: its data types have specific, defined lengths regardless of the system.

The Java virtual machine is based primarily on the POSIX interface specification, an industry-standard definition of a portable system interface. Implementing the Java virtual machine on new architectures is a relatively straightforward task.
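The fixed sizes can be checked from Java itself. The constants used below (`Byte.SIZE`, `Integer.SIZE` and so on) are part of the standard library. The sizes they report are fixed by the Java Language Specification rather than by the host machine. By contrast, C only guarantees that `int` is at least 16 bits wide. The class name and the `incrementWrapping` helper are illustrative.

```java
class PortableTypesDemo {
    public static void main(String[] args) {
        // These sizes are the same on every Java platform.
        System.out.println("byte:  " + Byte.SIZE + " bits");      // 8
        System.out.println("short: " + Short.SIZE + " bits");     // 16
        System.out.println("char:  " + Character.SIZE + " bits"); // 16 (a UTF-16 code unit)
        System.out.println("int:   " + Integer.SIZE + " bits");   // 32
        System.out.println("long:  " + Long.SIZE + " bits");      // 64

        // Because int is always 32-bit two's complement, overflow behaves identically everywhere.
        System.out.println(incrementWrapping(Integer.MAX_VALUE)); // -2147483648
    }

    // Adds one using int arithmetic, which wraps around silently on overflow.
    static int incrementWrapping(int x) {
        return x + 1;
    }
}
```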

§ Simple - Reduces software development cost and time to delivery

§ Object oriented - To function within increasingly complex, network-based environments, programming systems must adopt object-oriented concepts

§ High performance - Obtained from the compiled bytecode

§ Interpreted - The bytecode is transformed into executable code during the execution of the program

§ Multithreaded - Multiple tasks can be executed in parallel, improving overall system performance

§ Robust - Provides a solid, reliable environment in which to develop software

§ Dynamic - Classes are linked only as needed. New code modules can be linked in on demand from a variety of sources, even from sources across a network.
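Two of the characteristics listed above, multithreading and dynamic linking, are easy to show with standard Java APIs. The sketch below sums a range of numbers on a fixed thread pool and loads a class by name at run time. `BuzzwordDemo` and its method names are illustrative, and the thread count is an arbitrary assumption. For a task this small, the threads show the mechanism rather than a real speed-up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class BuzzwordDemo {
    public static void main(String[] args) throws Exception {
        System.out.println(parallelSum(1_000_000, 4));            // 500000500000
        System.out.println(loadDynamically("java.util.ArrayList")); // []
    }

    // Multithreaded: split 1..n into chunks and sum each chunk on a pool thread.
    static long parallelSum(int n, int threads) throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int chunk = (n + threads - 1) / threads;
            for (int start = 1; start <= n; start += chunk) {
                final int from = start;
                final int to = Math.min(n, start + chunk - 1);
                parts.add(pool.submit(() -> {
                    long s = 0;
                    for (int i = from; i <= to; i++) s += i;
                    return s;
                }));
            }
            long total = 0;
            for (Future<Long> f : parts) total += f.get(); // wait for each partial sum
            return total;
        } finally {
            pool.shutdown();
        }
    }

    // Dynamic: the class is located and linked at run time, only when requested by name.
    static Object loadDynamically(String className) throws ReflectiveOperationException {
        Class<?> cls = Class.forName(className);
        return cls.getDeclaredConstructor().newInstance();
    }
}
```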

· Applets

Sun's HotJava browser showcases Java's interesting properties by making it possible to embed Java programs inside HTML pages. These programs, known as applets, are transparently downloaded into the HotJava browser along with the HTML pages in which they appear. Before the browser accepts them, applets are carefully checked to make sure they are safe. Like HTML pages, compiled Java programs are network- and platform-independent. Applets behave the same way regardless of where they come from or what kind of machine they are loaded into and run on.

With Java as the extension language, a Web browser is no longer limited to a fixed set of capabilities. Programmers can write an applet once and it will run on any machine, anywhere. Visitors to Java-powered Web pages can use the content found there, confident that it will not damage their machine.

· Open Belgian Cathedrals

According to Tony Mary, openness and flexibility are Java's main advantages. This openness could be widened further if a group of companies created a single shared media technology. He compares this to cathedrals. Medieval castles were strongly protected and closed, and are almost destroyed today, whereas cathedrals always remained open and survived.

According to Edy Van Asch, Open Source is the main Java trend. Java has always been closely related to Open Source, but this relationship has become stronger since 2001. Examples include:
- Eclipse, an Open Source Java development environment;
- JUnit, an Open Source testing framework;
- JBoss, an Open Source application server;
- Jini.

Jini network technology is an open architecture that enables developers to create network-centric services, implemented in hardware or software, that are highly adaptive to change. Jini technology can be used to build adaptive networks that are scalable, evolvable and flexible, as dynamic computing environments typically require. Jini offers an open development environment for creative collaboration through the Jini Community, and it is available free of charge under an evergreen license. It extends the Java programming model to the network by moving data and executables via Java objects. It also enables network self-healing and self-configuration.
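In Jini, self-healing rests largely on leases: a registration lasts only as long as it is renewed. The Java sketch below is not the Jini API, which lives in the `net.jini` packages. `LookupService`, its methods and the endpoint string are hypothetical. Time is passed in explicitly so that the behaviour is deterministic.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class LeaseDemo {
    public static void main(String[] args) {
        LookupService lookup = new LookupService();
        lookup.register("printer", "ipp://printer-1", 0, 1_000); // lease valid until t = 1000 ms
        System.out.println(lookup.find("printer", 500));   // Optional[ipp://printer-1]
        // The provider crashes and never renews, so the entry expires by itself.
        System.out.println(lookup.find("printer", 1_500)); // Optional.empty
    }
}

// Hypothetical lookup service in the spirit of Jini: registrations are leased, so
// services that disappear without deregistering are dropped automatically.
class LookupService {
    private record Entry(String endpoint, long expiresAt) {}

    private final Map<String, Entry> entries = new HashMap<>();

    // Register (or renew) a service for durationMs, starting at time 'now'.
    void register(String type, String endpoint, long now, long durationMs) {
        entries.put(type, new Entry(endpoint, now + durationMs));
    }

    // Find a live service; expired leases are purged first (self-healing).
    Optional<String> find(String type, long now) {
        entries.values().removeIf(e -> e.expiresAt() <= now);
        return Optional.ofNullable(entries.get(type)).map(Entry::endpoint);
    }
}
```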

3.2.2. .NET

.NET (pronounced "dot-net") is both a Microsoft business strategy and Microsoft's collection of proprietary programming support for Web services. Its goal is to give individual and business users a seamlessly interoperable, Web-enabled interface for applications and computing devices, and to make computing activities increasingly Web browser-oriented. The .NET platform includes:
- servers (running Microsoft Windows);
- building-block services (such as Web-based data storage);
- device software;
- Passport (Microsoft's identity verification service).

Functionally, the .NET architecture can be compared to the Java platform. Within the scope of our study, however, they are completely different. Java aims to give companies and users the freedom to choose their hardware and software platforms. .NET, by contrast, is tightly tied to the Microsoft Windows operating systems (the only software platform supported) and favours the use of complementary Microsoft products such as Internet Explorer and the Office suite. Omnipresence is the goal, and the weapon is the relative complexity of the Java platform.

The full release of .NET is expected to take several years to complete. In the meantime, products implementing the .NET strategy will come to market separately, such as a personal security service and new versions of Windows and Office.

· Dot objectives

According to Bill Gates, Microsoft expects .NET to have as significant an effect on the computing world as the introduction of Windows. One concern being voiced is that .NET's services will be accessible through any browser but are likely to function more fully on products designed to work with .NET code. The main objectives of .NET are:

§ The ability to make the entire range of computing devices work together and to have user information automatically updated and synchronized on all of them

§ Increased interactive capability for Web sites, enabled by greater use of XML rather than HTML

§ A premium online subscription service offering customized access to, and delivery of, products and services from a central starting point for managing applications, such as e-mail, or software, such as Office .NET

§ Centralized data storage, which will increase efficiency and ease of access to information, as well as synchronization of information among users and devices

§ The ability to integrate various communications media, such as e-mail, faxes and telephones

§ For developers, the ability to create reusable modules, which should increase productivity and reduce the number of programming errors

· Dot components

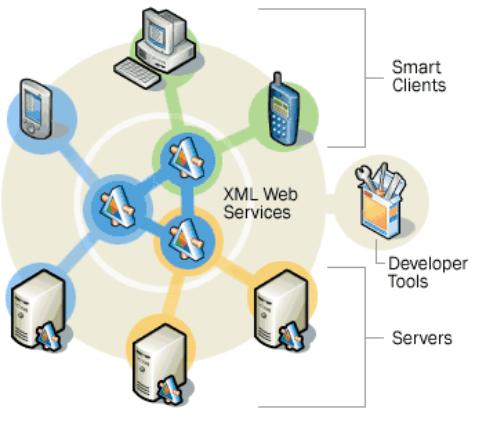

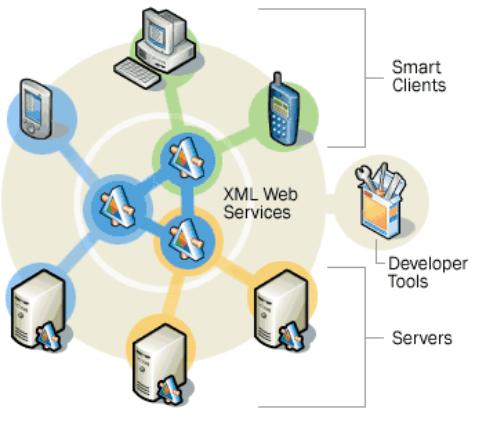

Figure 22 - The Components of Microsoft .NET-Connected Software

§ Smart clients: "Smart" is Microsoft's term for client computers running one of its proprietary operating systems: Microsoft Windows XP, Windows XP Embedded and Windows CE .NET. All other client platforms are excluded from the .NET architecture.

§ XML Web services let applications share data and invoke capabilities from other applications. This works regardless of how those applications were built, what operating system or platform they run on, and what devices are used to access them. XML Web services remain independent of each other, but they can loosely link themselves into a collaborating group that performs a particular task.

§ Developer Tools - Microsoft Visual Studio .NET and the Microsoft .NET Framework aim to supply a complete solution for developers to build, deploy and run XML Web services. Visual Studio .NET offers high-level programming languages such as Visual Basic, Visual C++ and C#. The .NET Framework is the application execution environment; it includes the common language runtime, a set of class libraries for building XML Web services, and Microsoft ASP.NET.

§ Servers - Unlike portable Java applications, .NET is certified to take full advantage of the platform only on servers running Microsoft operating systems (Windows 2000 Server, Windows Server 2003 and the .NET Enterprise Servers).

· Dot integration

Microsoft considers XML "deceptively simple". It would certainly prefer a complex and proprietary solution for integrating the .NET platform (Microsoft operating systems and basic software) with other vendors' environments. XML is revolutionizing how applications talk to other applications, and more broadly how computers talk to other computers, by providing a universal data format that lets data be easily adapted or transformed. Microsoft therefore saw in XML an opportunity to connect its completely proprietary platform with other proprietary or open environments. For example, Microsoft Host Integration Server 2000 uses XML extensively to provide application, data and network integration between .NET platforms and host systems. These host systems are mainframe and AS/400 environments, which Microsoft considers "legacy" and which are estimated to contain 70 percent of all corporate data.

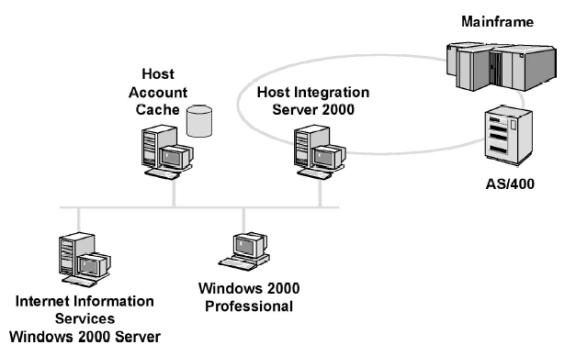

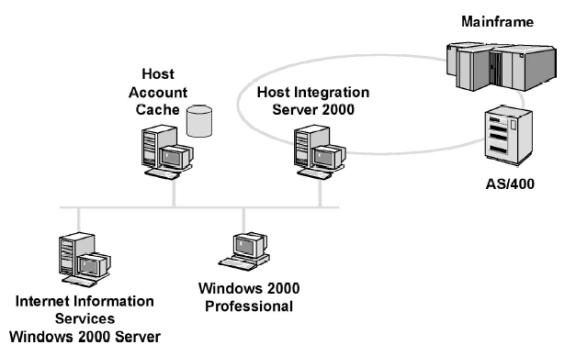

Figure 23 - Microsoft Host Integration Server
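The "universal data format" argument can be shown with Java's standard XML APIs (`javax.xml.parsers`, `javax.xml.transform`, `org.w3c.dom`). One side serializes a record as plain XML text. The other side parses it back without sharing any code or platform with the sender. The `customer` vocabulary is invented for the example and is not a format used by Host Integration Server.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.xml.sax.InputSource;

class XmlExchangeDemo {
    public static void main(String[] args) throws Exception {
        String xml = toXml("C042", "ACME Ltd", 1500);
        System.out.println(xml);
        System.out.println(readCustomerName(xml)); // ACME Ltd
    }

    // Sender: serialize a record as plain XML text, independent of platform or language.
    static String toXml(String id, String name, int creditLimit) throws Exception {
        DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
        Document doc = builder.newDocument();
        Element customer = doc.createElement("customer");
        customer.setAttribute("id", id);
        Element nameEl = doc.createElement("name");
        nameEl.setTextContent(name);
        Element limit = doc.createElement("creditLimit");
        limit.setTextContent(Integer.toString(creditLimit));
        customer.appendChild(nameEl);
        customer.appendChild(limit);
        doc.appendChild(customer);

        Transformer t = TransformerFactory.newInstance().newTransformer();
        t.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
        StringWriter out = new StringWriter();
        t.transform(new DOMSource(doc), new StreamResult(out)); // escapes special characters
        return out.toString();
    }

    // Receiver: any system with an XML parser can read the same text back.
    static String readCustomerName(String xml) throws Exception {
        DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
        Document doc = builder.parse(new InputSource(new StringReader(xml)));
        return doc.getElementsByTagName("name").item(0).getTextContent();
    }
}
```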

3.2.3. Java.NET

The comparison between Java and .NET is not only technical; economic and cultural aspects are far more important. In this section, we will analyse some of the differences and explore possible alternatives.

· Co-existence

Because the .NET and Java platforms can be integrated easily through XML and Web services, many analysts expect both platforms to co-exist in most companies. Scott Dietzen foresees this happening within the next five years. One of his arguments is the current strength of .NET on the client side and of J2EE on the server side. He also predicts that Java will become easier to integrate into company culture and to implement before Microsoft .NET technology is mature enough to attract large customers in projects where reliability is critical. Nico Duerinck agrees that co-existence is possible, particularly if Web services are implemented, although only a small number of companies currently use both technologies.

· Portability

For new projects, Java may be the clear choice to ensure application portability. It allows an application to start small on a server running a Windows operating system and later move to other platforms, such as Linux or UNIX, to satisfy more advanced requirements like parallelism, high availability and reliability. An application developed under .NET will always require a Windows-based server to exploit all its capabilities.

Additionally, as Dietzen points out, the Java community comprises more than 100 vendors, who work to improve Java's features and develop complementary and competing solutions. The resulting innovation goes far beyond what a single company can afford.

· Symbiosis

According to David Chappell, Windows DNA (the seed of .NET) and Java appeared at the same time, in 1996. Since then, J2EE has been built on interesting concepts from Windows DNA, and the .NET Framework has incorporated Java-like features. This "mutual and cross-pollenization" fosters innovation in both directions and minimizes the "single source of creativity" problem raised by Dietzen.

· Infrastructure Costs

According to Duerinck, most companies will try to keep their existing infrastructure, and will tend to deploy .NET on Windows systems and Java on all other architectures. It is often a simple financial decision: if a Microsoft license already exists, it will probably be kept and exploited, and the cost of adding the .NET Framework to existing Windows systems is very low. Some Java application servers can be rather expensive; however, cheap and open source alternatives exist.

Training costs, and the simplification gained by reducing the diversity of hardware and operating systems, are also important points to consider.

· Complementary products

Independent companies often develop products that are compatible with both platforms, as is the case with Compuware's development products and the Real Software development architecture. Such products can also reduce the cost of porting an application from one platform to another, by softening the effects of the proprietary .NET philosophy.

3.2.4. The outsiders: LAMP

The term LAMP (Linux, Apache, MySQL, Perl / Python / PHP) refers to a set of Open Source software tools that allow for the development and deployment of web applications.

All of the components of LAMP can be downloaded free of charge. Support is provided by a network of developers gathered in online communities such as "OnLamp", who help each other get the most out of LAMP. Training is available from a wide array of providers, and many consulting firms offer advanced services to businesses that require sophisticated LAMP development.

I used LAMP to develop my web site. All dynamic pages were developed in PHP, and the databases in MySQL. The website is hosted by ALL2ALL on Linux and Apache servers, and the use of Open Source keeps hosting costs low. The only learning cost was a book about PHP and MySQL, complemented by a large amount of free online information, from FAQs to complete systematic training courses. The large number of online examples shortened the learning time; most sites built with LAMP make the source code used in their development available.
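The database-driven pages of such a site follow a simple pattern: query the database on each request and render the rows as HTML. The sketch below shows that pattern in Python rather than PHP. The standard-library SQLite module stands in for MySQL. The `articles` table, its contents and the link format are hypothetical.

```python
import sqlite3
from html import escape


def build_db() -> sqlite3.Connection:
    # In-memory SQLite stands in for MySQL; the table and rows are hypothetical.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")
    conn.executemany(
        "INSERT INTO articles (title) VALUES (?)",
        [("Open standards",), ("Tips & tricks",)],
    )
    conn.commit()
    return conn


def render_page(conn: sqlite3.Connection) -> str:
    # Query the database on every request, as a PHP page would query MySQL.
    rows = conn.execute("SELECT id, title FROM articles ORDER BY id").fetchall()
    # Escape database content before placing it inside HTML.
    items = "\n".join(
        f'  <li><a href="article?id={row_id}">{escape(title)}</a></li>'
        for row_id, title in rows
    )
    return f"<ul>\n{items}\n</ul>"


conn = build_db()
print(render_page(conn))
```

The database only stores and returns rows. The scripting layer builds the page, which matches the division of labour between MySQL and PHP described below.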

§ Linux

See chapter 2.2.3 for a detailed discussion of Linux.

§ Apache

The most widely used web server software in the world, Apache can be run on a variety of operating systems, including AIX, Digital UNIX, FreeBSD, Irix, Mac OS X, Netware, OpenBSD, Solaris, SunOS, Windows, and of course, Linux. Apache's security record is far better than that of Microsoft IIS.

§ MySQL

This database was built with the philosophy that a web database should be lean and fast. It doesn't incorporate the diverse array of application server features that Oracle and Microsoft database tools do, instead focusing on core performance and leaving enhanced functions to scripting languages. MySQL runs on a variety of operating systems, including AIX, FreeBSD, Mac OS X, Solaris, Windows, and Linux. It is well known for its reliability; while the recent "Slammer" worm that crippled much of the Internet was spread through Microsoft SQL Server, it did not affect MySQL databases.

§ Perl

This scripting language was invented to help overworked system administrators. It still performs that function admirably, but is also often used in web development for things like dynamic forms, server monitoring and database integration. Perl runs on a wide variety of operating systems.

§ PHP

Developed from the ground up as a Web developer's language, PHP is easy to use and executes very quickly. Skilled developers can use PHP to build everything from online forms to complex database-driven web applications. PHP is extremely popular, runs on a variety of operating systems, and is most often used in conjunction with MySQL.

One of PHP's most powerful features is its extensive library of functions, which volunteers continually extend. It contains functions for almost every common need, from communicating with a large range of databases to reading and writing files in many different formats, such as PDF. The documentation is also written by volunteers and includes examples supplied by the community; for developers already trained in other languages, this learning-by-example process is extremely efficient.

§ Python

A general-purpose, high-level language, Python is often used to tie different backend pieces together. It lends itself particularly well to Java/XML integration and to the development of dynamic content frameworks. Python runs on several operating systems.

§ Trends

To give a strong boost to this initiative, Sun unveiled a dual-processor server, the LX50, that runs the new Sun distribution of the Linux OS. The server will run on Intel x86 chips and will be available not only with Linux but also with an Intel-enabled version of Sun's own Solaris operating system. In a briefing with analysts and press, Sun's new executive vice president of software, Jonathan Schwartz, further detailed the vendor's Linux strategy: while Sun will keep pushing Solaris in the data centre and J2EE for middle-tier business logic, it is making a major bet on LAMP as the application environment of choice at the edge of today's corporate infrastructures.

A company called BD-X used LAMP as the basis for its web services strategy. BD-X's chief technology officer, Kevin Jarnot, started prototyping a wide-area content-creation environment that would allow BD-X to provide XML-based financial content on demand to a wide variety of subscription customers. "At first, our decision to use Open Source was to build a prototype without a lot of cost," according to Jarnot. But after the prototype was built, Jarnot found that "it would actually be fine for [the] project's required performance and scalability."

BD-X's founders reasoned that if they could transcribe these calls, mark them up in XML, and then offer them to business customers, they might just have a viable business. In the end, Jarnot says, 60 percent of the reason BD-X ended up with an Open Source solution was cost. Thirty percent, he says, was because "we were doing some pretty bleeding edge stuff... if we had been using proprietary software, it would have been a lot harder to do the integration." Oh, and the last ten percent of why BD-X went with Open Source? "Coolness factor," says Jarnot.

Jarnot said he expected that BD-X would eventually need to port the system to a more production-ready commercial environment, such as Solaris running Macromedia's ColdFusion. It turned out, however, that his Open Source prototype was good enough to take into live production. Using the standard Open Source LAMP stack as a backend platform, the team also decided to use the Open Source Elvin message-oriented middleware to filter the transcriptions and deliver them to customers. On the XML side, the developers used jEdit (an Open Source Java text editor) for XML markup and editing. Jarnot mentions quite a few options he is considering for using J2EE and Microsoft .NET technologies in conjunction with his Linux, PHP and Apache systems. This again illustrates the advantages of symbiosis between commercial, proprietary and open platforms.
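Message-oriented middleware such as Elvin performs content-based filtering. Each message goes to the subscribers whose conditions match the message content, not to a fixed destination. The Python sketch below illustrates that idea with an XML-marked-up transcript. It does not use Elvin's actual API. The element names, attributes and subscriber names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List

# A transcript marked up in XML; element and attribute names are hypothetical.
TRANSCRIPT = """
<call company="ACME" quarter="Q3">
  <speaker role="CEO">Revenue grew this quarter.</speaker>
  <speaker role="CFO">Margins were stable.</speaker>
</call>
"""

Predicate = Callable[[ET.Element], bool]


class ContentRouter:
    """Delivers each message to every subscriber whose predicate matches its content."""

    def __init__(self) -> None:
        self.subscriptions: Dict[str, Predicate] = {}
        self.inboxes: Dict[str, List[ET.Element]] = {}

    def subscribe(self, customer: str, predicate: Predicate) -> None:
        self.subscriptions[customer] = predicate
        self.inboxes.setdefault(customer, [])

    def publish(self, message: ET.Element) -> None:
        # Routing depends on what the message contains, not on a named destination.
        for customer, predicate in self.subscriptions.items():
            if predicate(message):
                self.inboxes[customer].append(message)


router = ContentRouter()
router.subscribe("fund_a", lambda m: m.get("company") == "ACME")
router.subscribe("fund_b", lambda m: m.find("speaker[@role='CFO']") is not None)
router.subscribe("fund_c", lambda m: m.get("quarter") == "Q4")
router.publish(ET.fromstring(TRANSCRIPT.strip()))
print({name: len(msgs) for name, msgs in router.inboxes.items()})
```

Because subscribers describe what they want rather than where it comes from, new customers can be added without changing the publishing side.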

3.3. Web Services

According to Brad Murphy, "by now, virtually every company is fully aware and engaged in various stages of integrating the Internet into their business model to help serve customers better. A key part of every integration strategy is the use of Web services. The phenomenal growth of Web services is made possible by open standards that are built into the products that comprise the Web services infrastructure".

With a standards-based infrastructure, companies can use the Web, rather than their own computers, for various services, linking applications and conducting B2B or B2C commerce regardless of the computing platforms and programming languages involved. In a way, Web services are the natural evolution of the Internet, and once again they are all based on open standards. Web services may seem straightforward at this point, but a critical issue companies face is choosing the best strategic enterprise development platform for creating and deploying these new interoperable applications.

· Open standards

Several options are available, but the key thing to remember is that any Web services development platform, by definition, must follow open standards in order to be interoperable, offer rich and robust functionality, and properly address the complex needs of a typical enterprise organization. The W3C has working groups focused on refining the SOAP 1.1 and WSDL 1.1 specifications, which should improve things considerably. The XML Protocol Working Group is working on SOAP 1.2, while the Web Services Description Working Group is creating the WSDL 1.2 specification. Meanwhile, the IETF and OASIS are also working on standardizing Web service specifications, including DIME and WS-Security.
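The following Python sketch illustrates the core specifications described in the paragraph above. It builds a SOAP 1.1 request envelope with the standard `xml.etree.ElementTree` module and reads the operation back out. The envelope namespace is the one defined by SOAP 1.1. The `urn:example:quotes` namespace and the `GetQuote` operation are hypothetical; a real service would define them in its WSDL description.

```python
import xml.etree.ElementTree as ET

# Envelope namespace defined by SOAP 1.1.
SOAP11_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
# Hypothetical service namespace, for illustration only.
SERVICE_NS = "urn:example:quotes"

ET.register_namespace("soap", SOAP11_ENV)
ET.register_namespace("q", SERVICE_NS)


def build_request(symbol: str) -> str:
    """Wrap a hypothetical GetQuote call in a SOAP 1.1 Envelope/Body."""
    envelope = ET.Element(f"{{{SOAP11_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP11_ENV}}}Body")
    operation = ET.SubElement(body, f"{{{SERVICE_NS}}}GetQuote")
    ET.SubElement(operation, f"{{{SERVICE_NS}}}symbol").text = symbol
    return ET.tostring(envelope, encoding="unicode")


def read_operation(xml_text: str) -> tuple:
    """Return the qualified name of the first Body child and its symbol argument."""
    envelope = ET.fromstring(xml_text)
    body = envelope.find(f"{{{SOAP11_ENV}}}Body")
    operation = body[0]
    symbol = operation.find(f"{{{SERVICE_NS}}}symbol").text
    return operation.tag, symbol


request = build_request("IBM")
print(request)
print(read_operation(request))
```

Nothing here is tied to one vendor. Any platform that can produce and parse this XML can take part in the exchange, which is the interoperability argument made in this section.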

While work at the W3C concentrates on new versions of the core Web services specifications, a separate organization, the Web Services Interoperability Organization (WS-I), is defining best practices for ensuring Web service interoperability. Its Basic Profile Working Group is currently developing a set of recommendations on how to use core Web service protocols such as SOAP 1.1 and WSDL 1.1 to maximize interoperability.

The clear leaders in the race to deliver Web services functionality are IBM and the entire Java / J2EE vendor community. Nearly five years ago, they recognized the need to support standards that give customers flexibility of choice, and as a result devoted serious resources to creating their solutions. As discussed in chapter 3.2.2, the Microsoft approach is very different when it comes to implementation. IBM and the Java community are committed to a Web infrastructure based on open standards (especially Java and Linux) to make disparate systems work together. Microsoft presents .NET as an open platform that supports Web standards, but it is still a highly proprietary technology that runs solely on the Windows platform. Microsoft supports interoperability through Web standards such as XML and SOAP, but only within the proprietary Windows framework, which is not easily portable.

· Proprietary platforms

Locking Web services into the Windows platform may be a perfectly acceptable solution for a small or mid-sized business. For larger organizations, however, this approach is not only impractical but also carries risks and hidden costs when addressing the integration and collaboration challenges of significant internal applications and of a large, dispersed organization. It is important to remember that almost every large company has a rich, complex and heterogeneous computing environment.

Another critical factor is that committing to a single operating environment such as Windows means companies lose the ability to choose and negotiate among Web service application vendors for the best functionality and price. Do companies really want to tie their Web services application development, which may carry a substantial portion of their future Internet-based revenue and vital customer relationship activities, so closely to Microsoft?

Microsoft's commitment to standards and interoperability in the Web services world is both welcome and critical to large-scale adoption in the marketplace. The fact that Microsoft has embraced standards and interoperability with the Java world is evidence that Microsoft recognizes customers will no longer tolerate proprietary solutions. It is also true that the .NET offerings will likely capture a large segment of the existing pool of Microsoft developers and customers. Unfortunately for larger customers, however, this will not increase platform choice or vendor flexibility.

If future Web usage, cost savings and revenue generation are even half of what is projected, most companies are likely to continue investing in and supporting Java as their strategic platform. Will .NET gain acceptance and find an important role to play in a Java and standards-based world? Absolutely, but only if Microsoft delivers on its promise to truly support standards. This raises an interesting question: does history give anyone reason to believe that Microsoft is truly interested in customer choice and flexibility based on vendor-neutral standards? Ignorance is bliss.

· Open source

Big names (IBM, Microsoft, BEA) are not the only ones behind Web services software. Open Source software and tools also exist, such as the Apache Tomcat servlet engine, the JBoss J2EE-based server and Apache AXIS (a Java toolkit for building and deploying Web service clients and servers). According to Thomas Murphy, "Consider the fact that Web services themselves are built on top of Open Source technologies like HTTP and TCP/IP, people don't realize the amount of Open Source technology that underlies everything that they do. Many companies use Open Source technology areas other than Web services without giving it a second thought, and so should open themselves to using the technology for Web services as well. Look at the Internet - the most predominant server is Apache, which is Open Source, and so people don't really have problems running Open Source software. And look at the underlying protocols; they're all Open Source as well."
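Murphy's point that Web services run on ordinary open protocols is easy to see in practice. A SOAP 1.1 call is simply an HTTP POST with an XML body and a `SOAPAction` header. The self-contained Python sketch below starts a toy echo endpoint on localhost and calls it, using only the standard library. The endpoint path, the `urn:example:echo` namespace and the action value are hypothetical.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# A minimal SOAP 1.1 envelope; the Echo element and its namespace are hypothetical.
ENVELOPE = (
    '<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">'
    '<soap:Body><Echo xmlns="urn:example:echo">hello</Echo></soap:Body>'
    "</soap:Envelope>"
)


class EchoHandler(BaseHTTPRequestHandler):
    """Toy endpoint: returns the posted SOAP envelope unchanged."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = self.rfile.read(length)
        self.send_response(200)
        self.send_header("Content-Type", "text/xml; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args) -> None:
        pass  # keep the example's output quiet


def call_service(url: str, envelope: str) -> str:
    """Send the envelope as a plain HTTP POST, the way a SOAP 1.1 client does."""
    request = urllib.request.Request(
        url,
        data=envelope.encode("utf-8"),
        headers={
            "Content-Type": "text/xml; charset=utf-8",
            "SOAPAction": '"urn:example:echo#Echo"',
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.read().decode("utf-8")


server = HTTPServer(("127.0.0.1", 0), EchoHandler)  # port 0: the OS picks a free port
thread = threading.Thread(target=server.serve_forever, daemon=True)
thread.start()
url = f"http://127.0.0.1:{server.server_address[1]}/echo"
reply = call_service(url, ENVELOPE)
server.shutdown()
server.server_close()
print(reply == ENVELOPE)
```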

Those involved in the Open Source community, not surprisingly, tout the benefits of Open Source tools for Web services development. Marc Fleury, founder and president of JBoss, says that many commercial Web service tools are "pricy implementations rushed to the market with poor quality." He believes that, because of this, most commercial vendors will disappear over time, but that Open Source technology will survive because of its superior quality. He adds that Web services technology is a "moving target. Many implementations are fighting for standard status. Going with a free software implementation guarantees you the maximum probability of going with a standard." It is not only the Open Source community and analysts who believe that Open Source technologies are the best solution for Web services development; those involved in creating and deploying Web services are backers as well. For example, FiveSight Technologies provides comprehensive Web services workflow integration and software tools, and it has built those tools using Open Source software.

Paul Brown, president of FiveSight, says that "Without using Open Source, we wouldn't have been able to launch our company. If FiveSight on its own, or any other company, had to implement XML schema or WSDL or any other number of Web services technologies in combination, it would be an impossible task. The Open Source community is a catalyst for innovation in software, and so I know things like where we can get a good Open Source implementation of a transaction manager. It's an opportunity to solve a hard problem by building on work from the community at large. We've used Open Source in our development work, from the first piece of software we deployed. We didn't have the money to pay for developers and staff," so the company turned instead to Open Source software, which already provided what it needed to do the job.

Murphy also notes the drawbacks. When companies commit to using Open Source technology for Web services, they take on responsibility for product support and management, since there is no commercial vendor to take care of it for them. That means no vendor technical support and no clear upgrade path. There may also be legal issues involved with Open Source licenses, so businesses need to have their legal staff examine the implications of using Open Source before committing.

Another issue is that the Open Source community has yet to fully embrace Web services technologies. JBoss's Fleury, for example, says that "Web services isn't real so far. We see zero dollars in Web services." There are signs, however, that this is changing, as an increasing number of Open Source tools and developers have turned their attention to Web services.

3.4. Agile Development

Agile Development is a collection of methods and practices that aim to reduce the time and effort required to develop an application through communication, simplicity, feedback, courage and humility. Agile methods include Adaptive Software Development, Crystal, Scrum, XBreed, the Dynamic Systems Development Method (DSDM) and Usage-Centered Design (UCD). The best known agile method, however, is Extreme Programming (XP).

3.5. Extreme Programming

In the early 1990s, Kent Beck was thinking about better ways to develop software. Together with Ward Cunningham, he defined an approach to software development that made everything seem simpler and more efficient. Kent considered what made software simple to create and what made it difficult. In March 1996, he started a project using new concepts in software development, and the result was the Extreme Programming (XP) methodology. Kent defined four values to be pursued by XP programmers: Communication (programmers communicate with their customers and fellow programmers), Simplicity (the design is simple and clean), Feedback (quick feedback by testing the software starting on day one), and Courage (programmers are able to respond courageously to changing requirements and technology).

Extreme Programming (XP) is a deliberate and disciplined approach to software development, and its success rests largely on its emphasis on customer satisfaction. The methodology is designed to deliver the software the customer requires within the target timeframe, and it empowers developers to respond confidently to changing customer requirements, even late in the life cycle. XP also emphasizes teamwork: managers, customers and developers are all part of a team dedicated to delivering quality software. XP implements a simple yet effective way to enable groupware-style development.

XP is an important new methodology for two reasons: it re-examines the software development practices used as standard operating procedures, and it is one of several new lightweight methodologies created to reduce the cost of software. XP also goes one step further and defines a process that is simple and enjoyable.

According to Kent, software development is a difficult task, and effort should be concentrated on four main activities:

§ Listening - Obtaining information from clients, users, managers and business people. The problem must be identified, and the data needed for testing must be collected.

§ Designing - Opinions on this topic are contradictory. Some think that XP means little, short-sighted design. As this is far from desirable, let us consider the other school of thought: the design must be done by the developer and validated by the user, but the time spent on it should be kept low.

§ Coding - The heart of development.

§ Testing - Validating the application, with help from the customer in the final phases.

3.5.1. Extreme Programming and Open Source

· XP Open Values

The four XP values fit well with the hacker ethic:

§ Most open source projects rely on communication. As teams are normally geographically dispersed, written messages (e-mail, newsgroups and forums) can have a positive effect, since they keep a record of the discussions and of the community's comments.

§ Simplicity is often present, and sometimes even exaggerated, in open source projects, which are normally started to satisfy the basic needs of one person or one part of the community.

§ Feedback is one of the most important features of open source development. Responses from the community may concern functionality (requested features, conceptual and technical problems), but are often recommendations on programming techniques (made possible by the shared source code) or suggested new routines, functions or updates.

§ It normally takes a lot of courage to start a new open source project, as the source code may be analysed and criticised by a huge number of peer programmers. Courage is also needed to join an ongoing project and commit one's spare time to coding.

· XP Open Practices

§ Planning - XP assumes that a customer (or a proxy) is part of the project. Open Source projects generally have neither a well-defined "customer" nor a single voice for their users. Instead, they tend to be guided by a combination of their key developers' vision and the "votes" of other developers and users.

§ Small releases - Release early, release often. This is common practice in both XP and Open Source projects.

§ Testing - Automated tests are a key part of XP, as they give developers the courage for re-factoring and collective ownership. They are what make small releases (the "release early, release often" principle shared by XP and Open Source) possible. Although many Open Source projects include test suites, this is possibly the XP practice most worth adopting more widely. An Open Source project that incorporated XP-style tests would gain considerable advantages: it would require less oversight and review, and it would encourage more people to contribute, because they would get immediate feedback on whether their changes worked. Moreover, it would help ensure that the software does not break as it changes, grows and is ported.

§ Re-factoring - "Re-factoring is the process of changing a software system in such a way that it does not alter the external behaviour of the code yet improves its internal structure." Many Open Source projects are reviewed and re-factored, but often reluctantly. In XP, re-factoring is done continuously, so that the design stays as clean as possible. The fear, of course, is that something will break, but this is countered by automated tests and by pair (or peer) programming.

§ Pair programming - One XP recommendation is to always have two people working on the same code. This is rather difficult to achieve in the real world of commercial development, but in Open Source it is compensated by peer programming, with a very large number of programmers reviewing important projects.

§ Collective ownership - In XP, collective ownership means that anyone can change any part of the project; programmers are not restricted to a certain area of expertise. Open Source projects normally work differently, restricting who may make changes directly and defining a process for submitting changes. This is necessary because of the large number of contributors. However, in Open Source software all parts of the code are visible to every programmer, who can always request access to change "restricted" parts of the code in special cases.

§ Continuous integration - Some Open Source projects integrate contributions continuously, while others group them into releases. Some projects allow anyone to commit changes, whereas others require changes to be submitted for review. Nevertheless, on an individual level, most hackers do practice continuous integration.

§ 40-hour week - It is hard to apply this to hackers who work in their spare time. The main objective of this XP principle, however, is keeping developers motivated, and Open Source contributors are motivated by definition.

§ On-site customer - Most Open Source projects have neither a physical "site" nor a specific "customer". However, the essence of this practice is good communication between programmers and users, which was already discussed under the XP values.

§ Coding standards - Most Open Source projects adhere to coding standards for the same reason that XP requires them: to allow different programmers to be responsible for the same code at different times. On smaller projects, the standards are often enforced informally by the key developer, who adapts contributions to his own style. Large projects usually have an explicit set of standards.
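The link between automated tests and re-factoring can be shown in a few lines. In the Python sketch below, the same `unittest` checks run against an original pricing function and a re-factored one. Both pass, so the internal structure changed while the external behaviour did not. The pricing rule and the values, in cents, are hypothetical.

```python
import unittest


def price_before(quantity: int, unit_cents: int) -> int:
    """Original version: the discount arithmetic is duplicated in nested branches."""
    if quantity >= 100:
        total = quantity * unit_cents
        total = total - total * 10 // 100
    else:
        if quantity >= 10:
            total = quantity * unit_cents
            total = total - total * 5 // 100
        else:
            total = quantity * unit_cents
    return total


# Hypothetical discount tiers: (minimum quantity, discount in percent), largest first.
DISCOUNT_TIERS = [(100, 10), (10, 5)]


def price_after(quantity: int, unit_cents: int) -> int:
    """Re-factored version: same results, with the tiers kept in one table."""
    total = quantity * unit_cents
    percent = next((p for minimum, p in DISCOUNT_TIERS if quantity >= minimum), 0)
    return total - total * percent // 100


class PricingTest(unittest.TestCase):
    # (quantity, unit price in cents, expected total in cents)
    CASES = [(1, 250, 250), (10, 250, 2375), (99, 100, 9405), (100, 100, 9000)]

    def check(self, price) -> None:
        for quantity, unit_cents, expected in self.CASES:
            with self.subTest(quantity=quantity):
                self.assertEqual(price(quantity, unit_cents), expected)

    def test_original(self) -> None:
        self.check(price_before)

    def test_refactored(self) -> None:
        # Passing the same checks is what gives the courage to replace the old code.
        self.check(price_after)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(PricingTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
print(result.wasSuccessful())
```

If a contributor's change to `price_after` broke a tier, `test_refactored` would fail immediately. This is the kind of instant feedback the Testing practice above describes.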

· Open Source XP software

§ JUnit is a regression testing framework written by Erich Gamma and Kent Beck. It is used by developers who implement unit tests in Java. JUnit is Open Source Software, released under IBM's Common Public License Version 1.0 and hosted on SourceForge.

§ CurlUnit is an instance of the xUnit architecture commonly used as a testing framework within the Extreme Programming (XP) methodology. Like JUnit, CurlUnit is Open Source Software, released under the IBM Public License and hosted on SourceForge.
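JUnit, CurlUnit and similar tools share the xUnit structure. Test cases are grouped in classes, a fixture is prepared before and cleaned up after each test, suites collect the tests, and a runner reports the results. Python's standard `unittest` module follows the same structure, so the sketch below uses it to show those parts. It is not JUnit or CurlUnit code, and the configuration file being tested is hypothetical.

```python
import os
import tempfile
import unittest


class ConfigFileTest(unittest.TestCase):
    """One xUnit test case class: a fixture plus several test methods."""

    def setUp(self) -> None:
        # Fixture: runs before every test method, so each test starts from a clean file.
        handle, self.path = tempfile.mkstemp(suffix=".cfg")
        with os.fdopen(handle, "w") as f:
            f.write("name=demo\nport=8080\n")

    def tearDown(self) -> None:
        # Runs after every test method, even when the test fails.
        os.remove(self.path)

    def read_settings(self) -> dict:
        # Helper (not a test): parse "key=value" lines from the fixture file.
        with open(self.path) as f:
            return dict(line.strip().split("=", 1) for line in f if line.strip())

    def test_reads_all_keys(self) -> None:
        self.assertEqual(set(self.read_settings()), {"name", "port"})

    def test_port_value(self) -> None:
        self.assertEqual(self.read_settings()["port"], "8080")


# Suite and runner: collect the test methods and report the results.
suite = unittest.TestSuite()
suite.addTest(ConfigFileTest("test_reads_all_keys"))
suite.addTest(ConfigFileTest("test_port_value"))
result = unittest.TextTestRunner(verbosity=2).run(suite)
print(result.testsRun, len(result.failures), len(result.errors))
```

The same four roles (test case, fixture, suite, runner) appear under slightly different names in each xUnit implementation. This shared structure is what makes tests written in one of them easy to read for users of another.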

Other resources for Open Source development following XP practices can be found at http://www.xprogramming.com/software.htm.

· Final word

Open Source software can be used in Extreme Programming development, and XP concepts can be adopted by Open Source projects. Design should, though, be given more importance, by integrating XP, Open Source and MDA. The goal is to reduce the time and increase the quality of open source development, and to reduce costs and improve the efficiency and portability of applications developed under XP principles.

3.6. Open decision

A good starting point in deciding whether to use Open Source software is to look at what phase a company has reached in its development of Web services, says Thomas Murphy, senior program director at the consulting firm Meta Group: "Open Source is ideal for when you need to keep up with where technology is headed, and for that portion of your technical staff working in future technologies. It's well suited for these early stages. But when you're looking to use something for production and development, that requires a stable release path. So I think that often, people look for commercial tools at that point."

Another deciding factor is money. Open Source may be the perfect solution for companies that have not yet decided to go full-bore into Web services, or for those operating on a shoestring or looking to hold down costs.

An exceedingly important issue, but one that is easy to overlook, is the "cultural" factor, says Eric Promislow, senior developer specializing in Web services at ActiveState, which provides Open Source-based applications, tools and support. "Go with Open Source for Web services development if your staff already uses Open Source for other purposes, and want to stay with the tools they know and love," he says. "They know how it works, and they know how to get support for it from the Open Source community." They will be far more productive, and happier in their jobs, than if they had to use commercial tools.

Promislow adds that Open Source software is an ideal way for companies to dip their toes in the water of Web services development, and so suits companies still deciding whether to pursue Web services seriously. "They can make no upfront investment except in time," he says, so such companies can develop pilot projects inexpensively.

Murphy warns that there are a number of non-obvious issues that companies should be aware of when they ultimately decide to go the Open Source route. "Businesses need to understand that in using Open Source, they are also taking on support and product management responsibilities," he warns. "You need someone to track where the bug fixes are, and know what the newest features are likely to be. It's not like a commercial product where someone makes money off support and so provides it for you - you have to do it yourself." Of course, the growing number of service companies built around Open Source is reducing the impact of this problem.

Installing the software may be another costly activity. Not all Open Source software has easy installation routines, and a commercial distribution of the Open Source software is often needed to combine community support and low cost with professional technical support.

Open Source software is built by a community, as a community effort, so businesses have to decide up front how involved their developers can be in that community. Should companies allow developers not only to work with Open Source software, but also to participate in the community and in the community development of the software? Will managers allow developers to spend company time on Open Source community projects? Rules for this should be put in place ahead of time, to avoid problems in later project phases.