“Let’s say there are four steps. Four

stages. Four levels. The first Is learning

the language of the dead, the second is to learn the language of the

living, the third is hearing the music of the spheres. The fourth step is

learning to take the first step.” – Dan Simmons in The Fall of Hyperion

The

standardization is an important point to be considered in the evolution of the

different technologies, and so is their openness. It gives developers the

possibility to understand the infrastructure requirements, to create compatible

products, to participate in their evolution, to innovate. This openness may be

well represented in the source code, the “alma mater” of every

computer program. We need to understand “Open Source” as the concept created by

computer programmers united under the hackers ethic. However, we should not be limited by it: We shall go one

abstraction level further to define the “Open” concept, by analysing also the

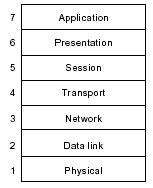

Open Systems, the Open Standards and the Open Platforms. The first part of the analysis will be

divided into three different tiers, which compose together the realm of IT and

Internet today. They are the infrastructure, the development and the

information exchange (see figure below).

Figure 1 – The three tiers under the scope of the first part of this analysis

Let’s

start by the bottom-level tier, and discuss in the first chapter the hardware and the operating systems used to build the Internet infrastructure. Different alternatives are investigated, trying to

understand their original goals, their evolution, their current situation and

the tendencies. The analysis is always focused on the applicability of the

platforms as servers taking part on the Internet infrastructure. And what is the base of Internet? The standards. They participated in the spread

of the Net from the very beginning, so they do in this document. The goal is

to understand what are the different hardware platforms, the software environments, their concepts, history and targets, and how they

became standards. We will also analyse what

benefits we may expect from the open environments, together with the level of

openness available from the common proprietary alternatives. We conclude with the role of standards on the creation of network protocols used worldwide, tantamount for the success of Internet.

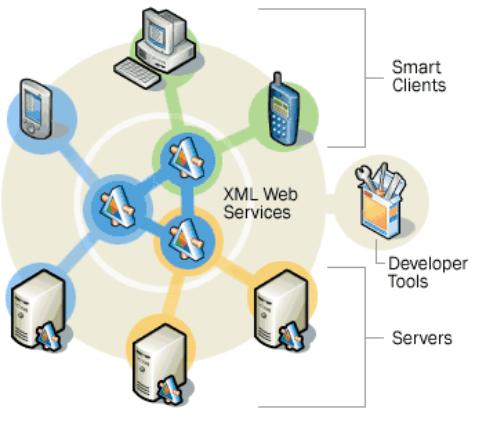

In the

second tier - development - we can consider the ongoing battle for a common web

platform and the role of the web services. This is excellent for a case

study, as we have three different warriors: An open source, an open platform,

and a proprietary solution. Additionally we will analyse the usage of common rules

to exchange information about the design of applications and data, with the goal of technology

independence: MDA and UML. We briefly discuss the extreme

programming concepts and their relation with the open source products and

projects.

The

analysis ends with the third tier – information exchange - by exploiting the

benefits of a common language to exchange information and data definitions,

coupled and structured. This is done by XML and its derivates.

The aim

of the second part is to analyse the technologies by a broader standpoint. We

should be able to trace some conclusions and imagine future trends and proposals.

Using the cases detailed in the first part, we will analyse some of the basic

concepts around the network society and the new economy, trying to understand the impact

of standards in the usage of technological power, the strategies currently used

by the enterprises, the comparative elements between open and proprietary

solutions. Finally, we will discuss the role of politics, to enforce or stimulate

the usage of open technology.

This

study contains background material that introduces some important topics for

readers who are not familiar with them. References are provided for those who

want more complete understanding. The hyperlinks fans should refer to the Appendix A, on page 107, and feel free to use the online version on www.k-binder.be/Papers/, which intend

to be often up-to-date. As an avant-goût, the basic concepts used during the rest of

the document.

To

define the Open general concept, under the scope of this study, let us

consider two definitions, with the help from the dictionary:

Open

1: having no enclosing or confining barrier:

accessible on all or nearly all sides

5: not restricted to a particular group or

category of participants

Based on

this, we can consider as “Open” any product, concept, idea or standard:

§

That is freely available to be

researched, investigated, analysed and used with respect to the intellectual

property;

§

That can be adapted, completed and

updated by any person or company interested in improving its quality or the functionality, possibly coordinated by

a person or organization.

Let us now

analyse the open model, used for Open source development and the establishment

of open standards.

·

Definition

Historically, the makers of proprietary software have generally not made

source code available. Defined as “any

program whose source code is made available for use or modification as users or

other developers see fit”, open source

software is usually developed as a public collaboration and made freely

available.

Open Source is a certification mark

owned by the Open Source Initiative (OSI). Developers of open software (software intended to be

freely shared, potentially improved and redistributed by others) can use the

Open Source trademark if their distribution terms conform to the OSI's Open

Source Definition. All the

terms below must be applied together, for the product and its license, and

in all cases:

§

Free Redistribution – The software being distributed must be

redistributed to anyone else without any restriction.

- Source Code – The source code must be made available (so that the

receiving party will be able to improve or modify it)

- Derived works – The license must allow

modifications and derived works, and must allow them to be distributed

under the same terms as the license of the original software.

- Integrity of the Author’s Source Code – The license must explicitly permit

distribution of software built from modified

source code. The license may require derived works to carry a different

name or version number from the original software.

- No discrimination against persons or groups

- No discrimination against fields of endeavour – It may not

restrict the program from being used in a business, or from being used for

a specific type of research.

- Distribution of license – The rights attached

to the program must apply to whom the program is redistributed without the

need for execution of an additional license by those parties. In other

words, the license must be automatic, no signature required.

- License must not be specific to a product – A product

identified as Open Source cannot be free only if

used in a particular brand of Linux distribution.

- License must not contaminate other software – The license must not place

restrictions on other software that is distributed along with the licensed

software.

As

Perens summarizes, from the programmer’s standpoint, these are the rights when

using Open Source programs:

§

The

right to make copies of the program, and distribute those copies.

- The right to have access to

the software’s source code, a necessary preliminary before you can change it

- The right to make

improvements to the program

·

Licenses

There

are many different types of licenses used by Open Source products. The most common are:

§

Public

Domain – A public-domain program is one upon which the author has

deliberately surrendered his copyright rights. It can’t really be said to come

with a license. A public domain program can

even be re-licensed, its version removed from public domain, with the author

name being replaced by any other name.

§

GPL (GNU General Public License) – the GPL is a political manifesto as well

as a software license, and much of its text is

concerned with explaining the rationale behind the license. GPL satisfies the

Open Source Definition. However, GPL does not guarantee the integrity of the

author’s source code, forces the modifications to be

distributed under the GPL and does not allow the incorporation of a GPL program

into a proprietary program.

§

LGPL (GNU Library General Public License) – The LGPL is a derivative of the

GPL that was designed for software libraries. Unlike the GPL, a LGPL program can be incorporated into

a proprietary program.

§

X,

BSD and Apache – These licenses let you do nearly anything with the software licensed under them. This is because their origin was to cover

software funded by monetary grants of the US government.

§

NPL (Netscape Public License) – This license has been prepared to give Netscape the privilege of re-licensing

modifications made to their software. They can take those

modifications private, improve them, and refuse to give the result to anybody

(including the authors of the modifications).

§

MPL (Mozilla Public License) – The MPL is similar to the NPL, but does not contain the clause

that allows Netscape to re-license the modifications.

|

License

|

|

Modifications

can be taken private and not returned to their authors

|

Can be

re-licensed by anyone

|

Contains

special privileges for the original copyright holder over the modifications

|

|

Public

Domain

|

Ö

|

Ö

|

Ö

|

|

|

GPL

|

|

|

|

|

|

LGPL

|

Ö

|

|

|

|

|

BSD

|

Ö

|

Ö

|

|

|

|

NPL

|

Ö

|

Ö

|

|

Ö

|

|

MPL

|

Ö

|

Ö

|

|

|

Table 1 – Comparison of Open

Source licensing practices

·

Open Source software engineering

The

traditional software engineering process generally consists of marketing requirements, system-level

design, detailed design,

implementation, integration, field-testing, documentation and support. An Open

Source project can include every single one of these elements:

§

The

marketing requirements are normally discussed by using a mailing list or

newsgroup, where the needs from one member of the community are reviewed and

complemented by the peers. Failure to obtain consensus results in “code splits”,

where other developers start releasing their own versions.

§

There

is usually no system-level design for a hacker-initiated Open Source development. A basic design allows the first release of code to be

built, and then revisions are made by the community. After some versions, the

system design is implicitly defined, and sometimes it is written down in the

documentation.

§

Detailed

design is normally absent of pure open source initiatives, mostly because

most of the community is able to read the code directly, interpret the

routines, functions and parameters, and modifying them as required. This makes

further development more difficult and time-consuming.

§

Implementation

is the primary motivation for almost all Open Source software development effort ever expended. It is how most programmers

experiment with new styles, ideas and techniques.

§

Integration

usually involves organizing the programs, libraries and instructions in such a

way they can be used by users in other systems and equipments to effectively

use the software.

§

Field-testing

is one of the major strengths of Open Source development. When the marketing phase has been effective, many

potential users are waiting for the first versions to be available, and willing

to install them, test and make suggestions and correct bugs.

§

The

documentation is usually written in a very informal language, free style and

usually funny way. Often websites are created to allow the documentation to be

provided and completed by the user community, and becomes a potential source

for examples, “tips and tricks”. One of the brightest examples is the online

documentation for PHP.

§

The

support is normally provided via FAQS and discussion lists or even by the

developers themselves by e-mail, in a “best effort” basis, depending on their

availability, willingness and ability to answer the question or correct the

problem. The lack of official support can keep some users (and many companies)

away from Open Source programs, but it also creates opportunities for consultants or

software distributors to sell support contracts and/or enhanced commercial

versions.

The

commercial versions of Open Source software (like BSD, BIND and Sendmail) often use the original Open

Source code, developed using the hackers’ model, later refined by most of the phases described above.

·

Open-source cycle

Many

Open Source software start with an idea, discussed via the Internet, developed by the community,

implemented as first draft versions, and consolidated into final versions after

a lot of debugging. After this final software starts to be used globally, and

interesting companies, the authors may decide to start receiving some financial

compensation for their hard work.

Then an

organization may be created, or some existing company can start to distribute

the product alone or bundled with other similar pieces of software. Sometimes, after a reasonable

funding is raised and more development is done (now remunerated) a commercial

version of the product may be released, often with more functionality than the

free version, and sometimes without the source code being generally distributed. This is attributed to the difficulty

of small companies to remain profitable by distributing only open source

software.

·

Open Source Science

As

argued by DiBona, Ockman and Stone,

“Science is ultimately an Open Source enterprise. The scientific method rests on a process of discovery, and a process of justification.

For scientific results to be justified, they must be replicable. Replication is

not possible unless the source is shared: the hypothesis, the test conditions,

and the results. The process of discovery can follow many paths, and at times

scientific discoveries do occur in isolation. But ultimately the process of

discovery must be served by sharing information: enabling other scientists to

go forward where one cannot; pollinating the ideas of others so that something

new may grow that otherwise would not have been born.”

Ultimately,

the Open Source movement is an extension of the scientific method, because at the heart of the

computer industry is computer science. Computer science differs from all other

sciences, by having one means of enabling peers to replicate results: share the

source code. To demonstrate the validity of

a program, the means to compile and run the program must be provided.

Himanen

considers that the scientists have developed this method “not only for ethical reasons but also because it has proved to be the most successful

way of creating scientific knowledge. All of our understanding of nature is based on this

academic or scientific model. The reason why the original hackers’ Open Source model works so effectively seems to be – in addition to the facts

that they are realizing their passions and are motivated by peer recognition,

as scientists are also – that to a great degree it confirms to the ideal open

academic model, which is historically the best adapted for information

creation”.

One of the important factors in the success

of the Internet comes from the

"governance mechanisms" (rather than regulation) that guide its use

and evolution, in particular, the direct focus on inter-connection and

interoperability among the various constituent networks.

·

Standards

The standards are fundamental for the

network economy. They allow the

companies to be connected by establishing clear communication rules and

protocols. For manufacturing networks, composed by the company with the

product design, the suppliers of different components and the assembly lines,

standards guarantee the compatibility

of all the different parts in the production process. In the information and

technology networks, the standards guarantee the compatibility

of the infrastructure components analysed in the first chapter – hardware, operating systems and application software – and the interoperability

of the companies via the Internet with clear network protocols.

Standardization is by definition a

political, economical and technological process aiming to establish a set of

rules. These are documented agreements containing technical specifications or

other precise criteria to be used as rules, directions or definitions. Thus,

equipments, products, processes and services based in the same set of rules are

fully compatible with each other.

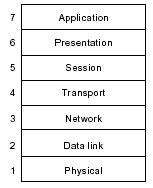

·

De

facto or De jure

Standards are normally classified according to their

nature. De facto standards are products and protocols which conquer the market by

establishing a network of interconnected and

compatible products, and gaining recognition from the consumers. An example is

the set of de facto standards built around the IBM PC specification.

Figure 2 – Examples of de facto

standards and their connectivity

De facto standards are normally preferred by

companies because they don’t need to follow a complex and time-lengthy process

for its validation. Often – as the case of Windows – they are accepted by the market even

without being elaborated by scientific process, and without strong

R&D investments. These are also

the reasons by which they are not easily accepted by normalisation organisations, by the

scientific institutions and by the academic circle.

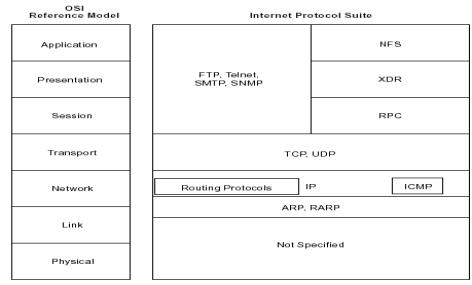

By opposition, de jure standards are often based on a new

technology, created by a company that accept to publish their concepts and

definitions for a review of the academic and scientific communities, and be

officially approved by a normalisation institution. A good example

is the OSI standard and the protocols compatible with its

different tiers (see the Figure

14 on page 35).

Some of the main motivations for this are

the ability to compete with existing dominant standards, to easily found a cooperation network of software and hardware suppliers, to obtain

credibility from the academic world and consequently

gathering the cooperation from the students community.

A good practice from the really independent

and non-profit normalisation organisations is to only

accept to recognise open standards. This is necessary to avoid the formation of monopolies and unfair

commercial practices by the royalty-owners. This has recently been contested

when standards for web services have been analysed.

|

De facto standards

|

De jure standards

|

|

Strengths:

-

Quickly

developed

-

Optimal

solution for the original goal

|

Weaknesses:

-

Lengthy

development

-

Solution can be

used beyond the original goal

-

Compromise of

technical choices

|

|

Weaknesses:

-

Short-sighted

-

Not clearly

defined

-

Stimulates

monopolies (by copyrights)

-

Multiple

solutions for the same type of applications

|

Strengths:

-

Long term

analysis by experts

-

Clear and

complete definitions

-

Stimulates

competition

-

Standard

controlled by independent organizations rather than companies

-

Possibility of

independent certifications

|

Table 2 – Comparison between de

facto and de juri standards

·

Mare liberum or Mare clausum

One standard is known as proprietary when it has been developed by a company,

which remains the owner of royalties that limit the usage of the standard

specifications by the payment of licence fees.

Open Standards are standards with specifications which

are open to the public and can be freely implemented by any developer. Open

standards usually develop and are maintained by formal

bodies and/or communities of interested parties, such as the Free Software/Open Source community. Open standards exist in opposition to

proprietary standards, which are developed and maintained by commercial companies. Open

standards work to ensure that the widest possible group

of contemporary readers may access a publication. In a world of multiple

hardware and software platforms, it is virtually

impossible to guarantee that a given electronic publication will retain its

intended look and feel for all viewers, but open standards at least increase the

likelihood that a publication can be opened in some form.

From a business perspective, open standards help to ensure that product

development and debugging occurs quickly, cheaply and effectively by dispersing

these tasks among wide groups of users. Open standards also work to promote

customer loyalty, because the use of open standards suggests that a company

trusts its clients and is willing to engage in honest conversations with them.

Criteria for open standard products include: absence of specificity to a

particular vendor, wide distribution of standards, and easy and free or low-cost accessibility.

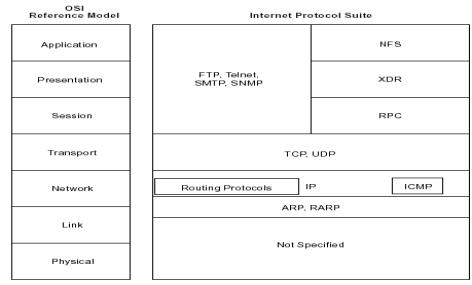

A protocol is the special set of rules

used by different network layers, allowing them to

exchange information and work cohesively. In a point-to-point connection, there

are protocols between each of the several

layers and each corresponding layer at the other end of a communication.

The TCP/IP protocols (TCP, IP, HTTP…) are now quite old and probably much less efficient than

newer approaches to high-speed date networking (e.g. Frame Relay or SMDS). Their success stems from the fact

that the Internet is often today the only

possible outlet that offers a standardized and stable interface, along with a

deliberate focus on openness and interconnection. This makes the Internet

extremely attractive for very different groups of users, ranging from

corporations to academic institutions. By contrast

with traditional telecommunications networks, the rules governing the Internet

focus on interconnection and interoperability, rather than attempting to

closely define the types of applications that are allowed or the rate of return

permitted to its constituents.

Architecture is a term applied to both the

process and the outcome of thinking out and specifying the overall structure,

logical components, and the logical interrelationships of a computer, its

operating system, a

network, or other conception. Architectures can be specific (e.g. IBM 360, Intel Pentium) or reference models

(e.g. OSI). Computer architectures can be divided into five fundamental components

(or subsystems): processing, control, storage, input/output, and communication.

Each of them may have a design that has to fit into the

overall architecture, and sometimes constitute an independent architecture.

As it will be exploited in the chapter 2.2.1 (z/VM and z/OS), the usage of open architectures – even when

maintained by commercial companies - can bring many technical advantages to the

suppliers of the building blocks (Software, hardware and network components) and mainly to the user community,

which can profit from a large network of compatible components, ensuring an

independence and favouring concurrence, creativity and innovation. The economic potential will be analysed in details on the chapter 6.3 (Feedback).

The term platform may be used as a synonym

for architecture, and sometimes may be used to designate their practical

implementations.

·

Certification

Certify is to validate that products,

materials, services, systems or persons are compliant to the standard

specifications. One chapter of the standard definitions is always dedicated to

the certification steps to be performed, and the specifications to be verified.

The certification process may be performed

by any entity. However, independent organizations are normally responsible for

the certification process, which may be expensive and require specialized

technological knowledge.

“It’s the question that drives us. The

question that brought you here. You know the question,

just as I did.” Trinity in The Matrix

“Technology is neither good nor bad, nor is

it neutral” – Melvin Kranzberg

According to Patrick Gerland, “the three

essentials of computer technology are: hardware - the physical machinery that

stores and processes information -, software - the programs and

procedures that orchestrate and control the operation of hardware - and people

who know what the hardware and software can do and who accordingly design and implement applications

that exert appropriate commands and controls over hardware and software to

achieve desired results”.

Despite the broad discussion about open

source and its influence on the evolution of hardware and software,

there’s a general lack of knowledge about the different types of operating

systems, their original and current targets, and their relationship with the

hardware platforms and architectures. For a better understanding of the further

topics, let’s start by discussing this infrastructure and analyse the status of

the hardware and basic software. This analysis will also solidify the

definition of architecture, standards and protocols, to be used on the following chapters. We will see, for example,

that new concepts as Open Source and ASP are largely inspired in their parents Open

Systems and Time Sharing.

The hardware and software components are sometimes

generically referred to as middleware.

Hardware is a broad term. It covers all the

equipment used to process information electronically, and it’s traditionally

divided into two main categories: computers and peripherals.

In this section, we will concentrate our analysis in the evolution of the

computers, from owned machines into commodities. Peripheral references to the

peripherals will be done. Let us consider the division of the computer into

servers and clients, discuss the traditional server families, and the new

concept of servers. After, we will briefly analyse the machines in the

client-side.

In the

beginning, they were simply called computers. And they were large-scale

computers. In the mid-1980’s, after the surge of the personal computers, we started to classify the

computers according to their capacity, their price, their “original” goal and

their target users. This classification remained until the end of the 1990s,

and the three different families will now be briefly described.

Let’s

not talk about the big computers filling entire rooms, weighting tons and

composed by valves.

This is not a mainframe any more, for a long time. Let’s think about relatively

small servers (they are often not larger than a fridge) with big power

capacity, enough to run online applications for thousands of users spread over

different countries, and to process batch jobs which can process millions of

nightly database updates. They have never been sexy (and this was not the goal:

we are talking about a period between 1970 and 1985, when graphics, colours,

sound and animation were not part of the requirements for a professional

computer application) and today it seems that the only alternative for them to

survive is to mimic and co-operate with open systems environments.

Usually mainframe applications

are referred to as “legacy” (“Something received from the

past”).

One can argue the usage of this term,

mainly because of the undeniable stability and performance of these machines

and considering that most of the core applications for big companies are still

processed using mainframe computers, connected with computers from other

platforms to get profit from a better user interface.

Usually mainframe applications

are referred to as “legacy” (“Something received from the

past”).

One can argue the usage of this term,

mainly because of the undeniable stability and performance of these machines

and considering that most of the core applications for big companies are still

processed using mainframe computers, connected with computers from other

platforms to get profit from a better user interface.

Current

mainframe brands are IBM zSeries, Hitachi M-Series, Fujitsu-Siemens PrimePower 2000 and BS2000 and Ahmdal Millenium.

The term mainframe is also being marketed by some vendors to designate high-end

servers, which are a direct evolution from the mid-range servers, with a

processing capacity (and price) aiming to compete with the traditional

mainframes.

Figure 3 – IBM ZSeries 900

This is

a difficult assumption to make. The capacity of the machines in this category

is increasing fast, and they are already claiming to be high-end servers, and

winning market share from the mainframes. Let’s consider it a separate

category, for the moment.

Once

upon a time, there were the minicomputers.

They used to fill the mid-range area between micros and mainframes. As of 2001,

the term minicomputer is no longer used for the mid-range computer systems, and

most are now referred to simply as servers.

Mid-range servers are machines able to perform complex processing, with

distributed databases, multi-processing and multi-tasking, and deliver service

to the same number of users than a high-end server, but with lower cost and often

with an inferior level of protection, stability and security.

The main

suppliers in this area are HP and Sun.

Figure 4 – HP RISC rp8400

Initially

the microcomputers were used as desktop, single-user machines. Then they

started to assume functions from the minicomputers, like multi-tasking and

multi-processing. Nowadays they can provide services to several users, host web

applications, function as e-mail and database servers. Initially each server was used for a different function, and they were connected via the

network. This is called multi-tier

server architecture. With the evolution of the processing speed, today

each server may be used for several functions.

They may

also work as metaframes, providing service to users connected via client or

network computers. All the applications run in the server side (that is required to have a very good processing capacity),

which exchange the screens with the clients (that can be relatively slow).

There

are several suppliers in this area, like Dell and Compaq.

Figure 5 – DELL Poweredge 1600SC

Figure 6 – Traditional Server

families

The

classification categories discussed before are becoming more difficult to

define and the division lines are blurring. The new generation of servers span

a large range of price and processing capacity, starting by low-entry models,

until powerful high-end servers, which can be tightly connected into clusters.

This methodology is implemented – in different ways – by the most part of the

hardware suppliers in the server market.

Figure 7 – The new generation of

servers

The

differences between the entry-level and high-end servers depend on the

architecture and the supplier. They may imply the number of parallel

processors, the type and capacity of each processor, the memory available,

cache, and connections with the peripherals. The clusters are normally used for

applications demanding intensive calculations, and are composed by several

servers connected by the usage of high-speed channels (when they are situated

in the same space) or high-speed network links (when the servers are geographically dispersed to provide

high availability in cases of natural disasters). There are many different ways

to implement the clusters to obtain a single point of control and maintenance,

treating the cluster almost as a single server – without creating a single point of failure, which could imply

the complete unavailability of the cluster in case of problems with one of the

elements.

Estimate

the throughput for future applications is only possible with a good capacity

planning, prepared by experimented specialists. To find a server – as performance tests with the real charge are quite difficult to

be elaborated - there are several benchmarks, organized by recognized

independent companies, each one with a clear advantage for a different

supplier. As the cost is extremely important - and when the hardware supplier participates in the capacity planning – a Service Level

Agreement (SLA) may specify guarantees that the

capacity installed will satisfy the estimated demand.

However,

another differentiating factor, which is gaining importance nowadays, is the

openness of the hardware and operating systems. This will be largely exploited further in

this document.

The

current challenge, in the quest for the holy server, is to build flexible, scalable

and resilient infrastructures, able to respond to unexpected surges in traffic

and use. The solution may be found by implementing concepts like grid computing

and autonomic computing, which are highly dependent on open standards and tightly related to open source.

·

Grid Computing

Grid computing

is a new, service-oriented architecture that embraces heterogeneous systems and

involves yoking together many cheap low-power computers - dispersed

geographically - via open standards, to create a system with the

high processing power typical of a large supercomputer, at a fraction of the

price. The goal is to link – mainly through the Internet – computers and peripherals, by cumulating their processing and

stocking capacities. All the connected systems may then profit from the set of

assembled resources – like processing capacity, memory, disk, tapes, software and data – that are global and virtual at the same time.

The

challenges are to connect different machines and standards, to develop software able to manage and distribute the resources over the network, to create a development

platform enabling programs to make profit of the parallel and distributed

tasks, security issues (authentication, authorisation and policies) and to develop

reliable and fast networks.

The

basic principle is not new, as the HPC (high performance computing) technology is already used in

academic and research settings, and the peer-to-peer has already been used to create a large network of personal computers (e.g. SETI@home). The target now is to expand

this technology to the business area via associations like the Global Grid Forum (assembling more than 200 universities, laboratories and

private companies).

Another

initiative is the Globus project, formed by many American

research institutes, responsible for Globus toolkit, which uses Open Source concepts for the basic software development, and has created the Open Grid Services Architecture (OGSA), a set of open and published

specifications and standards for grid computing (including SOAP, WSDL and XML). The OGSA version 1.0 has been

approved in December 2002. Other similar projects are Utility Computing (HP) and N1 (Sun).

With the costs to acquire, deploy, and

maintain an expanding number of servers weighing down potential productivity

gains, the market is shifting toward various concepts of service-centric

computing. This may hold deep potential to not only lower the capital and

operational costs of a data centre, but also to impart that infrastructure with

the increased availability and agility to respond to an ever-changing business

environment.

·

Autonomic Computing

As IT

systems become more complex and difficult to maintain, alternative technologies

must manage and improve its own operation with minimal human intervention.

Autonomic computing is focused in making software and hardware that are self-optimising,

self-configuring, self-protecting

and self-healing. It is

similar to the grid concepts, by embracing the development of intelligent, open

systems that are capable of adapting to varying circumstances and preparing

resources to efficiently handle the workloads placed upon them. Autonomic

computers aren’t a separate category of products. Rather, such capabilities are

appearing in a growing number of systems.

The

objective is to help companies more easily and cost-effectively manage their

grid computing systems. It’s expected that when autonomic computing reaches its

full potential, information systems will run themselves based on set business

policies and objectives. The implementation of such a system start with a trial

phase, in which the system suggests actions and then wait for approval. After a

fine-tuning of the rules, the system may run unattended.

It’s

believed that only a holistic, standards-based approach can achieve the

full benefits of autonomic computing. Several standards bodies – including the Internet Engineering Task Force, Distributed Management Task Force and

Global Grid Forum – are working together with private companies to leverage

existing standards and develop new standards where none exist. Existing and emerging standards relevant to autonomic computing include:

- Common Information Model

- Policy, Simple Network Management

Protocol (IETF)

- Organisation for the

advancement of structured information standards

- Java management extensions

- Web Services Security

One

example of current technologies using autonomic components is disk servers with

predictive failure analysis and pre-emptive RAID reconstructs, which are designed to monitor the system health and

to detect potential problems or systems errors before harming the data.

Performance optimisation is also obtained via intelligent cache management and

I/O prioritisation.

In the

beginning of the personal computers, hobby was the main objective,

and there were several different platforms (Amiga, Commodore, TRS80, Sinclair, Apple, among others) completely

incompatible. BASIC

was the common language, although with different implementations. Then, in 1981,

IBM created the PC – the first small computer to be able to run business applications

- and in 1984, Apple released the Macintosh – the first popular graphical platform. The era of microcomputers

started.

The natural

evolution of the microcomputers, today’s desktops are able to run powerful

stand-alone applications, like word processing and multimedia production. They are normally based on Intel or Macintosh platforms and used by small businesses or home users. They are

much more powerful when connected to one or more servers, via network or the Internet. They become clients, and can be

used to prepare and generate requests that are processed by the servers, and

then receive, format and display the results.

Most of

the desktop market is dominated by computers originated from the IBM PC architecture, built from several suppliers (and even sold in

individual parts) around Intel and AMD processors. Apple is also present – with a different architecture - traditionally in

the publishing and multimedia productions.

Figure 8 – Desktop x Network

computers

Recent

evolutions from the desktops, the Network Computers (also called WebPC or NetPC) are also used as clients, with

less power and more flexibility. Normally these computers have built-in

software, which is able to connect to the

network and fetch the needed applications from the servers, or simply use

the applications running on the servers themselves.

The main

objective is to allow shops and sales persons to work from anywhere in the

world in the same way, with the same profile, even if changing the hardware interface. It also allows a better level of control over

personalization, as all the information is always stored in the server, the client working simply as

the interface.

Main

suppliers are IBM and Compaq, with network computers working as PC clients, and Citrix with a proprietary client able to connect only to Citrix servers and obtain a perfect

image of PC client applications via the network. Similar technology can be

found under the names “thin clients” and “smart display”.

To

support the development of network computing, the company Sun developed a new language (Java), aiming to guarantee the

independence of the hardware equipment or platform by the usage of Virtual Machines.

The idea

is to connected small devices than laptops to the network. The PDAs are largely used today, and have the capacity to work in network,

even if the communication costs avoid many to use them to send e-mails and to

connect to the Internet.

With the

decreasing size of the chips, and with the development of wireless protocols – like bluetooth and WiFi - some technology “hype-makers” are trying to convince the general

public that they need every single appliance connected to the network. Although with some interesting

applications, most of them are simply gadgets. With the current economic

crisis, there is a low probability that such products will have a viable

commercialisation soon.

Some

companies are using these technologies in warehouse management systems, to

reduce costs and increase the control on stock and product tracing. Also

current is the usage of Linux in set-top boxes, able to select TV channels,

save programs in hard disks for later viewing, filter channel viewings

according to license keys.

See also

chapter 2.3.4 (Trend: Open Spectrum).

As we already discussed, hardware is the equipment used to

process information. An Operating System is the system software responsible to manage the

hardware resources (memory, Input/Output operations, processors and

peripherals), and to create an interface between these resources and the

operator or end-user.

Originally,

each hardware platform could run its own operating system family. This is not true any more. Here we are going to briefly

analyse some examples of well-known operating systems, and present some

historical facts related to standards, architectures and open source.

Later we are going to discuss the initial dependence between the operating

systems and the architecture, and how this has changed.

With a history started in 1964,

IBM mainframe operating systems are currently z/VM and z/OS (a third one, VSE/ESA, is only kept for compatibility

issues). They share the same architecture (currently called z/Architecture)

and their main characteristics are:

With a history started in 1964,

IBM mainframe operating systems are currently z/VM and z/OS (a third one, VSE/ESA, is only kept for compatibility

issues). They share the same architecture (currently called z/Architecture)

and their main characteristics are:

§

z/VM was originally built to ease the machine resources sharing by

creating simulated computers (virtual machines), each running its own operating

system.

z/VM was originally built to ease the machine resources sharing by

creating simulated computers (virtual machines), each running its own operating

system.

§

z/OS - has

always been the operating system used by large IBM systems, offering a great level of availability and manageability.

IBM has been the leader of the mainframe commercial market since the

very beginning, with strong investments in R&D and marketing strategies, and with a determination to use common

programming languages (like COBOL) in the conquest of programmers

and customers. One key factor for IBM’s success is standardization. In the 1950s, each computer

system was uniquely designed to address specific applications and to fit within

narrow price ranges. The lack of compatibility among these systems, with the

consequent huge efforts when migrating from one computer to another, motivated

IBM to define a new ”architecture” as the common base for a whole family of

computers. It was known as the System/360, announced on April 1964,

which allowed the customers to start using a low-cost version of the family,

and upgrade to larger systems if their needs grew. Scalable architecture

completely reshaped the industry. This architecture introduced a number of the

standards for the industry - such as 8-bit bytes and the EBCDIC character set

- and later evolved to the S/370 (1970), 370-XA (1981), ESA/370 (1988), S/390 (1990) and

z/Architecture (2000). All these architectures were backward compatible,

allowing the programs to run longer with less or no adaptation, while convincing

the customers to migrate to the new machines and operating systems versions to

profit from new technologies.

The

standardization of the hardware platforms, by using a common architecture, helped to unify the efforts

from the different IBM departments and laboratories spread over the world. The usage of a

common set of basic rules was also fundamental in the communication among the

hardware and software departments, which allowed the existence of the three different

operating system families, able to run in the same hardware.

This

architecture is proprietary (privately owned and controlled - the

control over its use, distribution, or modification is retained by IBM), but its principles and rules

are well defined and available to the customers, suppliers and even the competitors. This openness allowed its

expansion by:

- Hardware - Other companies

(OEM - Original

Equipment Manufacturer) started to build hardware equipment full compatible

with the IBM architecture (thus allowing

its use together with IBM hardware and software), and often with advantages on pricing, performance or additional

functions. This gave a real boost on the IBM architecture, by giving the

customer the advantage of having a choice, while enforcing the usage of

its standards and helping it to sell even

more software and hardware.

- Software – Initially IBM was dedicated to building

operating systems, compilers and basic tools. Many companies (ISV - Independent Software Vendors) started to develop software to complement this basic

package, allowing the construction of a complete environment. The end-user

could then concentrate in the development of its applications, while

buying the operating system and the complementary tools

from IBM and the ISV.

- Services – As the IBM architecture became the

standard for large systems, consulting companies could then specialize in

this market segment, providing standard service offerings around the

implementation of the hardware components, operating

systems and software. With the recent increase on the demand for this type of service,

mainly due to the Y2K problem, the Euro

implementation and the outsourcing hype, most of the infrastructure

services in large environments have been done by external consultants.

There’s

also a dark side: several times, IBM has been accused of changing the architecture without giving

enough time to the competitors to adapt their hardware and software. Only when the competition from

other high-end servers suppliers increased, IBM has been forced to review this

policy, creating the concept of “ServerPac”. The operating system started to be bundled with ISV software, giving the user a certain guarantee of compatibility.

A second

problem is the complete ownership of the standards, by IBM. Even if other companies found

good ways of implementing new technologies, thus improving the quality of the service or the performance, they always needed to adapt

their findings to fit in the IBM architecture.

This limited the innovation coming from the OEM companies as they could rarely think about improvements that would

imply architectural changes. On the other hand, the customers were never sure

if the changes imposed by IBM were really needed, or simply another way of

forcing them to upgrade the operating systems version or the hardware equipment with an obvious financial benefit for IBM.

A third

aspect is related to the availability of the code source of the operating

systems and basic software. Originally, IBM supplied the software with most part of the source code and a good technical documentation about the software’s internal

structures. This gave the customers a better knowledge about the architecture

and the operating systems, allowing them to analyse problems independently, to

drive their understanding beyond the documentation, and to elaborate routines

close to the operating systems. After, they created the “OCO” (Object Code Only) concept, which persists today.

The official reason behind this was the difficulty for IBM to analyse software

problems, due to the increasing number of customers that started to modify the

IBM software to adapt them to local needs, or to simply correct bugs. In fact,

by hiding the source code IBM also avoided that customers discovered flaws and

developed interesting performance upgrades. This also helped to decrease the

knowledge level from the technical staff, which is limited today to follow the

instructions given by IBM, when installing and maintaining the software. The

direct consequence was a lack of interest for the real system programmers and

students, who have been attracted to UNIX and Open Source environments. A positive consequence of the OCO policy is the

quicker migration from release to release. Since the code is not modified, but

instead APIs (Application Programming Interfaces)

can be used to adapt the system to each customer needs.

These

factors were crucial to create a phenomenon called downsizing: Several

customers, unhappy with the monopoly in the large systems, and with the high

prices practiced by IBM and followed by the OEM and ISV suppliers, started to migrate their centralized (also called

enterprise) systems to distributed environments, by using midrange platforms

like UNIX. In parallel (as it will be

discussed on chapter 2.2.2), there were many developments of applications and

basic software in the UNIX platforms, the most important around the Internet. To counterattack, IBM decided

to enable UNIX applications to run in their mainframe platforms: MVS (the Open

Edition environment, now called UNIX System Services) and VM (by running Linux as guest operating systems in virtual machines). IBM major effort

today is to create easy bridges between those application environments, and to

convince the customers to web-enable the old applications, instead of rewriting

them.

Important is to notice that the standardisation on the hardware level was not abstracted to the operating system level. As mentioned above IBM always maintained three different operating systems, each one

targeted to a different set of customers. This was not always what IBM desired,

and in every implementation of a new architecture level (e.g. XA, ESA), the rumours were that the

customers would be forced to migrate from VM and VSE to MVS, which would become the only supported environment. This is

still true for the VSE (note that there’s no z/VSE announced yet), but z/VM became a strategic environment allowing IBM to implement Linux in all hardware platforms.

Usually, the mainframe implementations are highly standardized. As the

professionals in this domain understood the need for standards, the creation of company

policies is done even before installing the systems.

"Unix was the distilled essence of

operating systems, designed solely to be useful. Not to be marketable. Not to

be compatible. Not to be an appendage to a particular kind of hardware. Moreover a computer running Unix was to be useful as a computer,

not just a `platform' for canned `solutions'. It was to be programmable -

cumulatively programmable. The actions of program builders were to be no

different in kind from the actions of users; anything a user could do a program

could do too...." (McIlroy - Unix on My Mind)

An analysed by Giovinazzo, “while it may seem by today’s standards that a universal operating

system like UNIX was inevitable, this was not always the case.

Back in that era there were a great deal of cynicism concerning the possibility

of a single operating system that would be supported by all platforms. (…)

Today, UNIX support is table stakes for any Independent Software Vendor (ISV) that

wants to develop an enterprise class solution”.

An analysed by Giovinazzo, “while it may seem by today’s standards that a universal operating

system like UNIX was inevitable, this was not always the case.

Back in that era there were a great deal of cynicism concerning the possibility

of a single operating system that would be supported by all platforms. (…)

Today, UNIX support is table stakes for any Independent Software Vendor (ISV) that

wants to develop an enterprise class solution”.

It may surprise some people but the

conception of UNIX (MULTICS)

started almost in the same time than the IBM System/360, and

the first UNIX edition was released just after the IBM System/370, in November 1971.

MULTICS was developed by the General Electric Company, AT&T Bell Labs

and the Massachusetts Institute of Technology to allow many users to access a single

computer simultaneously, allowing them to share data and the processing cost.

Bell Labs abandoned the project and used some of MULTICS concepts to develop

UNIX on a computer called DEC PDP-7, predecessor of the

VAX computers.

The “C” language has been created under

UNIX and then - in a

revolutionary exploit of recursion - the UNIX system itself has been rewritten

in “C”. This language was extremely efficient while relatively small and

started to be ported to other platforms, allowing the same to happen with UNIX.

Besides portability, another important

characteristic of the UNIX system was its simplicity

(the C logical structure could be learnt quickly, and UNIX was structured as a

flexible toolkit of simple programs). Teachers and students found on it a good

way to study the very principles of operating systems, while learning UNIX more

deeply, and quickly spreading UNIX principles and advantages to the market.

This helped UNIX to become the ARPANET (and later Internet)

operating system by excellence. The universities started to

migrate from proprietary systems to the new open

environment, establishing a standard way to work and communicate.

Probably one of the UNIX most innovations was its original

distribution method. AT&T could not market computer

products so they distributed UNIX in source code, to

educational institutions, at no charge. Each site that obtained UNIX could

modify or add new functions, by creating a personalized copy of the system.

They quickly started to share these new functions and the system become

adaptable to a very wide range of computing tasks, including many completely

unanticipated by the designers.

Initiated by Ken Thompson,

students and professors from the University of California-Berkeley continued to enhance UNIX,

creating the BSD (Berkeley Software

Distribution) Version 4.2, and distributing it to many other universities.

AT&T distributed their own

version, and were the only able to distribute commercial copies.

In the early 80s the microchip and

local-area network started to have an important

impact on the UNIX evolution. Sun Microsystems used the Motorola 68000 chip to provide an

inexpensive hardware for UNIX. Berkeley UNIX developed built-in support for the DARPA Internet protocols, which encouraged further growth of the Internet.

“X Window” provided the standard for graphic workstations. By 1984 AT&T started to commercialise

UNIX. “What made UNIX popular for business applications was its timesharing,

multitasking capability, permitting many people to use the mini- or mainframe;

its portability across different vendor's machines; and its e-mail capability”.

Several computer manufacturing companies

- like Sun, HP, DEC and Siemens - adapted AT&T and BSD UNIX distributions to their own machines, trying to seduce new users by developing new functions to benefit from hardware differences. Of course, the more differences between the UNIX

distributions, more difficult to migrate between platforms. The customers

started to be trapped, like in all other computer families.

As the

versions of UNIX grew in number, the UNIX System Group (USG), which had been formed in the

1970s as a support organization for the internal Bell System use of UNIX, was

reorganized as the UNIX Software Operation (USO) in 1989. The USO made several

UNIX distributions of its own - to academia and to some commercial and

government users –and then was merged with UNIX Systems Laboratories, to become

an AT&T subsidiary.

AT&T entered into an alliance with Sun Microsystems to bring the best features from the many versions of

UNIX into a single unified system. While many applauded this decision,

one group of UNIX licensees expressed the fear that Sun would have a commercial

advantage over the rest of the licensees.

The

concerned group, leaded by Berkeley, in 1988 formed a special

interest group, the Open Systems Foundation (OSF), to lobby for an

"open" UNIX within the UNIX community. Soon several large companies also

joined the OSF.

In

response, AT&T and a second group of licensees formed their own group, UNIX International. Several negotiations took place, and the commercial

aspects seemed to be more important than the technical ones. The impact of

losing the war was obvious: important adaptations should be done in the

complementary routines developed, some functions – together with some

advantages – should be suppressed, some hardware innovative techniques should be abandoned in the name of the

standardization. When efforts failed to bring

the two groups together, each one brought out its own version of an

"open" UNIX. This dispute could be viewed two ways: positively, since

the number of UNIX versions were now reduced to two; or negatively, since there

now were two more versions of UNIX to add to the existing ones.

In the

meantime, the X/Open Company – company formed in guise to define a comprehensive open

systems environment - held the centre ground. X/Open chose the UNIX system as the platform for the basis of open systems and started

the process of standardizing the APIs necessary for an open operating system specification. In addition, it looked at areas of the system

beyond the operating system level where a standard approach would add value for

supplier and customer alike, developing or adopting specifications for

languages, database connectivity, networking and connections with the mainframe

platforms. The results of this work were published in successive X/Open

Portability Guides (XPG).

In December 1993, one specification was

delivered to X/Open for fast track processing.

The publication of the Spec 1170

work as the proper industry supported specification occurred in October 1994.

In 1995 X/Open introduced the UNIX 95 brand for computer systems guaranteed to

meet the Single UNIX Specification. On 1998 the Open Group introduces the UNIX

98 family of brands, including

Base, Workstation and Server. First UNIX 98 registered products shipped by Sun, IBM and NCR.

There is now a single, open, consensus

specification, under the brand X/Open® UNIX. Both

the specification and the trademark are now managed and held in trust for the

industry by X/Open Company. There are many competing products, all implemented

against the Single UNIX Specification, ensuring competition and vendor choice.

There are different technology suppliers, which vendors can license and build their own product, all of them

implementing the Single UNIX Specification.

Among others, the main definitions under

this specification on version 3 - which is a result of IEEE POSIX, The Open Group and the industry efforts – are the definitions (XBD), the commands and utilities (XCU), the system interfaces and headers (XSH) and the networking services. They are part of the X/Open CAE (Common Applications

Environment) document set.

In November 2002, the joint revision to

POSIX® and the Single UNIX® specification have been approved as an International Standard.

The Single UNIX Specification brand program has now achieved

critical mass: vendors whose products have met the demanding criteria now

account for the majority of UNIX systems by value. UNIX-based systems are sold

today by a number of companies.

UNIX is a perfect example of a constructive way of

thinking (and mainly: acting!), and it proves that the academia and commercial

companies can act together, by using open standards regulated by independent

organisations, to construct an open platform and stimulate technological

innovation.

Companies using UNIX systems, software and hardware compliant with the X/Open specifications can be less dependent of the

suppliers. Migration to another compliant product is always possible. One can

argue that, in cases of intensive usage of “non-compliant” extra features, the

activities required for a migration can demand a huge effort.

Figure 9 – UNIX Chronology

“Every good work of software starts by scratching a

developer’s personal itch” – Eric Raymond

This discussion is not completely separated from the previous one.

Developed by Linus Torvalds, Linux is a product that mimics the

form and function of a UNIX system,

but is not derived from licensed source code.

Rather, it was developed independently by a group of developers in an informal

alliance on the net (peer to peer). A major benefit is that the source code is

freely available (under the GNU copyleft), enabling the

technically astute to alter and amend the system; it also means that there are

many, freely available, utilities and specialist drivers available on the net.

This discussion is not completely separated from the previous one.

Developed by Linus Torvalds, Linux is a product that mimics the

form and function of a UNIX system,

but is not derived from licensed source code.

Rather, it was developed independently by a group of developers in an informal

alliance on the net (peer to peer). A major benefit is that the source code is

freely available (under the GNU copyleft), enabling the

technically astute to alter and amend the system; it also means that there are

many, freely available, utilities and specialist drivers available on the net.

The hackers – not to be confounded with the “crackers” – appeared

in the early sixties and define themselves as people who “program

enthusiastically”

with “an ethical duty (…) to share their

expertise by writing free software and facilitating access to

information and computing resources wherever possible”.

After the collapse of the first software-sharing community

- the MIT Artificial Intelligence Lab

- the hacker Richard Stallman quit his job at MIT in 1984 and started to work

on the GNU system. It

was aimed to be a free operating system,

compatible with UNIX. “Even if GNU had no technical advantage over UNIX, it would have a

social advantage, allowing users to cooperate, and an ethical advantage,

respecting the user’s freedom”.

He started by gathering pieces of free software, adapting them, and developing

the missing parts, like a compiler for the C language and a powerful editor

(EMACS). In 1985, the Free Software Foundation

has been created and by 1990, the GNU system was almost complete. The only

major missing component was the kernel.

In 1991, Linus Torvalds developed a free

UNIX kernel using the FSF toolkit.

Around 1992, the combination of Linux and GNU resulted in a complete free operating system, and

by late 1993, GNU/Linux could compete on stability and reliability with many

commercial UNIX versions, and hosted more software.

Linux was based on good design principles and a good

development model. By opposition to the typical organisation – where any

complex software was developed in a carefully

coordinated way by a relatively small group of people – Linux was developed by

a huge numbers of volunteers coordinating through the Internet.

Portability was not the original goal.

Conceived originally to run on a personal computer (386 processor), later on

some people ported the Linux kernel to the Motorola 68000 series – used in early

personal computers – using an Amiga computer. Nevertheless, the serious effort

was to port Linux to the DEC Alpha machine. The entire code has

been reworked in such a modular way that future ports were simplified, and

started to appear quickly.

This modularity was essential for the new

open-source development model, by allowing several people to work in parallel

without risk of interference. It was also easier for a limited group of people

– still coordinated by Torvalds – to receive all modified modules and integrate

into a new kernel version.

Besides Linux, there are many freely available UNIX and UNIX-compatible implementations, such as

OpenBSD, FreeBSD and NetBSD. According to Gartner, “Most BSD systems have liberal open source licenses, 10

years' more history than Linux, and great reliability and efficiency. But while

Linux basks in the spotlight, BSD is invisible from corporate IT”.

BSDI is an independent company that markets products derived from the Berkeley Systems Distribution (BSD), developed at the

University of California at Berkeley in the 60's and 70's. It is the operating

system of choice for many Internet service providers. It is, as with Linux, not

a registered UNIX system, though in this case there is a common code heritage

if one looks far enough back in history. The creators of FreeBSD started with

the source code from the Berkeley UNIX. Its

kernel is directly descended from

that source code.

Recursion

or fate, the fact is that Linux history is following the same steps than UNIX. As it was not developed using

the source from one of the UNIX distributions, Linux have several technical

specifications that are not (and will probably never be)

compliant with UNIX98. Soon, Linux started to be distributed by several

different companies worldwide – SuSE, Red Hat, MandrakeSoft, Caldera International / SCO and Conectiva, to name some –, bundled

together with a plethora of open source software, normally in two different packages

- one aiming at home users and another for companies. In 2000, two

standardization groups appeared - LSB

and LI18NUX - and have

incorporated under the name Free Standards Group, “organized to accelerate the use and acceptance of open

source technologies through the application, development and promotion of

interoperability standards for open source development”.

Caldera, Mandrake, Red hat and SuSE currently have versions compliant with the

LSB certification.

Still in

an early format, the current standards are not enough to guarantee the compatibility among the different

distributions. In a commercial maneuver – to reduce the development costs – SCO, Conectiva, SuSE and Turbolinux formed a consortium called UnitedLinux, a joint server operating system for enterprise deployment. The software and hardware vendors can concentrate on one major business distribution,

instead of certifying to multiple distributions. This is expected to increase

the availability of new technologies to Linux customers, and reduce the time needed for the development of

drivers and interfaces. Consulting companies can also concentrate the efforts

to provide more and new services.

However, Red hat – the dominant seller in

the enterprise market segment – has not been invited to join the UnitedLinux family before its

announcement. Few expect it to join now. Also missing are MandrakeSoft and Sun Microsystems.

With the help from important IT companies,

it’s expected from the Free Standards Group the same unifying role than the one

played by the Open Group, which was essential for the UNIX common specification.

This is very important to give more credibility for the companies willing to

seriously use Linux on their production

environments. As in the UNIX world, the Linux sellers could still keep their

own set of complementary products, which would be one important factor to

stimulate the competition. The distributions targeting the home users can

continue separated, by promoting the diversity needed to stimulate creativity,

and by letting the natural selection chose the best software to be elected for enterprise

usage. Darwin would be happy. Also would the users.

Linux already proved to be a

reliable, secure and efficient operating system,

ranging from low entry up to high-end servers. It has the same difficulty level

than other UNIX platforms, with the big

advantage of being economically affordable – almost everybody can have it at

home, and learn it by practice. However, is it technically possible for

everyone to install it and use it?

The main argument supporting all the open source

software is that a good support can

be obtained from service providers, besides the help from the open source

community, by using Internet tools like newsgroups and

discussion lists. Although, our question may have two different answers: One

for servers, other for clients.

It is certainly possible for companies to

replace existing operating systems with Linux, and the effort needed is mainly related to the conversion of the

applications than to the migration of the servers or the operating systems.

Several companies have been created using Linux servers to reduce their initial

costs, and they worked with specialized Linux people from the beginning. Most

of the companies replacing other platforms by Linux already have a support

team, with a technological background, responsible by the installation and

maintenance of the servers. Part of this team has already played with Linux at

home and has learnt the basic knowledge. The other part is often willing to learn

it, compare with the other platforms, and to discover what it has that is

seducing the world.

The desktop users have a completely

different scenario. This is because Linux is based on a real operating

system, conceived to be installed and adapted by people with a good

technical background, and willing to dedicate some time for this task. The main

goal of most of the people (except probably the hackers themselves) installing Linux at home is to learn

it, to play around, to compare with the other systems. The Linux main objective

(by using the recursion so dear to the hackers) appears to be Linux itself. In

its most recent releases, even if the installation process is not difficult as

before, simple tasks as adding new hardware, playing a DVD, writing a CD or installing another piece of

software, can quickly become nightmares. Linux can’t yet match Windows on plug-and-play digital media or the more peripheral

duties.

One should continue to be optimist and hope that the next versions will finally

be simpler. The open source community is working on it, via the creation of the

Desktop Linux Consortium.

·

Ubiquity … and beyond!

Linux is considered the only

operating system that will certainly run on architectures that

have not yet been invented.

Four major segments of Linux key marketplaces are:

§

Workload consolidation - One of the

capabilities of Linux is that it makes it very

easy to take distributed work and consolidate it in larger servers. Mainframes and some large servers

support hundreds of thousands of virtual machines, each one running one copy of

Linux.

§

Clusters – Linux performs very well when

connected in clusters (due to its horizontal scalability), like the big

supercomputing clusters existing in universities and research labs.

§

Distributed enterprise – The central

management of geographically distributed servers is among the chief growth

areas for Linux.

§

Appliances –Linux is found as an embedded

operating system in all kinds of new applications, like major

network servers, file and print

servers, and quite a number of them new kinds of information appliances. It’s

very cost effective, reliable and fast. In addition, the open standards facilitate their operations.

The first Microsoft’s product – in 1975 – was BASIC,

installed in microcomputers used by hobbyists. In 1980, IBM started to develop the

personal computer (PC) and invited Microsoft to participate in the project by creating

the operating system.

Microsoft bought, from a small company called Seattle Computer, a system called

Q-DOS, and used

it as a basis for MS-DOS, which became the operating system of choice, distributed by IBM as

PC-DOS. By 1983,

Microsoft announced their planning to bring graphical computing to the IBM PC

with a product called Windows. As Bill Gates explains, “at that time two of the personal

computers on the market had graphical

capabilities: The XEROX Star and the Apple Lisa. Both were expensive, limited in

capability, and built on proprietary hardware architectures. Other

hardware companies couldn’t license the operating systems to build compatible

systems, and neither computer attracted many software companies to develop

applications. Microsoft wanted to created an open standard and bring graphical

capabilities to any computer that was running MS-DOS”.

The first Microsoft’s product – in 1975 – was BASIC,

installed in microcomputers used by hobbyists. In 1980, IBM started to develop the

personal computer (PC) and invited Microsoft to participate in the project by creating

the operating system.

Microsoft bought, from a small company called Seattle Computer, a system called

Q-DOS, and used

it as a basis for MS-DOS, which became the operating system of choice, distributed by IBM as

PC-DOS. By 1983,

Microsoft announced their planning to bring graphical computing to the IBM PC

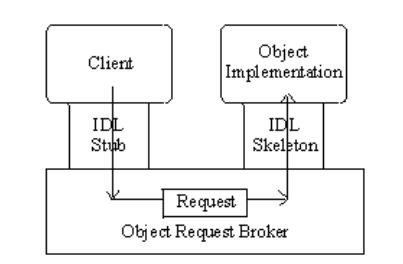

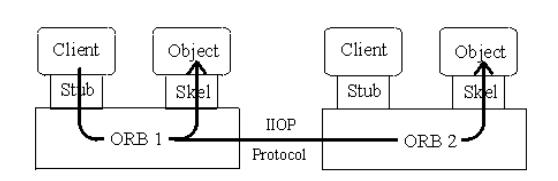

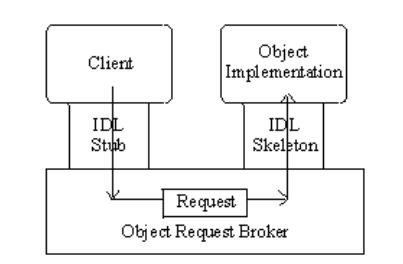

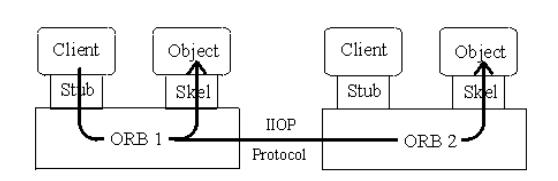

with a product called Windows. As Bill Gates explains, “at that time two of the personal